Nvidia announced its Ampere architecture, A100 GPU, and DGX A100 AI systems last week, among other things, during its first-ever Kitchen Keynote. CEO Jensen Huang boasted of the next-gen GPU technology behind these innovations and the capabilities it would unleash for users. If you are looking for an alternative way to bring Ampere in-house for GPU-accelerated HPC and artificial intelligence (AI) processing, Gigabyte is offering four new choices.

Gigabyte has announced four new servers in two server series, as shown in the product matrix below:

| CPU platform | Nvidia HGX A100 8-GPU | Nvidia HGX A100 4-GPU |
|---|---|---|
| 2nd Gen AMD Epyc | G492-ZD0 | G262-ZR0 |
| 3rd Gen Intel Xeon Scalable | G492-ID0 | G262-IR0 |

As the matrix shows, Gigabyte is offering systems that closely match the DGX A100 specification, with eight Nvidia A100 GPUs and 2nd Gen AMD Epyc (Rome) processors. If you prefer, you can instead choose a server with eight A100 GPUs and a 3rd Gen Intel Xeon Scalable processor to handle general-purpose computing and system housekeeping duties.

Perhaps you don't need the full might, and expense, of eight Ampere A100 GPUs? Then Gigabyte has you covered with the new four-GPU machines, which join its G262 family of servers, again with a choice of AMD or Intel CPUs.

At the time of writing, Gigabyte does not appear to have published official product pages for these servers. However, in similarly codenamed configurations, the AMD-based servers are dual-processor designs, while the Intel Xeon Scalable offerings use a single processor.

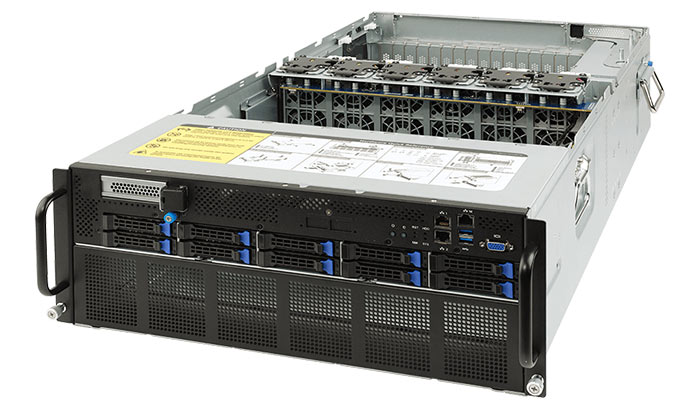

We do know a few details about the Gigabyte G262 and G492 series servers. They are modular 2U and 4U designs, respectively. Gigabyte uses a simplified, partitioned GPU and CPU layout to improve airflow and prevent heat build-up. Lastly, the systems use 80+ high-efficiency power supplies and implement N+1 redundancy to provide users with a safe data environment.