Nvidia has doubled the A100 GPU memory quota to 80GB HBM2e and shoehorned four A100 accelerator cards into a new workstation dubbed the second-gen DGX Station A100. The green team took the wraps off these new HPC products at the SC20 virtual conference yesterday. They build on the technology unveiled in Jensen Huang's kitchen (AKA the GTC digital keynote) back in May, so, yes, these new products are based upon the Ampere architecture that has only recently begun filtering down to consumers (is the filter blocked?).

"DGX Station A100 brings AI out of the data centre with a server-class system that can plug in anywhere," said Charlie Boyle, VP and GM of DGX systems at Nvidia. "Teams of data science and AI researchers can accelerate their work using the same software stack as Nvidia DGX A100 systems, enabling them to easily scale from development to deployment."

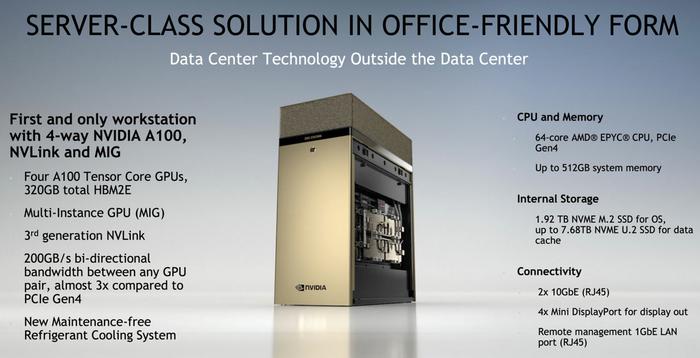

According to Nvidia, the new DGX Station is the world's only petascale workgroup server. With four A100 GPU cards inside, talking to each other via NVLink, it delivers up to 2.5 petaflops of AI performance. The quad-A100 system has up to 320GB of HBM2e at its disposal if a customer selects the new A100 GPU cards with 80GB on each. Compared with previous-gen DGX Stations, Nvidia says the latest iteration is between 3x and 4x faster in AI workloads.

Special mention is given to multi-instance GPU (MIG) as being a particular attraction of the DGX Station. Thanks to this tech, a single DGX Station A100 provides up to 28 separate GPU instances to run parallel jobs and support multiple users without impacting system performance, it is claimed.
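For readers who like to check the maths, the headline figures fall out of simple multiplication. The sketch below is purely illustrative: the function names are made up for this example, and the seven-instances-per-GPU figure is Nvidia's published MIG maximum for the A100 rather than something stated in the announcement above.

```python
# Back-of-the-envelope check of the DGX Station A100 headline figures.
# Assumption: each A100 can be split into at most seven MIG instances
# (Nvidia's published limit for the A100; not stated in the announcement).

A100_MEMORY_GB = 80          # per-GPU HBM2e on the new 80GB A100
MIG_INSTANCES_PER_A100 = 7   # assumed maximum MIG instances per A100


def total_memory_gb(gpu_count: int, memory_per_gpu_gb: int = A100_MEMORY_GB) -> int:
    """Aggregate GPU memory across all cards in a system."""
    return gpu_count * memory_per_gpu_gb


def max_mig_instances(gpu_count: int, per_gpu: int = MIG_INSTANCES_PER_A100) -> int:
    """Upper bound on MIG instances if every GPU is fully partitioned."""
    return gpu_count * per_gpu


if __name__ == "__main__":
    station_gpus = 4  # DGX Station A100 carries four A100 cards
    print(f"Total HBM2e: {total_memory_gb(station_gpus)}GB")
    print(f"Max MIG instances: {max_mig_instances(station_gpus)}")
```

With four cards this gives 320GB and 28 instances, matching Nvidia's quoted numbers.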

Nvidia already has customers using new DGX Station machines to power AI and data science projects. Examples include BMW using the DGX Station for operational-level improvements, Lockheed Martin using DGX Stations for workplace safety and cost reductions, and NTT Docomo using the workstations to develop AI-driven services for customers.

If the DGX Station workstation isn't beefy enough for your needs, Nvidia is also producing a new DGX A100 640GB system (yes, it uses eight A100 cards). These can be integrated into SuperPODs, allowing organisations to roll out turnkey supercomputers combining up to twenty DGX A100 systems.

The Nvidia DGX Station A100 and Nvidia DGX A100 640GB systems will be available this quarter through the usual partners. Upgrade options will be provided to current DGX A100 320GB customers.