Nvidia has quietly watered down the monitor specifications that a manufacturer's product needs to meet to gain G-Sync Ultimate certification. At CES 2021, Asus, LG, MSI and others launched a number of new G-Sync Ultimate gaming monitors, but @pcmonitors noticed that the spec didn't seem as demanding as it used to be. After some digging around with the Internet Archive's Wayback Machine, it found that Nvidia had significantly reworded its spec guidelines.

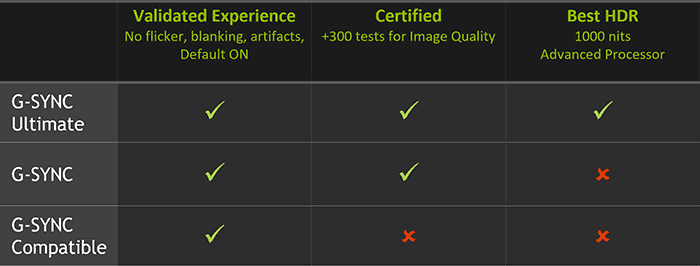

Nvidia announced its expansion of the G-Sync ecosystem back at CES 2019 and created three tiers, as we reported at the time, represented by the table below.

The main benefit of this change for the masses was that it folded lots of cheaper, accessible Adaptive Sync monitors into the G-Sync Compatible category, rather than leaving their VRR talents wasted on systems packing GeForce graphics. Meanwhile, Nvidia boasted that G-Sync Ultimate was the cream of the crop, which went some way towards justifying the prices of these monitors. A G-Sync Ultimate certified display will benefit from "a full refresh rate range from 1Hz to the display panel’s maximum rate, plus other advantages like variable overdrive, refresh rate overclocking, ultra-low motion blur display modes and industry-leading HDR with 1,000nits, full matrix backlight and DCI-P3 colour", said Nvidia at the time.

Fast-forward two years, and the current G-Sync reference chart is reproduced above. Note the pivot from the 'Best HDR 1000 nits' requirement to 'Lifelike HDR'. @pcmonitors also traced how the bar has slipped over time: G-Sync Ultimate originally meant VESA DisplayHDR 1000 with FALD (Full-Array Local Dimming), then some VESA DisplayHDR 600 models started getting the premium badge, and now it even seems to apply to some VESA DisplayHDR 400 models.

When Nvidia revealed the trio of G-Sync experience logos two years ago, the HDR qualities of the display were the key differentiator between plain G-Sync and G-Sync Ultimate labelling (both use the G-Sync hardware module monitor-side). Now Nvidia seems to have watered down the Ultimate standard, probably to help partners lift more displays into the top, premium category. The worst thing is that the changes haven’t been highlighted to consumers, who may think they are snagging a G-Sync Ultimate bargain when it is just the standard that has been lowered. At the very least, Nvidia needs to update its G-Sync certification matrix with clearer detail.

Update:

The following statement from Nvidia was received by Overclock3D:

Late last year we updated G-SYNC ULTIMATE to include new display technologies such as OLED and edge-lit LCDs.

All G-SYNC Ultimate displays are powered by advanced NVIDIA G-SYNC processors to deliver a fantastic gaming experience including lifelike HDR, stunning contrast, cinematic colour and ultra-low latency gameplay. While the original G-SYNC Ultimate displays were 1000 nits with FALD, the newest displays, like OLED, deliver infinite contrast with only 600-700 nits, and advanced multi-zone edge-lit displays offer remarkable contrast with 600-700 nits. G-SYNC Ultimate was never defined by nits alone nor did it require a VESA DisplayHDR1000 certification. Regular G-SYNC displays are also powered by NVIDIA G-SYNC processors as well.

The ACER X34 S monitor was erroneously listed as G-SYNC ULTIMATE on the NVIDIA web site. It should be listed as “G-SYNC” and the web page is being corrected.