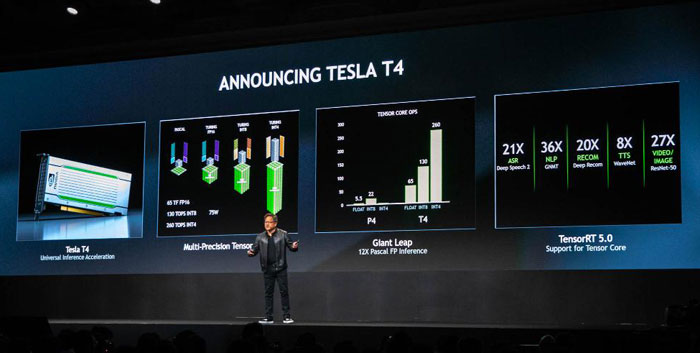

Nvidia has launched the TensorRT Hyperscale Platform, which is designed for data centres and is billed as the industry's "most advanced inference accelerator for voice, video, image, recommendation services". The foundations for this new platform are Tesla T4 GPUs, based upon the Turing architecture, and a comprehensive set of new inference software: TensorRT 5, the TensorRT inference server, and CUDA 10. Off the back of these announcements, Nvidia shared more specific, industry-targeted AI-powered products for robotics and drones, self-driving vehicles, and medical instrument design.

The vision of Nvidia and its keenest customers is that "every product and service will be touched and improved by AI," according to Ian Buck, VP and GM of Accelerated Business at the corporation. The new TensorRT Hyperscale Platform brings this future to reality "faster and more efficiently than had been previously thought possible," he asserted.

Nvidia breaks down its AI Inference Platform into bullet points as follows:

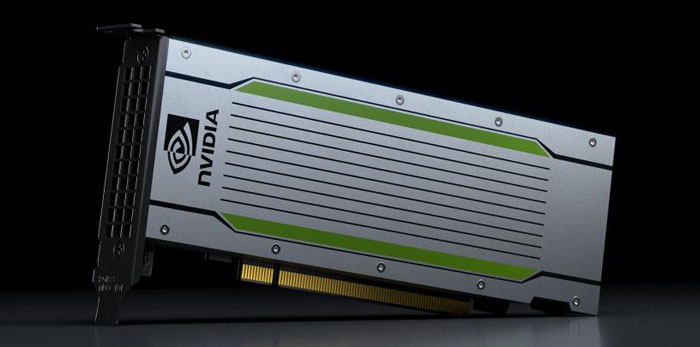

- Nvidia Tesla T4 GPU – Featuring 320 Turing Tensor Cores and 2,560 CUDA cores, this new GPU provides breakthrough performance with flexible, multi-precision capabilities, from FP32 to FP16 to INT8, as well as INT4. Packaged in an energy-efficient, 75-watt, small PCIe form factor that easily fits into most servers, it offers 65 teraflops of peak performance for FP16, 130 teraflops for INT8 and 260 teraflops for INT4.

- Nvidia TensorRT 5 – An inference optimizer and runtime engine, Nvidia TensorRT 5 supports Turing Tensor Cores and expands the set of neural network optimizations for multi-precision workloads.

- Nvidia TensorRT inference server – This containerized microservice software enables applications to use AI models in data centre production. Freely available from the NVIDIA GPU Cloud container registry, it maximizes data centre throughput and GPU utilization, supports all popular AI models and frameworks, and integrates with Kubernetes and Docker.
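The T4 figures quoted above imply that peak throughput doubles each time the precision is halved. As a rough illustration, the short sketch below works through that arithmetic, along with peak throughput per watt. It uses only the peak numbers and the 75-watt rating from Nvidia's bullet points; the function names are made up for this example, and the results are theoretical ceilings, not measured performance.

```python
# Peak T4 throughput as quoted in Nvidia's bullet points
# (trillions of operations per second at each precision).
PEAK_TERA_OPS = {"FP16": 65, "INT8": 130, "INT4": 260}

# Board power rating quoted in the same bullet point.
BOARD_POWER_WATTS = 75


def speedup_over_fp16(precision: str) -> float:
    """Ratio of peak throughput at `precision` to peak FP16 throughput."""
    return PEAK_TERA_OPS[precision] / PEAK_TERA_OPS["FP16"]


def tera_ops_per_watt(precision: str) -> float:
    """Peak throughput divided by the rated board power."""
    return PEAK_TERA_OPS[precision] / BOARD_POWER_WATTS


for precision in PEAK_TERA_OPS:
    print(
        f"{precision}: {speedup_over_fp16(precision):.0f}x FP16 peak, "
        f"{tera_ops_per_watt(precision):.2f} tera-ops per watt"
    )
```

On these quoted peaks, INT8 comes out at twice the FP16 rate and INT4 at four times, which is why lower-precision inference is attractive wherever a model tolerates it.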

The platform is already supported by heavyweight IT companies, and Nvidia shared testimonials from the likes of Microsoft (improving Bing's advanced search offerings), Cisco (data insights), HPE (enabling inference on the edge), and IBM Cognitive Systems (for 4x faster deep learning training times).

In related announcements, Nvidia made the Jetson AGX Xavier dev kit available worldwide; it was on sale for ¥149,800 at the end of the conference. Yamaha has adopted Jetson AGX Xavier for autonomous land, air, and sea machines, and other, less well-known Japanese industrials have adopted it too.

The Nvidia DRIVE AGX system dev kit became available worldwide, and Fujifilm is one of the first companies to adopt the supercomputer for healthcare, medical imaging systems, and more.

Lastly, the newly unveiled Nvidia Clara Platform, a combination of hardware and software, will bring AI to next-gen medical imaging systems to improve the diagnosis, detection and treatment of diseases.