Nvidia researchers are presenting graphics technology advances at this week's SIGGRAPH 2021. An Nvidia Special Address is scheduled for later today (8am PDT, 4pm BST), and you can watch it live via my link. Some juicy info about Nvidia's latest real-time path tracing and content creation technology has already been shared, and you will find it outlined below. Two particular highlights are Real-Time Path Tracing and Neural Radiance Caching.

Real-Time Path Tracing

Path tracing is one way to make the light in a rendered scene more realistic. Thanks to its RTX platform, which combines ray tracing and AI processing hardware, Nvidia can enable Real-Time Path Tracing. In the embedded highlights video, Nvidia shows off this tech in an animated theatre scene with dynamic lighting.

On the Nvidia blog, the graphics chipmaker describes the use of Real-Time Path Tracing in a forest scene featuring a tiger. The tech can help render a realistic scene where "dappled sunlight filters through the leaves on the trees and grows hazy among the water molecules suspended in the foggy air." Meanwhile, the tiger prowls, illuminated by the complex light of the forest, with its reflection visible in the muddy pond in the foreground.

Naturally, this is a complex scene, with rich visuals featuring both direct and indirect reflections. Rendering it is a task "far too resource-hungry to solve in real time," writes Nvidia, but its researchers have created a path-sampling algorithm that "prioritizes the light paths and reflections most likely to contribute to the final image," which Nvidia says renders images over 100x more quickly than before.
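Nvidia doesn't spell out its algorithm here, so what follows is only a generic illustration of the idea of prioritizing the paths most likely to matter, not Nvidia's method. It is a minimal Python sketch of weighted reservoir sampling, a streaming form of importance sampling: from many candidate light paths it keeps one with probability proportional to an estimated contribution, then divides by that probability so the estimate stays unbiased. The function names and the list of path contributions are hypothetical stand-ins.

```python
import random

def reservoir_sample(candidates, weight_fn, rng=random):
    """Single pass over candidates, keeping one with probability
    proportional to weight_fn(candidate) (weighted reservoir sampling)."""
    chosen, total = None, 0.0
    for c in candidates:
        w = weight_fn(c)
        if w <= 0.0:
            continue  # zero-weight paths can never be selected
        total += w
        # Replace the current pick with probability w / running total
        if rng.random() < w / total:
            chosen = c
    return chosen, total

def estimate_sum(candidates, contribution, weight_fn, rng=random):
    """One-sample importance-sampled estimate of the summed contribution.
    Unbiased as long as weight_fn > 0 wherever contribution > 0."""
    chosen, total = reservoir_sample(candidates, weight_fn, rng)
    if chosen is None:
        return 0.0
    pdf = weight_fn(chosen) / total  # probability that `chosen` was picked
    return contribution(chosen) / pdf

# Hypothetical per-path contributions. In a real renderer the weight would be
# a cheap guess at each path's contribution, not the exact value.
paths = [0.1, 5.0, 0.2, 2.0]
print(estimate_sum(paths, lambda p: p, lambda p: p, random.Random(0)))
```

Bright paths get picked far more often than dim ones, so effort goes where it counts. In the idealized case where the weights equal the true contributions, every single sample returns the exact total (up to floating-point rounding); the better the cheap weights approximate the real contributions, the lower the noise.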

Neural Radiance Caching

Neural Radiance Caching is a global illumination technique that Nvidia describes as a "breakthrough". According to Nvidia, the technique uses RT Cores for ray tracing and Tensor Cores for AI acceleration to train a tiny neural network live while rendering a dynamic scene. The network learns how light is distributed throughout the scene and is capable of evaluating "over a billion global illumination queries per second when running on an Nvidia GeForce RTX 3090 GPU". Referring back to the video embedded above, this allows the tiger model in the scene to feature dense fur with rich lighting detail at interactive frame rates.
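To make the "train while rendering" idea concrete, here is a heavily simplified Python sketch; it is not Nvidia's implementation. A tiny one-hidden-layer network stands in for the radiance cache and gets a few plain SGD updates every frame from a small budget of "expensive" samples. The scene is reduced to one dimension, and `traced_radiance` is a hypothetical stand-in for a path-traced estimate whose lighting drifts from frame to frame. Once trained, the cache answers a query with one cheap forward pass.

```python
import math
import random

class TinyMLP:
    """1 input -> tanh hidden layer -> 1 output, trained online with SGD."""

    def __init__(self, n_hidden, rng):
        self.w1 = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
        self.b2 = 0.0

    def _hidden(self, x):
        return [math.tanh(w * x + b) for w, b in zip(self.w1, self.b1)]

    def query(self, x):
        """Cheap cache lookup: a single forward pass."""
        return sum(w * h for w, h in zip(self.w2, self._hidden(x))) + self.b2

    def train_step(self, x, target, lr):
        """One SGD step on the squared error 0.5 * (prediction - target)^2."""
        h = self._hidden(x)
        err = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2 - target
        for j, hj in enumerate(h):
            # Backprop through tanh, using w2[j] before it is updated
            g = err * self.w2[j] * (1.0 - hj * hj)
            self.w2[j] -= lr * err * hj
            self.w1[j] -= lr * g * x
            self.b1[j] -= lr * g
        self.b2 -= lr * err

def traced_radiance(x, frame):
    """Hypothetical stand-in for an expensive path-traced radiance sample.
    The lighting pattern shifts slightly every frame (a dynamic scene)."""
    return 0.5 + 0.4 * math.sin(2.0 * math.pi * (x + 0.01 * frame))

def cache_error(cache, frame, n=101):
    """Mean squared error of the cache against one frame's lighting."""
    xs = [i / (n - 1) for i in range(n)]
    return sum((cache.query(x) - traced_radiance(x, frame)) ** 2 for x in xs) / n

def render(n_frames, samples_per_frame=64, lr=0.05, seed=0):
    """Each frame: train on a few traced samples, then (in a real renderer)
    answer the bulk of lighting queries from the cache."""
    rng = random.Random(seed)
    cache = TinyMLP(n_hidden=16, rng=rng)
    for frame in range(n_frames):
        for _ in range(samples_per_frame):
            x = rng.random()
            cache.train_step(x, traced_radiance(x, frame), lr)
    return cache
```

For example, `cache_error(render(200), 199)` should come out well below the error of a freshly initialized `TinyMLP`, even though the lighting has been moving throughout. The design choice this mirrors is that training cost is bounded by a small per-frame sample budget, while lookups stay cheap.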

LOD problem

Nvidia also mentions that it has been working on the level of detail (LOD) problem. It gives the example of a tree, which is made up of complex, detailed objects like leaves, branches and bark. Unless you are very close to the tree or zoomed in on it, this level of detail isn't useful, and rendering it would be very inefficient. Its researchers have devised a new approach that "generates simplified models automatically based on an inverse rendering method," says the Nvidia blog. It further explains that this technique allows creators to "generate simplified models that are optimized to appear indistinguishable from the originals, but with drastic reductions in their geometric complexity."
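The blog doesn't detail the method, so the following Python code is only a toy analogue of the general inverse-rendering idea: judge a simplified model by how it looks once rendered, and adjust its parameters until its rendering matches the original's. Here the "object" is a 1D profile with fine detail (a stand-in for leaves and bark), the "renderer" averages the profile over each pixel's footprint, roughly what happens when an object is seen from far away, and the simplified model is a piecewise-linear curve with only eight control points fitted by gradient descent on the image-space error. All names and parameters are hypothetical.

```python
import math

def detailed_profile(x):
    """Hypothetical 'original' model: a smooth shape plus fine detail."""
    return 0.5 + 0.3 * math.sin(2 * math.pi * x) + 0.05 * math.sin(80 * math.pi * x)

def pixel_positions(pixel, n_pixels, subsamples):
    """Evenly spaced sample positions inside one pixel's footprint on [0, 1]."""
    return [(pixel + (k + 0.5) / subsamples) / n_pixels for k in range(subsamples)]

def render_original(n_pixels, subsamples):
    """Box-filtered 'render': each pixel is the average over its footprint."""
    return [sum(detailed_profile(x) for x in pixel_positions(p, n_pixels, subsamples))
            / subsamples for p in range(n_pixels)]

def render_matrix(n_knots, n_pixels, subsamples):
    """Rendering a piecewise-linear model is linear in its knot values:
    pixel p = sum_j A[p][j] * knots[j]. Build A once."""
    A = [[0.0] * n_knots for _ in range(n_pixels)]
    for p in range(n_pixels):
        for x in pixel_positions(p, n_pixels, subsamples):
            s = x * (n_knots - 1)
            i = min(int(s), n_knots - 2)  # segment containing x
            u = s - i                     # position within the segment
            A[p][i] += (1.0 - u) / subsamples
            A[p][i + 1] += u / subsamples
    return A

def image_loss(A, knots, target):
    """Mean squared difference between the simplified and original renders."""
    return sum((sum(a * k for a, k in zip(row, knots)) - t) ** 2
               for row, t in zip(A, target)) / len(target)

def fit_simplified(n_knots=8, n_pixels=32, subsamples=8, steps=1000, lr=0.5):
    """Inverse-rendering-style fit: gradient descent on image-space error."""
    target = render_original(n_pixels, subsamples)
    A = render_matrix(n_knots, n_pixels, subsamples)
    knots = [0.0] * n_knots
    for _ in range(steps):
        residual = [sum(a * k for a, k in zip(row, knots)) - t
                    for row, t in zip(A, target)]
        grad = [2.0 / n_pixels * sum(A[p][j] * residual[p] for p in range(n_pixels))
                for j in range(n_knots)]
        knots = [k - lr * g for k, g in zip(knots, grad)]
    return knots, A, target
```

A naive simplification that just samples the detailed profile at the eight knot positions bakes aliased fine detail into the coarse curve. Because `fit_simplified` optimizes what the viewer actually sees, its knots should give a lower `image_loss` than that naive version at the same, much smaller, parameter count.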

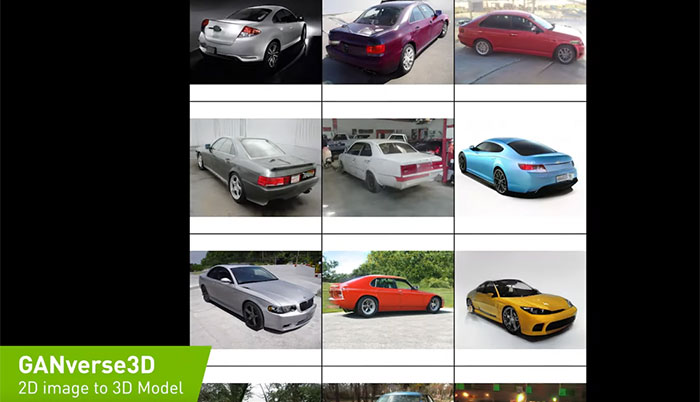

In the Nvidia graphics research highlights video embedded above, you will also see various efficient and intelligent object rendering techniques, including those based on Generative Adversarial Networks (GANs), which we have covered previously in HEXUS news.