Nvidia has published a blog post describing how deep learning technology "makes Turing's graphics scream". Interestingly, the post focuses on how Deep Learning Super Sampling, or DLSS, provides a "leap forward" in graphics processing on Turing. DLSS runs on the GPU's Tensor cores, which eases the burden on the CUDA cores. It effectively replaces TAA (Temporal Anti-Aliasing), and Nvidia claims it also delivers better image quality.

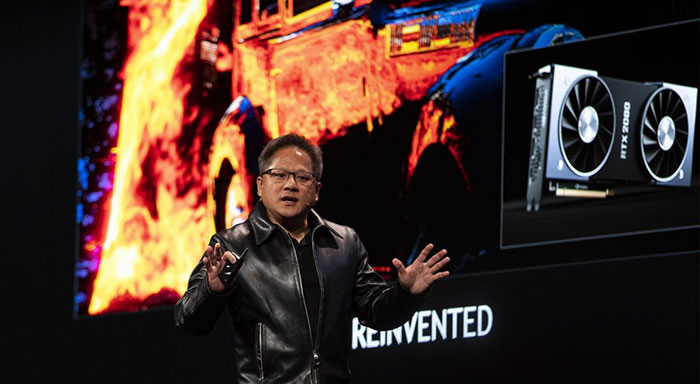

The blog post references Nvidia CEO Jensen Huang's speech at GTC Europe last week:

"If we can create a neural network architecture and an AI that can infer and can imagine certain types of pixels, we can run that on our 114 teraflops of Tensor Cores, and as a result increase performance while generating beautiful images. Well, we've done so with Turing with computer graphics."

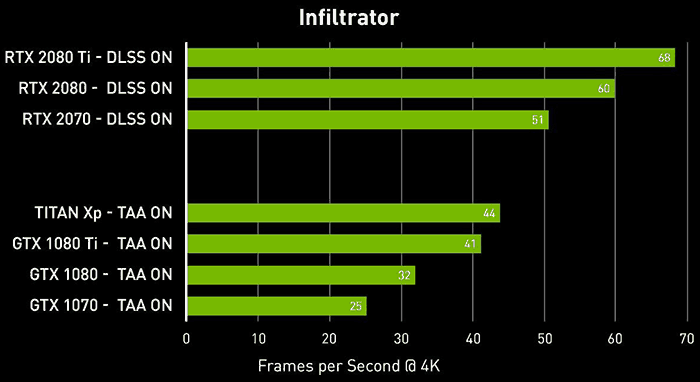

With DLSS, some pixels are generated by the shaders while others are 'imagined' by the AI. Nvidia also shared a comparative performance chart that it hopes demonstrates the breakthrough, as below.

Commenting on the chart, the Nvidia CEO boasted, "In each and every series, the Turing GPU is twice the performance." However, that statement doesn't quite line up with the numbers. It would have been useful for the chart to include FPS measurements of the new RTX cards running these 4K demos with TAA, and with no anti-aliasing at all, but that would have made the chart quite a bit bigger and less impactful for Huang's point.

According to the small print, the 4K benchmarks were run with maximum settings dialled in and all GameWorks effects enabled. The rest of the test system comprised a Core i9-7900X 3.3GHz CPU, 16GB of Corsair DDR4 memory, Windows 10 (v1803) 64-bit, and Nvidia's v416.25 drivers.