Nvidia's big announcement at Gamescom this year is a trio of GeForce RTX 20-series graphics cards. A lot has been made of their forward-looking features, which promise far more impressive reflections through raytracing and improved anti-aliasing performance via AI, amongst other benefits.

We already know that Turing, the architecture underpinning RTX 20-series, will combine traditional rasterisation (SM cores) with raytracing (RT cores) and AI (Tensor cores) for best-in-class image quality, but the question on most enthusiasts' lips is just how fast these cards are going to be in present games.

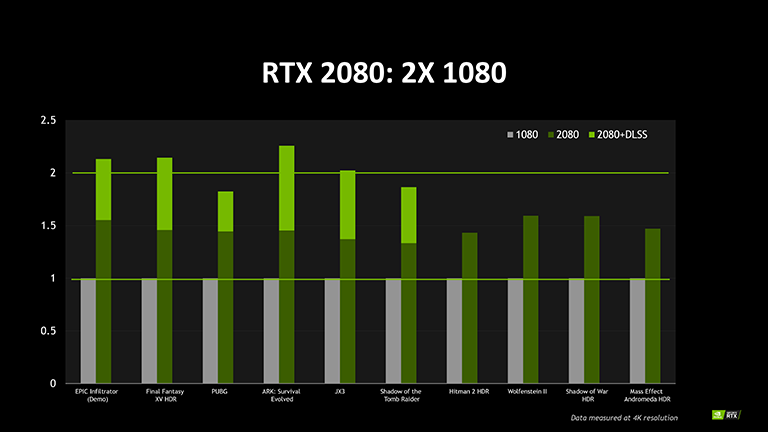

Nvidia has previously alluded to RTX 20-series being fundamentally better than the incumbent 10-series for pure rasterisation - in other words, for the games you play today - and now we can shed some light by sharing a performance slide from a presentation that backs up this assertion.

Performance slide provided by Nvidia

Comparing the GeForce RTX 2080 against the GeForce GTX 1080 at a 4K resolution - a model-to-model comparison more than a price-to-price one - we see the new GPU is up to 50 per cent faster in today's games when benchmarked at the same image-quality settings. That figure is without activating the machine-learning-based DLSS anti-aliasing; with DLSS enabled, the RTX 2080 is said to be 2x faster than the GTX 1080 running traditional TAA.

That's a goodly amount. Understanding how it achieves this performance uptick requires an appreciation of the additional efficiencies present in the Turing SM block (think of how the Volta architecture works), plus simply more shaders and memory bandwidth: 2,944 vs. 2,560 shaders and 448GB/s vs. 320GB/s, respectively.
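To put those spec-sheet figures in perspective, here is a rough back-of-the-envelope sketch in Python. It only computes the percentage increase in shader count and memory bandwidth quoted above; the names `SHADERS`, `BANDWIDTH_GBPS` and `uplift_pct` are our own for illustration, and the calculation deliberately ignores clock speeds and any architectural efficiencies, so it is not a performance model.

```python
# Spec-sheet figures quoted in the article (RTX 2080 vs. GTX 1080).
SHADERS = {"RTX 2080": 2944, "GTX 1080": 2560}
BANDWIDTH_GBPS = {"RTX 2080": 448, "GTX 1080": 320}


def uplift_pct(new: float, old: float) -> float:
    """Return the percentage increase of `new` over `old`."""
    return (new / old - 1.0) * 100.0


# Raw resource increases; clocks and per-SM efficiency are NOT considered.
shader_uplift = uplift_pct(SHADERS["RTX 2080"], SHADERS["GTX 1080"])
bandwidth_uplift = uplift_pct(
    BANDWIDTH_GBPS["RTX 2080"], BANDWIDTH_GBPS["GTX 1080"]
)

print(f"Shader count uplift:     {shader_uplift:.0f} per cent")
print(f"Memory bandwidth uplift: {bandwidth_uplift:.0f} per cent")
```

The shader count rises by about 15 per cent and memory bandwidth by 40 per cent, so on raw resources alone neither figure reaches the up-to-50-per-cent uplift Nvidia is claiming; the remainder would have to come from the efficiency gains in the Turing SM.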

Interestingly, that purported performance increase ought to put it a shade or two above a GeForce GTX 1080 Ti.

There you go. Finally, some hard-and-fast numbers on what makes RTX 2080, and the rest of the RTX line, a better gaming GPU than its predecessor. The numbers are better than a high-level perusal of the spec sheet would suggest.

Of course, it is always prudent to treat manufacturer-provided numbers with caution; they will inevitably present a best-case scenario to illustrate generational performance uplift, and our own upcoming testing ought to separate the wheat from the chaff.

Is a potential 50 per cent enough of a performance hike in your opinion, especially when one factors in the matching 50 per cent pricing hike? We look forward to hearing your thoughts. Fire away.