Introduction

Nvidia is on a mission to improve PC graphics by encouraging the industry to adopt technologies such as ray tracing and machine-learning-based image enhancement. To this end, the GeForce RTX series of cards has dedicated hardware - RT and Tensor cores - to accelerate the computationally-heavy work involved in producing more realistic-looking images. Available in RTX 2080 Ti, 2080, 2070, and 2060 forms, each model combines these features with an all-new architecture known as Turing.

It's fair to say that GeForce RTX has got off to a stumbling start, one certainly not helped by a significant delay in games engines using Nvidia's technology. Yet there is cause for optimism in the green camp as a few parts of the jigsaw fall into place, including the clearing of older 10-series GPUs from the channel, games launching with RTX-specific technology in tow, and AMD limited in what it can put forward as a credible high-end rival.

Nvidia does still face a problem or two. The first, ironically, is the RTX 2060, which, though a thoroughly decent performer at the £315-£370 price point, is painfully expensive to produce. Why? Because it uses the same massive die - 10.8bn transistors, 445mm² - as the RTX 2070. The GPU's innate horsepower also happens to be barely enough to drive solid performance in titles that do take advantage of its reduced-capacity RT and Tensor tech.

What Nvidia really needs is a specific, custom Turing die, much smaller and more power-efficient, explicitly designed for the premium-mainstream market. One that is bereft of those space-taking RT and Tensor cores. Such a GPU ought to play nicely at the £250 price point, you would think.

As it happens, such a GPU is formally unveiled today. Enter the GeForce GTX 1660 Ti.

GeForce: from GTX to RTX to GTX

| | RTX 2080 Ti | GTX 1080 Ti | RTX 2080 | GTX 1080 | RTX 2070 | GTX 1070 | RTX 2060 | GTX 1660 Ti | GTX 1060 |
|---|---|---|---|---|---|---|---|---|---|
| Launch date | Sep 2018 | Mar 2017 | Sep 2018 | May 2016 | Oct 2018 | May 2016 | Jan 2019 | Feb 2019 | May 2016 |
| Codename | TU102 | GP102 | TU104 | GP104 | TU106 | GP104 | TU106 | TU116 | GP106 |
| Architecture | Turing | Pascal | Turing | Pascal | Turing | Pascal | Turing | Turing | Pascal |
| Process (nm) | 12 | 16 | 12 | 16 | 12 | 16 | 12 | 12 | 16 |
| Transistors (bn) | 18.6 | 12 | 13.6 | 7.2 | 10.8 | 7.2 | 10.8 | 6.6 | 4.4 |
| Die Size (mm²) | 754 | 471 | 545 | 314 | 445 | 314 | 445 | 284 | 200 |
| Base Clock (MHz) | 1,350 | 1,480 | 1,515 | 1,607 | 1,410 | 1,506 | 1,365 | 1,500 | 1,506 |
| Boost Clock (MHz) | 1,545 | 1,582 | 1,710 | 1,733 | 1,620 | 1,683 | 1,680 | 1,770 | 1,708 |
| Founders Edition Clock (MHz) | 1,635 | - | 1,800 | - | 1,710 | - | 1,680 | - | 1,708 |
| Shaders | 4,352 | 3,584 | 2,944 | 2,560 | 2,304 | 1,920 | 1,920 | 1,536 | 1,280 |
| GFLOPS | 13,448 | 11,340 | 10,068 | 8,873 | 7,465 | 6,463 | 6,221 | 5,437 | 3,855 |
| Founders Edition GFLOPS | 14,231 | - | 10,598 | - | 7,880 | - | 6,221 | - | 3,855 |
| Tensor Cores | 544 | - | 368 | - | 288 | - | 240 | - | - |
| RT Cores | 68 | - | 46 | - | 36 | - | 30 | - | - |
| Memory Size | 11GB | 11GB | 8GB | 8GB | 8GB | 8GB | 6GB | 6GB | 6GB |
| Memory Bus | 352-bit | 352-bit | 256-bit | 256-bit | 256-bit | 256-bit | 192-bit | 192-bit | 192-bit |
| Memory Type | GDDR6 | GDDR5X | GDDR6 | GDDR5X | GDDR6 | GDDR5 | GDDR6 | GDDR6 | GDDR5 |
| Memory Clock | 14Gbps | 11Gbps | 14Gbps | 10Gbps | 14Gbps | 8Gbps | 14Gbps | 12Gbps | 8Gbps |
| Memory Bandwidth (GB/s) | 616 | 484 | 448 | 320 | 448 | 256 | 336 | 288 | 192 |
| ROPs | 88 | 88 | 64 | 64 | 64 | 64 | 48 | 48 | 48 |
| Texture Units | 272 | 224 | 184 | 160 | 144 | 120 | 120 | 96 | 80 |
| L2 cache (KB) | 5,632 | 2,816 | 4,096 | 2,048 | 4,096 | 2,048 | 3,072 | 1,536 | 1,536 |
| Power Connector | 8-pin + 8-pin | 8-pin + 6-pin | 8-pin + 6-pin | 8-pin | 8-pin | 8-pin | 8-pin | 8-pin | 6-pin |
| TDP (watts) | 250 | 250 | 215 | 180 | 175 | 150 | 160 | 120 | 120 |
| Founders Edition TDP (watts) | 260 | - | 225 | - | 185 | - | 160 | - | - |
| Suggested MSRP | $999 | $699 | $699 | $549 | $499 | $379 | $349 | $279 | $249 |
| Founders Edition MSRP | $1,199 | $699 | $799 | $699 | $599 | $449 | $349 | - | $299 |

What's in a Name?

Let's get the confusing bit out of the way first: the GPU's name. Nvidia believes it is the logical successor to mainstream champs of yore. GTX 1060 and GTX 960 sold in droves, so it would normally have made sense to call this the GeForce GTX 2060. The rather large problem is that RTX 2060 exists, and having both RTX 2060 and GTX 2060 coexist with different feature sets would be a marketeer's nightmare. Nvidia could have got away with, say, GTX 2050, but the thinking is that 20-series GPUs need to have the aforementioned forward-looking tech intact.

That's the rationale behind the unusual naming scheme, and though we don't like it per se, there's not much else Nvidia can do with the current stack the way it is. The Ti suffix, meanwhile, all but guarantees that a cheaper, non-Ti card is around the corner.

Architecture Composition

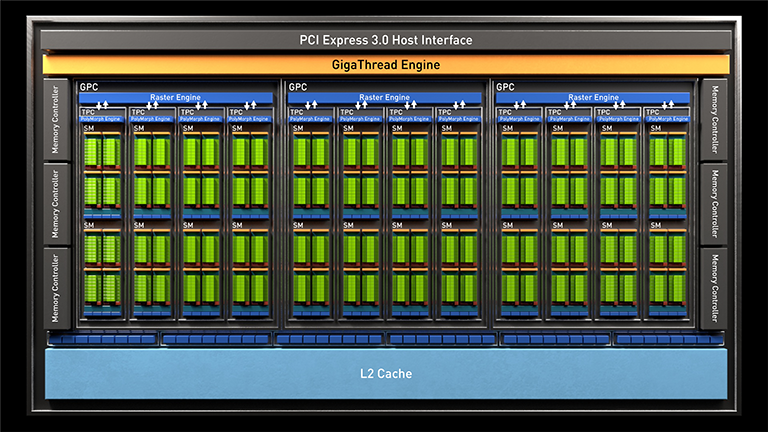

The full-fat TU116 die; much smaller than the RTX blueprint

The TU116 block diagram sheds light on the GeForce GTX 1660 Ti's architectural provenance. Composed of three GPCs each holding eight SMs, which in turn each hold 64 single-precision CUDA cores, there are 1,536 shaders on tap. Each SM also holds four texture units (96 in sum). On the back end reside 48 ROPs connected to a 192-bit (6 x 32-bit) memory controller interfacing with GDDR6 memory. The design is what we'd expect from a $250-ish card for 2019.
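For readers who like to check the sums, the unit counts quoted above fall out of simple multiplication. The short Python sketch below is purely illustrative: the constant and function names are our own, and the only inputs are the per-GPC, per-SM and per-controller figures given in the text.

```python
# Building blocks of the full TU116 die, as quoted in the text.
GPCS = 3                   # graphics processing clusters
SMS_PER_GPC = 8            # streaming multiprocessors per GPC
CUDA_CORES_PER_SM = 64     # single-precision shaders per SM
TEXTURE_UNITS_PER_SM = 4
MEMORY_CONTROLLERS = 6     # each one 32 bits wide
BITS_PER_CONTROLLER = 32


def tu116_totals():
    """Return headline unit counts derived from the building blocks."""
    sms = GPCS * SMS_PER_GPC
    return {
        "sms": sms,
        "shaders": sms * CUDA_CORES_PER_SM,
        "texture_units": sms * TEXTURE_UNITS_PER_SM,
        "bus_width_bits": MEMORY_CONTROLLERS * BITS_PER_CONTROLLER,
    }


for name, value in tu116_totals().items():
    print(f"{name}: {value}")
```

The totals line up with the GTX 1660 Ti column in the specification table: 1,536 shaders, 96 texture units and a 192-bit bus.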

It's key to understand that Nvidia has fundamentally redesigned each SM for this class of GPU, compared to the enthusiast RTX models, which is a first as far as we can tell. Remember that each RTX's SM has baked-in RT and Tensor cores. These are removed this time around - not just dormant - and it is one reason why the RTX 2060 is a whopping 57 per cent bigger than GTX 1660 Ti. In order to save more space and power, Nvidia also chops the buffering L2 cache in half - 1,536KB vs. 3,072KB - believing that streamlining the architecture reduces the need for space-hogging cache.

Even so, the TU116 feels a bit big given its 12nm process. 2016's GeForce GTX 1070 based on older Pascal technology is not much larger and ought to benchmark at least as well as GTX 1660 Ti. The machinations of silicon, eh.

Speeds and Feeds

Keeping to a leaner design enables Nvidia to increase the base and boost frequencies to 1,500MHz and 1,770MHz, respectively, helping ameliorate the shader deficit to RTX 2060. Still, GTX 1660 Ti faces a 12.6 per cent FP32 TFLOPS shortfall. Meanwhile, though GTX 1660 Ti has the same 6GB framebuffer and 192-bit interface, memory speed drops from 14Gbps to 12Gbps.
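As a rough check on that shortfall, peak FP32 throughput is conventionally worked out as shaders x 2 operations per clock (one fused multiply-add) x boost frequency. The sketch below applies that convention to GTX 1660 Ti and compares the result against the RTX 2060 GFLOPS figure taken as given from the specification table; the function and variable names are ours.

```python
def fp32_gflops(shaders, boost_mhz):
    """Peak FP32 throughput, assuming one FMA (2 FLOPs) per shader per clock."""
    return shaders * 2 * boost_mhz / 1000


# GTX 1660 Ti: 1,536 shaders at a 1,770MHz boost clock.
gtx_1660_ti_gflops = fp32_gflops(1536, 1770)

# RTX 2060 figure taken directly from the table, not recomputed here.
RTX_2060_TABLE_GFLOPS = 6221

shortfall_pct = (1 - gtx_1660_ti_gflops / RTX_2060_TABLE_GFLOPS) * 100

print(f"GTX 1660 Ti: {gtx_1660_ti_gflops:.0f} GFLOPS")
print(f"Shortfall vs. RTX 2060: {shortfall_pct:.1f} per cent")
```

Peak GFLOPS is a paper figure, of course, and says nothing about how well either architecture keeps its shaders fed in real games.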

The upshot, in pure rasterisation terms, is that GTX 1660 Ti ought to benchmark at around 85 per cent of the RTX 2060's levels. Is that enough to justify the $279 (£259) price point attributed to the cheapest partner cards, especially given that they don't feature the much-publicised RT or Tensor tech? That's a tricky one. We'd really like to see the GTX 1660 Ti come in at $249, properly differentiating GTX and RTX.

Nvidia is keener, of course, to extol the virtues of GTX 1660 Ti vs. Pascal-based GTX 1060 and, looking further back, GTX 960. Compared to its 10-series cousin, the new GPU is significantly more powerful from a pure specification viewpoint. That only tells half the story, mind, as the Turing architecture, clock for clock, offers further meaty speed-ups thanks to concurrent integer and floating-point execution, an improved cache and shared-memory setup, and an extra 50 per cent memory bandwidth. So it should, as the wildly popular GTX 1060 is nearing its third birthday.
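The 50 per cent bandwidth uplift follows directly from the memory specifications. Peak bandwidth in GB/s is the bus width in bits multiplied by the per-pin data rate in Gbps, divided by eight to convert bits to bytes. A minimal sketch, with a helper name of our own choosing:

```python
def memory_bandwidth_gbs(bus_width_bits, data_rate_gbps):
    """Peak memory bandwidth in GB/s: bits per transfer x Gbps per pin / 8."""
    return bus_width_bits * data_rate_gbps / 8


gtx_1660_ti = memory_bandwidth_gbs(192, 12)  # 192-bit GDDR6 at 12Gbps
gtx_1060 = memory_bandwidth_gbs(192, 8)      # 192-bit GDDR5 at 8Gbps

uplift_pct = (gtx_1660_ti / gtx_1060 - 1) * 100

print(f"GTX 1660 Ti: {gtx_1660_ti:.0f} GB/s")
print(f"GTX 1060: {gtx_1060:.0f} GB/s")
print(f"Uplift: {uplift_pct:.0f} per cent")
```

With the bus width unchanged at 192 bits, the entire gain comes from the move from 8Gbps GDDR5 to 12Gbps GDDR6.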

The sum of these changes benefits games on a case-by-case basis - some prefer concurrent INT and FP execution (Shadow of the Tomb Raider), others are more agreeable to the unified cache (COD: BLOPS 4). Nvidia says these architecture improvements make more sense for how modern games engines have evolved, citing a widening performance gap between GTX 1060 and GTX 1660 Ti as time goes on.

Given its positioning, GeForce GTX 1660 Ti escapes the usual Founders Edition treatment, sadly, so it's up to partners to come up with their own designs on the 120W TDP GPU. Segueing nicely, let's take a look at such a partner card.