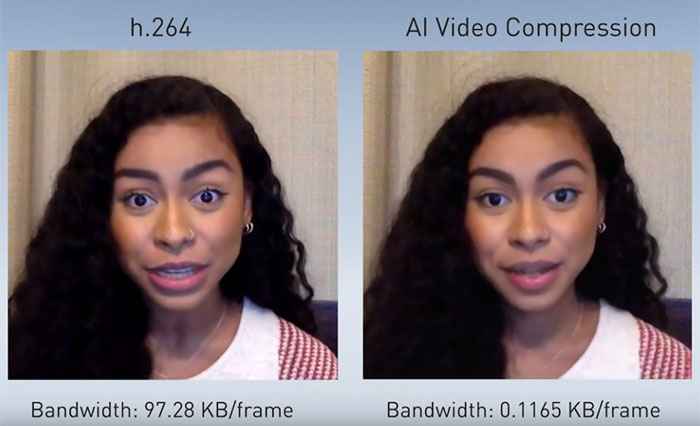

Nvidia researchers have invented a technique they are calling AI Video Compression. The research was revealed at GTC 2020 last week, where during a Q&A session Nvidia CEO Jensen Huang admitted there would be a shortage of GeForce Ampere graphics cards until 2021.

AI Video Conferencing could save users, networks, and ISPs a lot of data if widely implemented. Nvidia says that in the current Covid-19-inspired WFH era there are more than 10 million video conferencing streams live at any given time. Imagine that data being cut by a factor of 10.

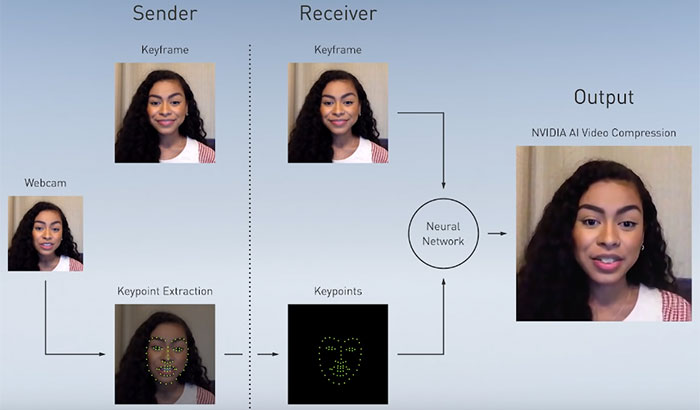

The AI Video Conferencing technology replaces a traditional codec with a neural network. How it works is sketched out in its simplest terms in the diagram above. Like traditional video, it starts with a keyframe pixel image. From then on, however, it transmits only keypoints of the face, describing eye, face, mouth, and head movement, as data. On the receiving end, a neural network (a generative adversarial network, or GAN) reconstructs your video conference partner's face.
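To get a feel for why sending keypoints instead of pixels saves so much data, here is a minimal Python sketch of the keyframe-then-keypoints idea. It is not Nvidia's implementation: the keypoint count, the frame size, the 16-bit packing format, and all function names are assumptions made for illustration, and the GAN reconstruction step is left out entirely. The comparison is against uncompressed RGB frames rather than H.264, so the ratio it produces says nothing about real-world codec savings.

```python
import struct

# Hypothetical values chosen for illustration; the article does not state them.
NUM_KEYPOINTS = 10            # assumed number of facial keypoints per frame
FRAME_W, FRAME_H = 640, 360   # assumed frame size for the raw-pixel comparison


def encode_keypoints(points):
    """Pack (x, y) keypoints as little-endian 16-bit unsigned integers."""
    flat = [coord for point in points for coord in point]
    return struct.pack(f"<{len(flat)}H", *flat)


def decode_keypoints(payload):
    """Inverse of encode_keypoints: bytes back to a list of (x, y) tuples."""
    count = len(payload) // 2
    flat = struct.unpack(f"<{count}H", payload)
    return list(zip(flat[0::2], flat[1::2]))


def stream_sizes(num_frames):
    """Return (raw_bytes, keypoint_stream_bytes) for a clip of num_frames frames."""
    raw_frame = FRAME_W * FRAME_H * 3        # uncompressed 8-bit RGB frame
    keypoint_frame = NUM_KEYPOINTS * 2 * 2   # x and y, 2 bytes each
    raw_total = raw_frame * num_frames
    # One full pixel keyframe up front, then keypoints only for later frames.
    keypoint_total = raw_frame + keypoint_frame * (num_frames - 1)
    return raw_total, keypoint_total
```

Under these assumptions, each frame after the keyframe costs 40 bytes of keypoint data instead of 691,200 bytes of raw pixels. The receiver would then need a generative model to turn the keyframe plus each set of keypoints back into an image, which is the part the neural network handles.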

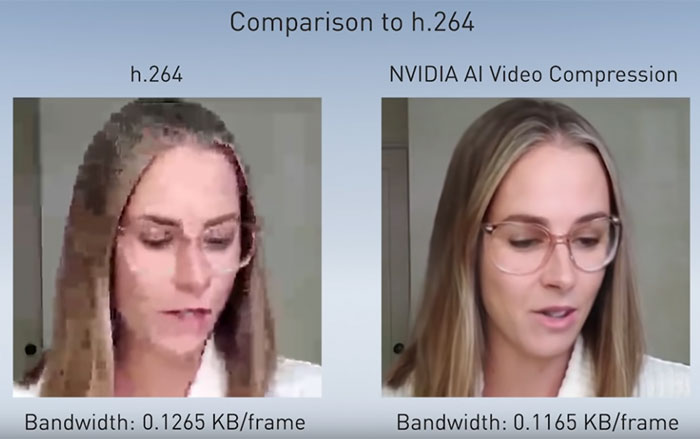

Nvidia researchers provided another comparison to illustrate the usefulness and quality of AI Video Conferencing. In the above example screenshot you can see that the H.264 codec has been constrained to very low bandwidth, approximately the same as the AI technique uses. The result is that the H.264 video is visibly pixelated and smeared, and generally low quality.

The above technique can be applied whether or not the subjects are wearing masks, headphones, etc. Other applications are to manipulate the caller's face so it is looking straight at the camera, or to swap the person's face for an animated character.

Nvidia AI Video Conferencing is just one part of the Nvidia Maxine Cloud-AI Video Streaming Platform. It also includes AI-based super-resolution, noise removal, conversational AI translations, and face re-animation in real time.