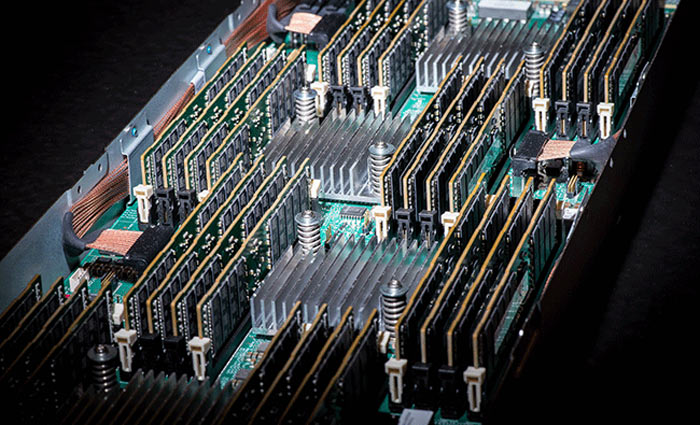

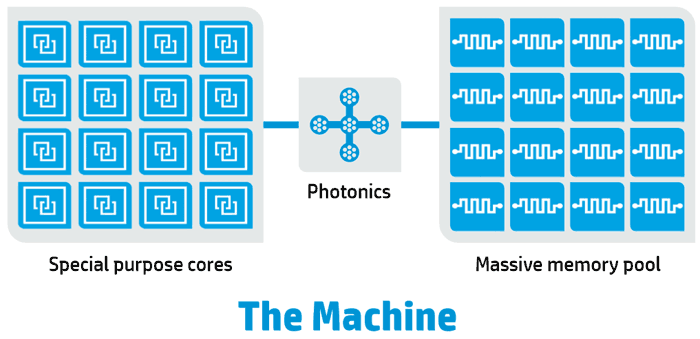

Hewlett Packard Enterprise (HPE) has successfully demonstrated a Memory-Driven Computing prototype. The proof-of-concept computer, developed as part of The Machine research program, "puts memory, not processing, at the centre of the computing platform to realise performance and efficiency gains not possible today". HPE brought the prototype online in October and has been testing the system and software since then.

With the number of connected devices expected to exceed 20bn by 2020, and a deluge of data predicted to come with them, HPE reckons a completely new computer architecture is needed. According to HPE, the volume of data produced by users and their devices is growing faster than existing computing architectures can process, store, manage and secure it. That is the impetus behind HPE's research into The Machine.

The public demonstration of a Memory-Driven Computing prototype is a significant milestone. "With this prototype, we have demonstrated the potential of Memory-Driven Computing and also opened the door to immediate innovation. Our customers and the industry as a whole can expect to benefit from these advancements as we continue our pursuit of game-changing technologies," said Antonio Neri, EVP & GM of the Enterprise Group at HPE.

In the demonstration, all the essential building blocks of the new architecture worked together as expected. The following were demonstrated:

- Compute nodes accessing a shared pool of Fabric-Attached Memory;

- An optimized Linux-based operating system (OS) running on a customized System on a Chip (SOC);

- Photonics/optical communication links, including the new X1 photonics module, online and operational; and

- New software programming tools designed to take advantage of abundant persistent memory.

8,000x improved execution speeds

In the pre-prototype stages of The Machine project, HPE predicted that Memory-Driven Computing architectures could improve computation speeds "by multiple orders of magnitude". Testing of the prototype has confirmed these expectations: new software written specifically to exploit the large pool of persistent memory improved execution speeds in a variety of workloads by as much as 8,000x.

HPE will continue to work on The Machine and will focus on its use in exascale HPC. The architecture is said to be "incredibly scalable", so it should suit high-performance computing and data-intensive workloads, including big data analytics. So although you are unlikely to replace your PC with anything derived from this research in the near future, you may still benefit from it as the services you connect to become faster and more efficient.

It is worth noting that commercialisation of HPE's Memory-Driven Computing technology will rely on a number of upcoming, cutting-edge developments, such as true byte-addressable non-volatile memory (NVM), photonics/optics fabric-attached memory, ecosystem enablement, and new secure memory interconnect technology.