The US National Energy Research Scientific Computing Center (NERSC) has revealed further details about its upcoming Perlmutter supercomputer. Named in honour of Saul Perlmutter, a Berkeley Lab astrophysicist who shared the 2011 Nobel Prize in Physics, the supercomputer is based on the Cray Shasta platform and will be a heterogeneous system comprising both CPU-only and GPU-accelerated nodes. Once operational, Perlmutter is expected to deliver three to four times the performance of Cori, NERSC's current system.

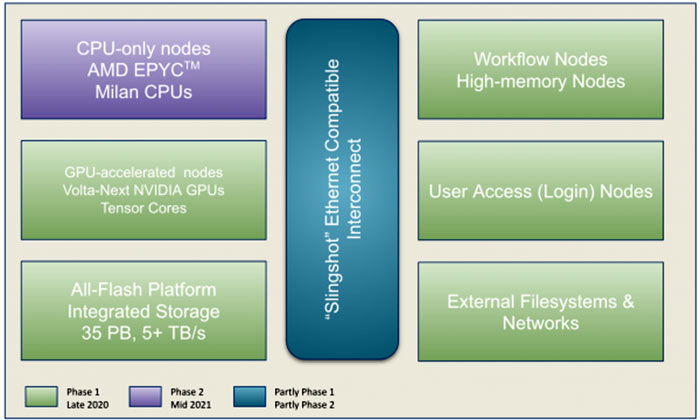

A finalised design contract, announced yesterday, provides up-to-date specifications for what to expect once the system is switched on and fully operational. Perlmutter will be brought online in two phases.

The first phase will consist of 12 GPU-accelerated cabinets and 35PB of all-flash storage. NERSC says that each GPU-accelerated node will have four next-generation ("Volta-Next") Nvidia GPUs and 256GB of memory, for a total of over 6,000 GPUs. Each Phase 1 node will also feature a single AMD Milan CPU. Phase 1 will be delivered in late 2020.

Phase 2 will expand the system by adding CPU-only nodes, each featuring two AMD Milan CPUs and 512GB of memory. In total, the system will then contain over 3,000 CPU-only nodes. This second phase is due to be ready by mid-2021.
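The phase figures above imply some rough system-wide totals. The short sketch below works them through, treating "over 6,000 GPUs" and "over 3,000 CPU-only nodes" as exact lower bounds and using decimal units (1TB = 1,000GB); the real counts will be somewhat higher, and the constant and function names are purely illustrative.

```python
# Back-of-the-envelope totals derived from the announced Perlmutter figures.
# Assumptions: "over 6,000 GPUs" and "over 3,000 CPU-only nodes" are treated
# as exact lower bounds, and memory is converted with 1TB = 1,000GB.
# Names here are hypothetical, chosen only for this illustration.

GPUS_PER_GPU_NODE = 4
TOTAL_GPUS = 6_000
GPU_NODE_MEMORY_GB = 256

CPU_ONLY_NODES = 3_000
CPUS_PER_CPU_NODE = 2
CPU_NODE_MEMORY_GB = 512


def phase1_totals():
    """Return (gpu_nodes, milan_cpus, memory_tb) for the Phase 1 GPU nodes."""
    gpu_nodes = TOTAL_GPUS // GPUS_PER_GPU_NODE  # four GPUs per node
    milan_cpus = gpu_nodes                       # one Milan CPU per node
    memory_tb = gpu_nodes * GPU_NODE_MEMORY_GB / 1000
    return gpu_nodes, milan_cpus, memory_tb


def phase2_totals():
    """Return (milan_cpus, memory_tb) for the Phase 2 CPU-only nodes."""
    milan_cpus = CPU_ONLY_NODES * CPUS_PER_CPU_NODE  # two CPUs per node
    memory_tb = CPU_ONLY_NODES * CPU_NODE_MEMORY_GB / 1000
    return milan_cpus, memory_tb


if __name__ == "__main__":
    nodes, cpus1, mem1 = phase1_totals()
    cpus2, mem2 = phase2_totals()
    print(f"Phase 1: {nodes} GPU nodes, {cpus1} Milan CPUs, {mem1:.0f}TB memory")
    print(f"Phase 2: {cpus2} Milan CPUs, {mem2:.0f}TB memory")
```

On these assumptions the GPU partition works out to at least 1,500 nodes (and 1,500 Milan CPUs), while Phase 2 adds at least 6,000 Milan CPUs and roughly 1.5PB of memory.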

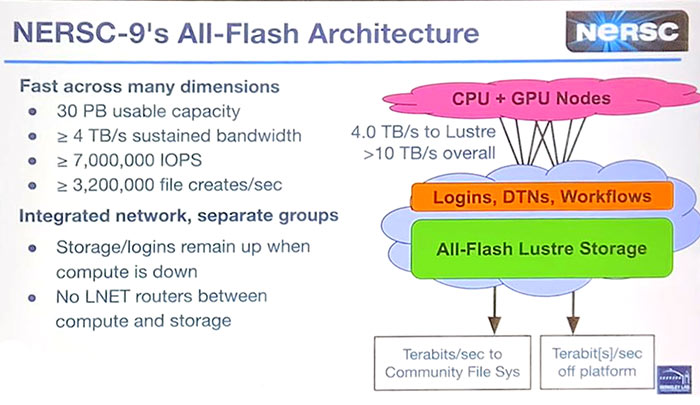

Another notable aspect of the Perlmutter design is that it will be NERSC's first supercomputer with an all-flash scratch filesystem (35PB capacity). This Cray-developed Lustre filesystem will move data at more than 5TB/s.
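To put the capacity and bandwidth figures side by side, the sketch below estimates how long it would take to fill the whole 35PB scratch filesystem at the quoted rate. It assumes decimal units (1PB = 1,000TB) and a perfectly sustained 5TB/s; because NERSC quotes "more than" 5TB/s, the result is a ceiling on the idealised fill time, and real workloads rarely sustain peak bandwidth. The function name is hypothetical.

```python
# Idealised time to fill the Perlmutter scratch filesystem.
# Assumptions: 1PB = 1,000TB, and the quoted 5TB/s is sustained for the
# entire transfer with no contention or metadata overhead.

CAPACITY_PB = 35
BANDWIDTH_TB_PER_S = 5


def fill_time_seconds(capacity_pb: float, bandwidth_tb_per_s: float) -> float:
    """Seconds needed to write capacity_pb petabytes at a constant rate."""
    capacity_tb = capacity_pb * 1000
    return capacity_tb / bandwidth_tb_per_s


if __name__ == "__main__":
    seconds = fill_time_seconds(CAPACITY_PB, BANDWIDTH_TB_PER_S)
    print(f"{seconds:.0f} s, or about {seconds / 3600:.1f} hours")
```

In other words, even at the headline rate, writing the entire filesystem would take on the order of two hours (7,000 seconds).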

Interestingly, a 300ft crane was on site late last year to install the cooling infrastructure that will support Perlmutter.

NERSC and Nvidia are collaborating on software tools for Perlmutter's GPUs, testing early versions on the Volta GPUs in the existing Cori system. The "innovative" tools are listed and outlined on the NERSC blog. NERSC Director Sudip Dosanjh summed up: "We look forward to Perlmutter delivering a highly capable user resource for workloads in simulation, learning and data analysis."