During the pandemic, people have relied on video conferencing technology more than ever before, and its use has spread well beyond remote office meetings and chats with relatives in far-flung places. My granddaughter, for example, has regularly taken part in school classes remotely, and even her Irish dancing classes moved to Zoom. I've also read about live-streamed weddings and other major life events, and watched a few entertainment shows where the large audience is virtual.

While there has been a lot of high-profile work on video compression codecs (some are even advertised on stickers on AV equipment), audio codecs seem somehow less glamorous and develop more slowly. The Google AI Blog notes that video codecs are now so advanced and efficient that the video stream can sometimes have a lower bit rate than the accompanying audio. That doesn't seem right, and Google is seeking to address the imbalance by developing a leaner, better voice codec that can aid communication over even the slowest networks.

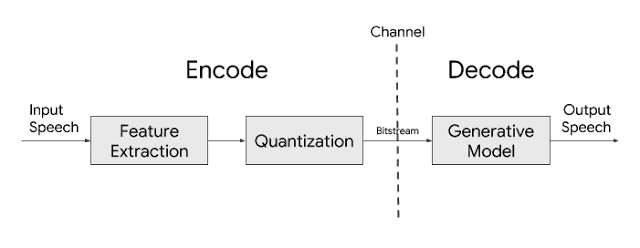

Lyra combines techniques from traditional codec design with machine learning (ML). Google trained Lyra on thousands of hours of speech to deliver the clearest possible voice communication at extremely low bit rates. It says Lyra improves on traditional parametric codecs such as MELP largely thanks to new generative models that are trained on human speech and optimized to sound natural rather than robotic.

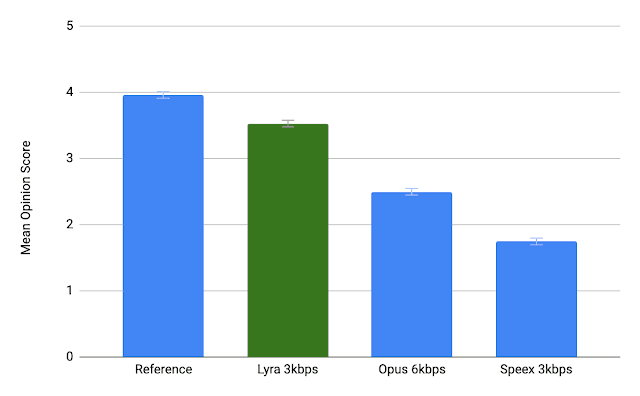

To hear how Lyra stacks up against its closest competitor, Opus (most widely used at 32kbps), compare the samples in the videos below. More audio-only samples are available on the Google AI Blog, including a set recorded in an environment with heavy background noise.

Lyra isn't just for English speech: the thousands of hours of training data covered 70 languages. Google has also tested and verified the audio quality with both professional and crowdsourced listeners.

If you are wondering when applications might start using codecs like Lyra, the answer is encouraging: Google Duo is already rolling out Lyra for calls on very low-bandwidth connections. Google suggests that, paired with a video codec like AV1, Lyra makes video chats possible on connections as slow as 56kbps. Meanwhile, Google continues to optimize Lyra with further training and is investigating acceleration on GPUs and TPUs. At the time of writing Lyra isn't suited to much more than regular speech, but in the future it might be trained to handle music and other general audio.
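To put that 56kbps figure in perspective, here is a rough back-of-the-envelope bandwidth budget in Python. It is only an illustrative sketch: the 32kbps Opus figure comes from the comparison above, while the 3kbps Lyra bitrate is an assumption taken from Google's launch announcement, and the function name `video_budget_kbps` and its optional `overhead_fraction` parameter are hypothetical. Real calls also lose capacity to packet headers and network jitter, which this simple subtraction ignores by default.

```python
# Hypothetical bandwidth-budget sketch: how much of a slow link is left for
# video once the speech codec has taken its share. Figures are illustrative.

LINK_KBPS = 56  # the slow-connection figure Google cites

# Assumed speech codec bitrates in kbps. Opus at 32 kbps matches the article;
# Lyra at 3 kbps is an assumption based on Google's announcement.
AUDIO_KBPS = {
    "Opus": 32,
    "Lyra": 3,
}


def video_budget_kbps(link_kbps: float, audio_kbps: float,
                      overhead_fraction: float = 0.0) -> float:
    """Return the bitrate left for video after audio and an optional overhead share.

    overhead_fraction is a hypothetical knob for capacity lost to packet
    headers and retransmissions (0.1 means 10% of the link is unusable).
    """
    usable = link_kbps * (1.0 - overhead_fraction)
    # Never report a negative budget: the audio alone may exceed the link.
    return max(usable - audio_kbps, 0.0)


if __name__ == "__main__":
    for codec, kbps in AUDIO_KBPS.items():
        left = video_budget_kbps(LINK_KBPS, kbps)
        share = kbps / LINK_KBPS * 100
        print(f"{codec}: audio {kbps} kbps ({share:.1f}% of link), "
              f"video budget {left:.0f} kbps")
```

Under these assumptions, Opus at 32kbps would consume more than half of a 56kbps link, while a 3kbps speech codec leaves almost all of it for a video codec like AV1, which is why a very low-bitrate voice codec matters so much on slow connections.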