Introduction and Architecture

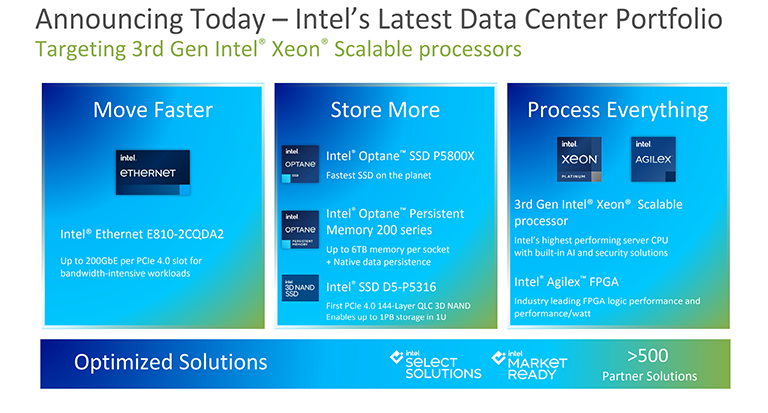

Intel is today announcing a significant update to its datacentre portfolio. Built on the pillars of moving data faster, storing more and processing everything, there are new SSDs, Optane Memory, Ethernet, FPGAs and, of course, Xeon CPUs.

The very fact that the focus is not wholly on a new slew of 3rd Generation Xeons is telling in itself. Intel sees the portfolio's hardware launches as synergistic (more than the sum of their parts), and Xeon CPUs have only an equal standing in this line of thinking.

Yet Intel launching a host of Xeon processors armed with a raft of improvements is a big deal in this segment, particularly as the release comes hot on the heels of AMD launching its fastest-ever 3rd Gen Epyc processors. We can finally compare one company's best against the other's.

It's interesting that Intel rarely talks about Xeons in isolation; it's all about the platform. That makes some sense, too, as providers require solutions optimised for eclectic needs, but whilst various other accelerators wax and wane in popularity, CPUs remain the workhorse of the server infrastructure.

Intel says it has shipped over 50m Xeons that cover markets such as Cloud, 5G, Edge, Enterprise and HPC. There is no one-size-fits-all Xeon for this very reason, with Intel offering models specifically for media processing, networking and virtualisation environments. There are models for single-, dual-, four- or eight-socket boards, spanning eight to 40 cores and multiple architectures, and priced between $555 and $13,012. It's resolutely complicated, but so are the disparate target environments.

Underpinning these 57 Xeon processors is a common architecture and platform, so let's delve progressively deeper into the technical aspects of the latest 3rd Generation Xeons.

Intel has released Xeons for more than 20 years and typically uses a common architecture across server and desktop (Core) products. In more recent times, and simplifying a lot, 1st Generation Xeon Scalable processors are based on the Skylake-SP architecture from 2017, while 2nd Generation Xeon Scalable uses an optimised Skylake-derived microarchitecture known as Cascade Lake (2019) for 1-2S systems.

Making matters more convoluted, Intel actually launched the first tranche of 3rd Gen Xeon Scalable processors last year. Codenamed Cooper Lake, they were built using the guts of Cascade Lake, albeit with some minor improvements, and designed for the niche market servicing 4- and 8-socket systems.

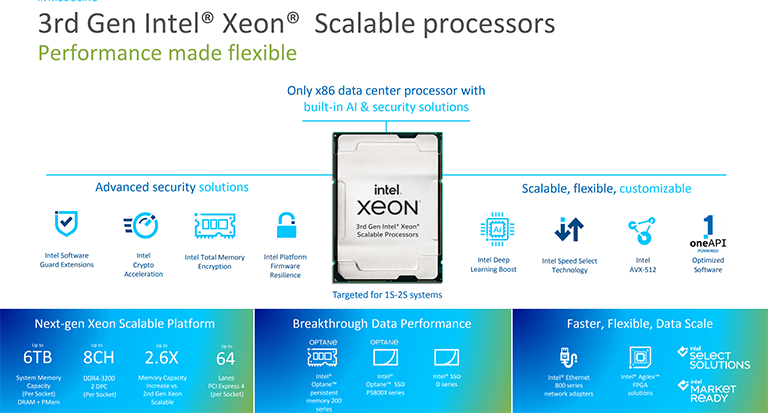

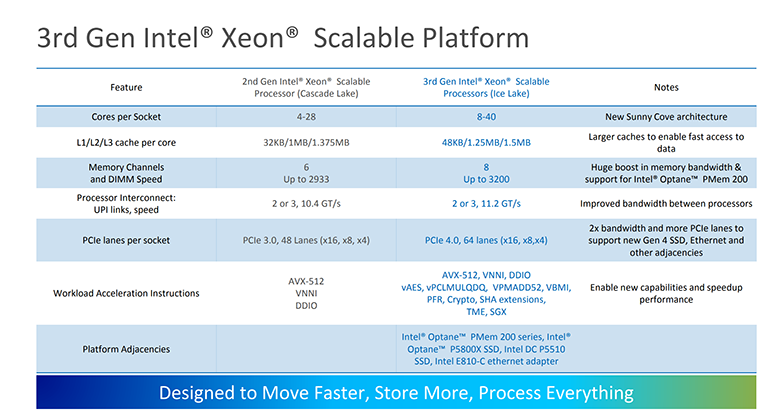

Today's 3rd Generation Intel Xeon Scalable, however, is not an update; it's an architecture revamp. Designed for 1S-2S systems and using the same physical LGA 4189 socket as Cooper Lake, the CPU cores are based on 10nm Ice Lake technology, whilst the platform expands the feature-set by offering eight DDR4-3200 memory channels (matching AMD), faster processor-to-processor interconnects, PCIe 4.0, and a number of new instructions benefitting AI processing, security and cryptography.
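To put the eight-channel figure in context, theoretical peak memory bandwidth per socket is simply channels × transfer rate × bus width. The sketch below assumes a standard 64-bit (8-byte) DDR4 data bus per channel and ignores ECC, refresh and controller efficiency, so real-world throughput will be lower; the function name is purely illustrative.

```python
def peak_bandwidth_gbs(channels: int, mega_transfers: int, bus_bytes: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (decimal), ignoring all overheads.

    Assumes a 64-bit (8-byte) data bus per DDR4 channel; MT/s x bytes = MB/s.
    """
    return channels * mega_transfers * bus_bytes / 1000


# Eight channels of DDR4-3200, as on 3rd Gen Xeon Scalable (Ice Lake-SP)
ice_lake_sp = peak_bandwidth_gbs(channels=8, mega_transfers=3200)
print(f"{ice_lake_sp:.1f} GB/s per socket")
```

The same function can be reused for any channel count or memory speed, which makes it easy to see how much of a platform's headline bandwidth comes from extra channels rather than faster DIMMs.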

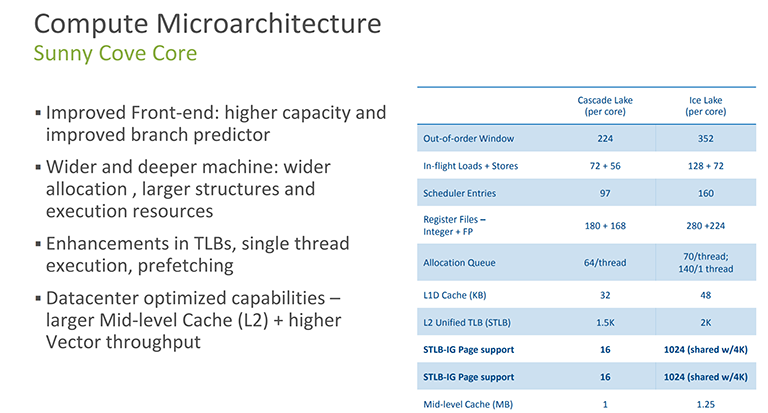

Let's go one stage deeper. Ice Lake may well be familiar to readers as the microarchitecture has already debuted on mobile and desktop chips. The Sunny Cove cores are found in last week's desktop 11th Gen Core processors, so one can expect a 20 per cent IPC improvement over the base Skylake core used in Cascade Lake.
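A first-order way to read a 20 per cent IPC gain is to model per-core throughput as IPC × clock speed. The sketch below is a simplification under that assumption only: it ignores memory latency, turbo behaviour and AVX frequency offsets, and the 3.0GHz baseline is a hypothetical figure chosen for illustration.

```python
def relative_perf(ipc_gain: float, freq_ratio: float = 1.0) -> float:
    """Per-core throughput relative to a baseline core, modelled as IPC x frequency."""
    return (1 + ipc_gain) * freq_ratio


def iso_perf_freq(base_freq_ghz: float, ipc_gain: float) -> float:
    """Clock at which the newer core matches the baseline core's per-core throughput."""
    return base_freq_ghz / (1 + ipc_gain)


# 20 per cent IPC uplift at the same clock speed
print(relative_perf(0.20))
# Hypothetical 3.0GHz Skylake-class baseline: the clock a Sunny Cove core would need
print(round(iso_perf_freq(3.0, 0.20), 2))
```

Under this model, a Sunny Cove core running at roughly 2.5GHz would match a Skylake-class core at 3.0GHz, which is why IPC gains matter so much for lower-clocked, high-core-count server parts.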

That said, it is important to understand that Intel is not using its best microarchitecture here. The Tiger Lake platform, as seen on mobile, is superior as it uses Willow Cove cores. They have still-larger caches, higher frequencies and faster memory support. Intel will skip these cores and jump straight on to the successor Golden Cove cores for the next iteration of Xeon coming later this year. Why? Timing.

It's no secret that Intel's manufacturing woes have permeated through every segment of the hardware business. Ice Lake-based Xeons were supposed to be available at least a year ago, to better combat the Epyc threat and provide customers with an earlier step-change in performance. This April 2021 release can be construed as potentially problematic, as it may land reasonably close to the next-generation Sapphire Rapids-based Xeons built on a new socket. How do you convince large-volume customers to opt for an infrastructure that has no obvious upgrade path? To be fair, microarchitecture decisions are made years in advance, typically at least three, so Intel shuffling the pack stems more from manufacturing necessity than from far-out design thinking.

Back on track. This Sunny Cove core is not a perfect facsimile of the desktop version. Intel knows that datacentre workloads are typically different insofar as they have larger code footprints and tend to rely more on front-end efficiency than desktop workloads do. To help matters along, the Xeon implementation's L2 cache is 1.25MB per core, rather than the 512KB found on Rocket Lake desktop chips, and there are myriad minor tweaks that don't readily show up on a spec sheet but make a cumulative difference in the right environment. In other words, an eight-core 11th Gen Core chip is not (exactly) the same as an eight-core Xeon. We'd go so far as to say it fits between regular Sunny Cove and Willow Cove in the Intel pecking order.
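The L2 difference compounds quickly across a whole chip. The sketch below simply multiplies per-core L2 by core count for the top 40-core Xeon and an eight-core desktop part, using the per-core figures quoted above and treating 1MB as 1,024KB; it says nothing about L3, which is a separate shared pool.

```python
def total_l2_mb(cores: int, l2_per_core_kb: int) -> float:
    """Aggregate private L2 capacity across all cores, in MB (1MB = 1024KB)."""
    return cores * l2_per_core_kb / 1024


xeon = total_l2_mb(cores=40, l2_per_core_kb=1280)   # 1.25MB per core
desktop = total_l2_mb(cores=8, l2_per_core_kb=512)  # Rocket Lake desktop
per_core_ratio = 1280 / 512                         # how much more L2 each Xeon core gets

print(xeon, desktop, per_core_ratio)
```

Each Xeon core gets 2.5x the desktop core's L2, which suits the larger code footprints of datacentre workloads.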

Another key distinction is in process. Whilst Intel backported these cores to a 14nm process on desktop, per-socket compute density is far more important on servers. This is precisely why 3rd Gen Xeon Scalable uses the latest 10nm process, and it enables Intel to push per-socket capabilities to 40C80T, up from 28C56T on the last generation. Rival AMD, of course, has been running 64C128T Epycs for two generations now, yet it's hard to ignore Intel's 43 per cent leap this time around. On paper, these chips are more of a play for customers who need bags of compute performance above all else.
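The 43 per cent figure follows directly from the core counts. The short sketch below computes it, along with the size of the core-count lead AMD's 64-core Epyc still holds over the new 40-core Xeon; both use only the numbers quoted above.

```python
def pct_increase(new: int, old: int) -> float:
    """Percentage increase from old to new."""
    return (new - old) / old * 100


gen_on_gen = round(pct_increase(40, 28))  # 40-core Ice Lake-SP vs 28-core predecessor
epyc_lead = round(pct_increase(64, 40))   # 64-core Epyc vs 40-core Ice Lake-SP
print(gen_on_gen, epyc_lead)
```

Thread counts scale identically (80 vs 56), so the percentage is the same whether cores or threads are compared.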

More cores, memory channels and higher IPC are obvious drivers of straight-up compute performance. Intel, however, is keen to be seen as the prime adopter of certain technologies that, it says, customers really want. You may recall that we lamented the use of die-inflating AVX-512 on the desktop iteration. On server, it makes far more sense, as applications can be tuned for it, and it is instrumental in propelling cryptography (read security) and AI inferencing performance to a higher plane. AVX-512 is a catch-all term that also applied to the previous generation, but with many more subsets catering to security and cryptography here, Ice Lake has more comprehensive support than Cascade Lake or Cooper Lake.
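Because "AVX-512" covers many separate subsets, software has to check for each extension it uses. A minimal sketch, assuming a Linux host, is to parse the `flags` line of `/proc/cpuinfo`. The flag list below is an illustrative selection of AI- and crypto-related extensions using Linux's flag names, not an exhaustive inventory of Ice Lake-SP's instruction set, and the helper names are hypothetical.

```python
# Illustrative selection of Linux /proc/cpuinfo flag names; not exhaustive.
CRYPTO_AI_FLAGS = (
    "avx512f",      # AVX-512 foundation
    "avx512_vnni",  # vector neural-network instructions (AI inference)
    "avx512ifma",   # 52-bit integer fused multiply-add (big-number crypto)
    "vaes",         # vectorised AES rounds
    "vpclmulqdq",   # vectorised carry-less multiply (e.g. AES-GCM)
    "gfni",         # Galois-field arithmetic instructions
    "sha_ni",       # SHA-1/SHA-256 extensions
)


def supported_flags(cpuinfo_text: str, wanted=CRYPTO_AI_FLAGS) -> dict:
    """Map each wanted flag to whether the first 'flags' line of cpuinfo lists it."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            present = set(line.split(":", 1)[1].split())
            return {flag: flag in present for flag in wanted}
    # No x86 'flags' line found (e.g. non-x86 or non-Linux): report nothing supported
    return {flag: False for flag in wanted}


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print(supported_flags(f.read()))
    except OSError:
        print("/proc/cpuinfo not readable (not Linux?)")
```

Production code would normally query CPUID directly or rely on a library's runtime dispatch; parsing `/proc/cpuinfo` simply keeps the example short and dependency-free.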

The additional ISA improvement argument is an interesting one. AMD, which doesn't support AVX-512 this generation, will counter that any customer truly reliant on AI processing or cryptography-side security will invest in an FPGA or add-in board dedicated to that task, enabling processor offload and arguably better performance. Intel, on the other hand, says having these specific acceleration instructions in its latest Xeons allows them to be more than an old-school compute pony, and gives companies another way to improve TCO by leveraging otherwise underutilised capacity. These features, Intel says, were chosen through analysis of emerging workloads and customer feedback.

Software Guard Extensions (SGX), presently available on Xeon E processors, is coming to this family, too. It uses hardware-based memory encryption to isolate specific application code and data in memory, much in the vein of AMD's Secure Encrypted Virtualisation (SEV), though if past performance is anything to go by, the performance overhead on Intel is larger than on AMD. The SGX-protected portion of memory is known as an enclave, and its size differs between models.
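Whether SGX is actually usable depends on the CPU, firmware and operating system together. A minimal sketch, assuming a Linux kernel with in-tree SGX support (5.11 or later), which advertises `sgx` and, for Flexible Launch Control, `sgx_lc` in `/proc/cpuinfo`: the helper name is hypothetical, and it only reports whether the running kernel advertises SGX, not how much enclave memory a given model provides.

```python
def sgx_status(cpuinfo_text: str) -> dict:
    """Report SGX-related flags from the first 'flags' line of Linux /proc/cpuinfo."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            flags = set(line.split(":", 1)[1].split())
            # 'sgx' = SGX available; 'sgx_lc' = Flexible Launch Control available
            return {"sgx": "sgx" in flags, "sgx_lc": "sgx_lc" in flags}
    return {"sgx": False, "sgx_lc": False}


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print(sgx_status(f.read()))
    except OSError:
        print("/proc/cpuinfo not readable (not Linux?)")
```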