Nvidia has published a blog post about developments in AI and deep learning discussed at the Conference and Workshop on Neural Information Processing Systems 2017, or NIPS for short. The graphics chip maker said that it had two papers accepted to the conference this year, and contributed to two others. Nvidia has over 120 people working on associated AI tech, and plenty of resources, so it's not surprising it can field such a strong showing.

At this year's NIPS, a major trend is "the rise of unsupervised learning and generative modelling". It is this technology which is behind our Nvidia headline today. A paper entitled 'Unsupervised Image-to-Image Translation Networks', led by Nvidia researcher Ming-Yu Liu, shows some startling AI-generated scenes which exhibit a kind of 'imagination'.

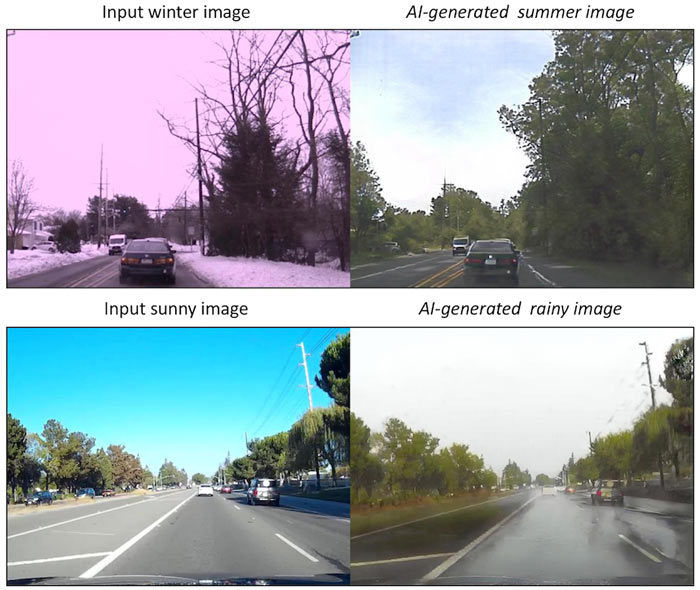

Liu leveraged AI and generative modelling tech to transform scenes from winter to summer, from day to night, and from sunny to rainy days. This isn't just a few stills we are talking about: Liu used the technology to transform video inputs.

Explaining how the scene transformations were done, Nvidia said that the system uses "a pair of generative adversarial networks (GANs) with a shared latent space assumption to obtain these stunning results". Furthermore, the scene transforms are done with unsupervised learning, which means less time and manpower is needed for tasks such as capturing and labelling data and variables for the AI deep learning systems.
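To make the "shared latent space" idea a little more concrete, here is a toy sketch of the data flow only. It is not Nvidia's implementation and involves no training or adversarial loss. It assumes each domain (day, night) has its own encoder and generator that meet in one common latent code, so translating means encoding with one domain's encoder and decoding with the other's generator. All function names and the fixed arithmetic standing in for neural networks are hypothetical, and "images" are just short lists of brightness values.

```python
from typing import Callable, List

Vector = List[float]

# Hypothetical stand-ins for trained networks. In a real system each of these
# would be a learned neural network; here they are fixed affine maps so the
# example stays runnable and easy to follow.

def encode_day(image: Vector) -> Vector:
    """Map a 'day' image into the shared latent space."""
    return [pixel - 0.5 for pixel in image]

def generate_day(latent: Vector) -> Vector:
    """Map a shared latent code back to a 'day' image."""
    return [z + 0.5 for z in latent]

def encode_night(image: Vector) -> Vector:
    """Map a 'night' image into the shared latent space."""
    return [pixel / 0.3 for pixel in image]

def generate_night(latent: Vector) -> Vector:
    """Map a shared latent code back to a 'night' image."""
    return [0.3 * z for z in latent]

def translate(
    image: Vector,
    encoder: Callable[[Vector], Vector],
    generator: Callable[[Vector], Vector],
) -> Vector:
    """Encode with the source domain's encoder, decode with the target's generator."""
    return generator(encoder(image))

# A bright toy 'day' image translated to 'night', then back again.
day_image = [0.9, 0.7, 0.6, 0.8]
night_image = translate(day_image, encode_day, generate_night)
round_trip = translate(night_image, encode_night, generate_day)
```

Because both domains agree on what a latent code means, the translated image comes out darker, and sending it back through the night encoder and day generator recovers the original values in this toy setup. In the real system that agreement has to be learned from unpaired images rather than written by hand.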

The above tech isn't just useful for transforming scenes quickly and realistically "far ahead of anything seen before." Nvidia reckons the technology will be useful for self-driving cars, for example. Training data can be captured once and then simulated across a variety of virtual conditions (sunny, cloudy, snowy, rainy, night time, etc.) to cover all the visual bases that automobiles will encounter in the wild.

You can read more about this new technology on the Nvidia Blog, and on the dedicated research page.