AMD's biggest GPU-making rivals, namely Nvidia and Intel, are known to be working on chiplet-based GPU designs. Intel in particular has been very up front about raising awareness of what it calls Multi-Tile GPUs; for example, check out this Xe family photo from June last year. AMD has effectively popularised chiplet CPU designs with its Zen series, and one might wonder why the firm hasn't yet taken the same approach to GPUs. Some answers may be found in the recently unearthed patent 'GPU Chiplets using High Bandwidth Crosslink', patent number 'US 2020/0409859 A1' (PDF link).

Primary chiplet

The patent documentation indicates that AMD's exploration of GPU chiplet designs has been slowed by two major hurdles it foresaw: complexity and expense. Because of these concerns it has kept its GPU designs monolithic so far, though as GPUs get bigger this creates its own set of drawbacks. Now AMD's researchers and engineers are starting to see a path through the multi-chip GPU brambles, hence this new patent.

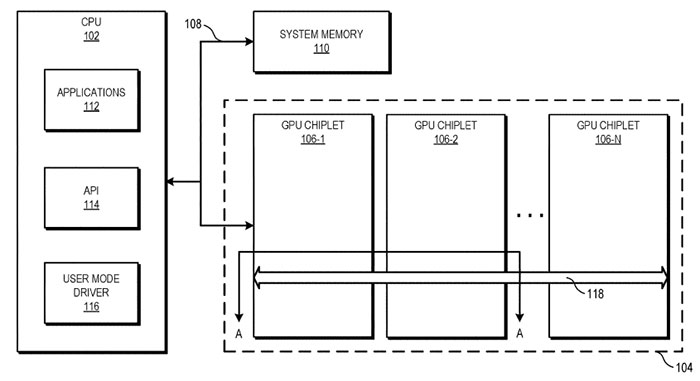

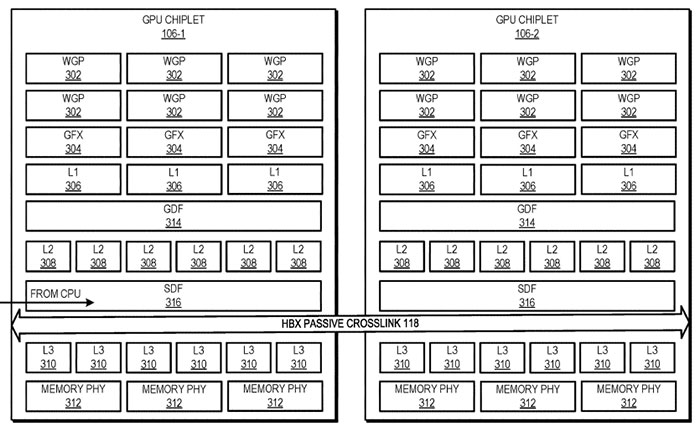

Multiple chiplets with HBX shown as connecting component 118 above

The patent describes a 'primary chiplet' which works alongside a driver layer to make the CPU believe it is still communicating with a monolithic GPU. This subterfuge should smooth any architectural transition process. The primary chiplet handles some other tasks too, such as intelligently distributing the computing load.
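To make the 'one GPU from the CPU's point of view' idea more concrete, here is a toy Python sketch. It is not AMD's implementation: the `MultiChipletGPU` and `Chiplet` classes, the `submit()` call and the least-loaded placement policy are all hypothetical, chosen only to illustrate a single entry point that quietly spreads work across several chiplets. The patent, as summarised here, does not say which distribution policy the primary chiplet would use.

```python
from dataclasses import dataclass, field


@dataclass
class Chiplet:
    """One GPU chiplet; `queue` holds (work_item, cost) pairs assigned to it."""
    name: str
    queue: list = field(default_factory=list)

    def load(self) -> int:
        # Total cost of everything currently queued on this chiplet.
        return sum(cost for _, cost in self.queue)


class MultiChipletGPU:
    """Hypothetical facade: the host sees one device with one submit() call."""

    def __init__(self, num_chiplets: int):
        self.chiplets = [Chiplet(f"chiplet{i}") for i in range(num_chiplets)]
        # Chiplet 0 plays the role of the 'primary chiplet' that receives
        # all host requests (an assumption made for this sketch).
        self.primary = self.chiplets[0]

    def submit(self, work_item: str, cost: int) -> str:
        # Assumed policy: send work to the least-loaded chiplet.
        # min() returns the first minimum, so ties go to the lowest index.
        target = min(self.chiplets, key=Chiplet.load)
        target.queue.append((work_item, cost))
        return target.name


gpu = MultiChipletGPU(num_chiplets=3)
placements = [gpu.submit(f"draw{i}", cost) for i, cost in enumerate([4, 1, 1, 2, 3])]
print(placements)
```

The caller only ever talks to `gpu.submit()`, which is the point of the subterfuge: nothing on the host side needs to know how many chiplets sit behind the interface.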

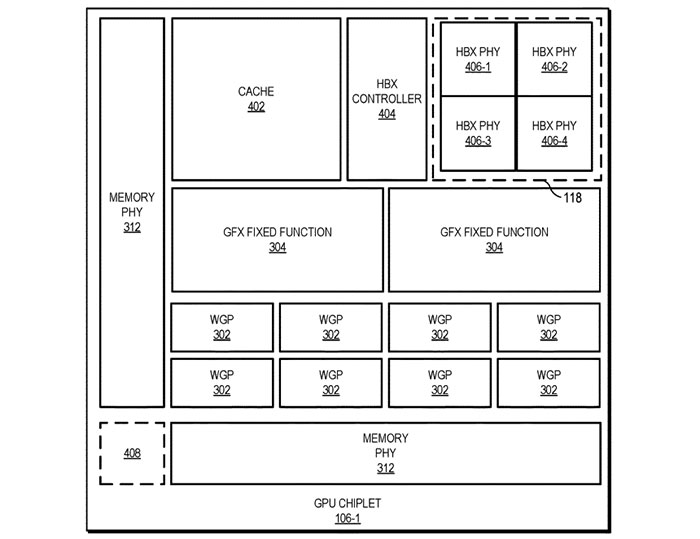

HBX position in more detail

An important part of the patent appears to be a passive interface called a High Bandwidth Crosslink (HBX). According to the patent, this connects the L3 caches of all the chiplets so that each chiplet can access the cache data and VRAM of all the other chiplets. Moreover, the diagrams indicate that a chiplet contains both L2 and L3 caches, similar to RDNA 2. Whether this means that the chiplet design is destined for a mainstream/gaming GPU remains to be seen. The patent shows dual chiplets connected along a line, as well as greater multiples in a star shape.
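As a rough illustration of what 'L3 caches linked by HBX' could mean in practice, the following Python sketch models each chiplet with its own L3 slice and VRAM, and routes every read to the chiplet that owns the address. The names (`ChipletMemory`, `CrosslinkedGPU`, `home_of`) and the modulo address interleaving are assumptions made for this example, not details taken from the patent.

```python
class ChipletMemory:
    """Per-chiplet memory: an L3 cache slice in front of locally attached VRAM."""

    def __init__(self, chiplet_id: int):
        self.chiplet_id = chiplet_id
        self.l3 = {}    # address -> cached value
        self.vram = {}  # address -> stored value


class CrosslinkedGPU:
    """Toy model of chiplets whose L3 slices are reachable over a crosslink."""

    def __init__(self, num_chiplets: int):
        self.chiplets = [ChipletMemory(i) for i in range(num_chiplets)]

    def home_of(self, address: int) -> ChipletMemory:
        # Assumed mapping: addresses are interleaved across chiplets by modulo.
        return self.chiplets[address % len(self.chiplets)]

    def write(self, address: int, value) -> None:
        home = self.home_of(address)
        home.vram[address] = value
        home.l3.pop(address, None)  # drop any stale cached copy

    def read(self, requester_id: int, address: int):
        home = self.home_of(address)
        # A request for another chiplet's address travels over the crosslink.
        route = "local" if home.chiplet_id == requester_id else "hbx"
        if address in home.l3:
            return home.l3[address], route, "l3-hit"
        value = home.vram[address]  # miss: fetch from the home chiplet's VRAM
        home.l3[address] = value    # fill the home L3 slice for later readers
        return value, route, "l3-miss"


gpu = CrosslinkedGPU(num_chiplets=2)
gpu.write(5, "texel")    # 5 % 2 == 1, so chiplet 1 owns this address
first = gpu.read(0, 5)   # chiplet 0 reaches chiplet 1's L3 over the crosslink
second = gpu.read(1, 5)  # chiplet 1 now hits in its own L3 slice
print(first, second)
```

In this sketch a given address is only ever cached in one L3 slice, so every chiplet sees the same data without any extra bookkeeping. Whether the real design keeps its caches consistent this way is not something the summary above confirms.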