While PC gamers and enthusiasts are on tenterhooks waiting for the first RDNA 2 graphics card releases from AMD, the firm is steadily ploughing a furrow in HPC and is showing off its latest wares for this market at the SC20 virtual tradeshow. Yesterday it took the wraps off the AMD Instinct MI100 Accelerator with the claim that it is "the world’s fastest HPC GPU accelerator for scientific workloads".

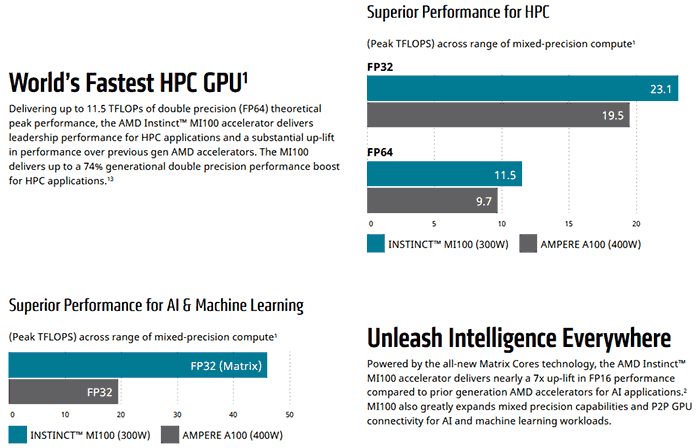

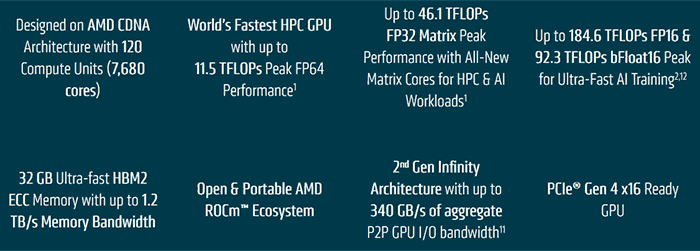

AMD says the Instinct MI100 is its first accelerator to use the new CDNA architecture, which was built from the ground up to provide "a giant leap in compute and interconnect performance". According to AMD's in-house testing, the MI100 offers nearly 3.5x the performance of previous AMD HPC accelerators for HPC workloads (FP32 matrix) and nearly 7x the throughput for AI workloads (FP16).

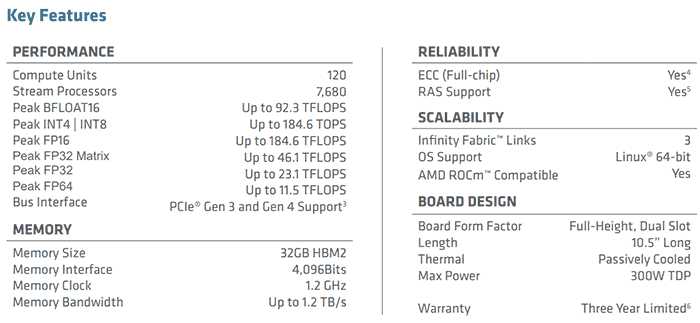

Key technologies behind the MI100 GPU include an all-new Matrix Core Technology with superior performance for machine learning, AMD Infinity Fabric Link Technology for 64GB/s of CPU-to-GPU bandwidth and up to 276GB/s of peer-to-peer (P2P) bandwidth, PCIe Gen 4.0 support, and ultra-fast HBM2 memory. Together, these deliver up to 11.5 TFLOPS of peak FP64 performance (or 23.1 TFLOPS of peak FP32 performance).

AMD highlights the virtues of combining its award-winning 2nd Gen Epyc processors with MI100 accelerators to deliver true heterogeneous compute capabilities for HPC and AI. It says that pairing these two products with its open and portable ROCm programming ecosystem makes it possible to tackle the biggest challenges in HPC and AI.

The renowned Oak Ridge Leadership Computing Facility (OLCF), which was given early access to the Instinct MI100, has already been testing the cards. Bronson Messer, OLCF's director of science, says the MI100 delivered "significant performance boosts, up to 2-3x compared to other GPUs," on its test platforms. On top of that, the MI100's energy-efficiency savings must also be factored in.