Back at CES in January we saw Nvidia launch its first 'in-car supercomputer', the DRIVE PX 2. Partners such as Audi, BMW, Ford, Mercedes-Benz and Volvo were already lined up to use the roadworthy AI system. At the same time we learnt about the supporting Nvidia DriveWorks software and DRIVENET deep neural net technologies.

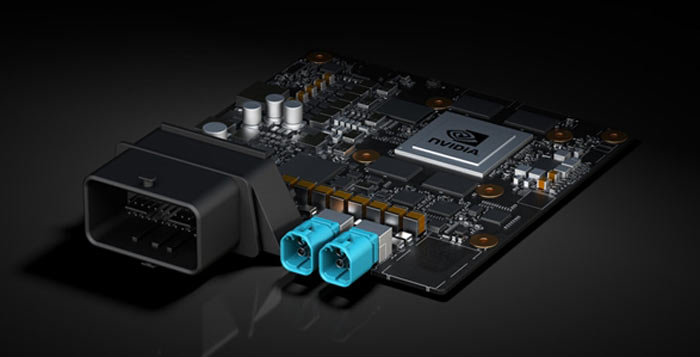

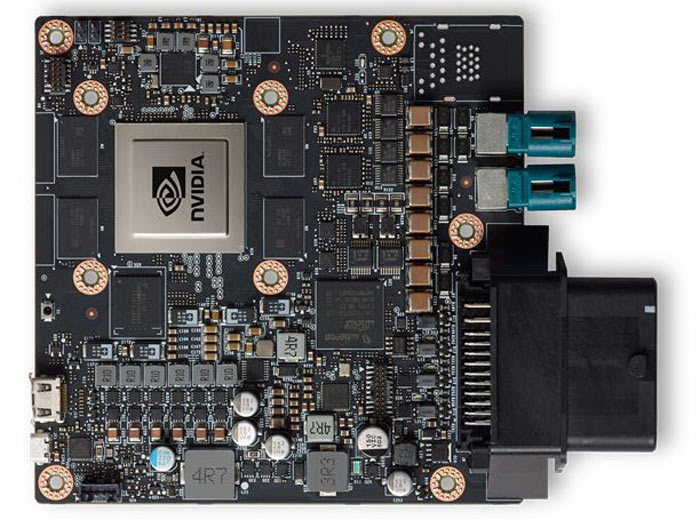

Now Nvidia has unveiled a new version of the DRIVE PX 2 for automakers, pictured above. The new in-car computer is palm-sized and energy efficient, yet it offers enough processing power for automated and autonomous vehicles to handle driving and mapping. Consuming just 10 watts, the new single-processor DRIVE PX 2 is targeted at AutoCruise functions, "which include highway automated driving and HD mapping".

Nvidia revealed that the new DRIVE PX 2 will be the AI engine of Baidu's self-driving car. Rob Csongor, vice president and general manager of Automotive at Nvidia, said that "Bringing an AI computer to the car in a small, efficient form factor is the goal of many automakers". He added that the DRIVE PX 2 "solves this challenge for our OEM and tier 1 partners, and complements our data center solution for mapping and training".

The introduction of the new DRIVE PX 2 demonstrates the platform's scalability. It is now available in configurations ranging from a single mobile processor (as above), to a combination of two mobile processors and two discrete GPUs, up to multiple DRIVE PX 2 units. The single-processor version is suitable for highway AutoCruise, and partners can scale up to enable greater automation, such as "AutoChauffeur for point to point travel," all the way to a fully autonomous vehicle.

Nvidia says that although the single-processor DRIVE PX 2 relies on just one Parker system-on-chip (SoC), it can process inputs from multiple cameras, plus lidar, radar and ultrasonic sensors. This new DRIVE PX 2 option will become available to partners from Q4 this year.

Tesla P4, P40 Accelerators Deliver 45x Faster AI

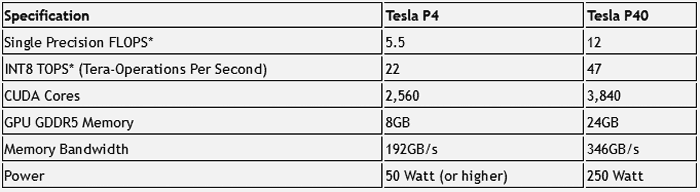

In other news out of GTC China today, Nvidia has unveiled its new Tesla P4 and P40 GPU accelerators, along with new software, to accelerate AI. Nvidia explains that "the Tesla P4 and P40 are specifically designed for inferencing, which uses trained deep neural networks to recognize speech, images or text in response to queries from users and devices".

The new Pascal-based Tesla P4 and P40 accelerators feature special inferencing instructions that accelerate such operations by up to 45x compared with CPUs, and by up to 4x compared with GPU solutions that are just a year old.

The Tesla P4, available from November, is a high-efficiency part that can consume as little as 50W, "helping make it 40x more energy efficient than CPUs for inferencing in production workloads". Meanwhile the Tesla P40, available from October, is built for maximum throughput in deep learning workloads, delivering up to 47 tera-operations per second (TOPS).