A peek at performance, thoughts

Bear in mind that using 24 drives powered by 16-thread CPUs makes little sense when a single worker is providing minimal load to the server. The point here is that a 2U box like this can serve many more requests than a home PC run with a single drive. It is outside the remit of this technical evaluation to examine heavily-loaded performance, particularly as we have little else to compare it to, so let's focus on straight-line speed.

If you're looking for silly-high numbers from the off, ATTO reports 4GB/s writes when using a small-sized file. Tweaking the settings enables reads and writes to exceed 3GB/s on larger transfers. SiSoft SANDRA, similarly, shows stupendous, almost DRAM-like performance potential.

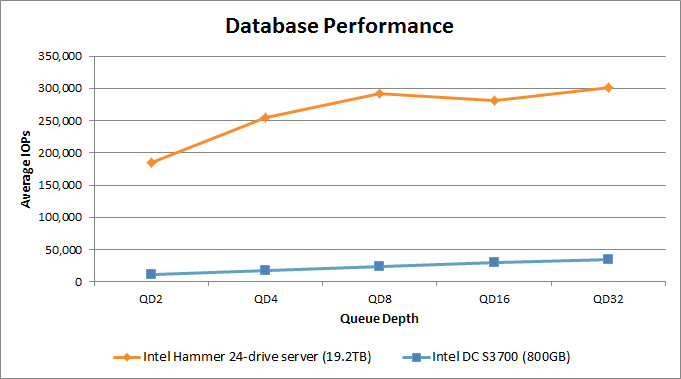

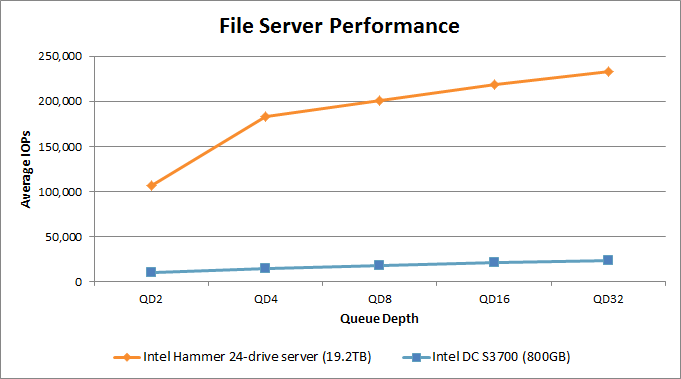

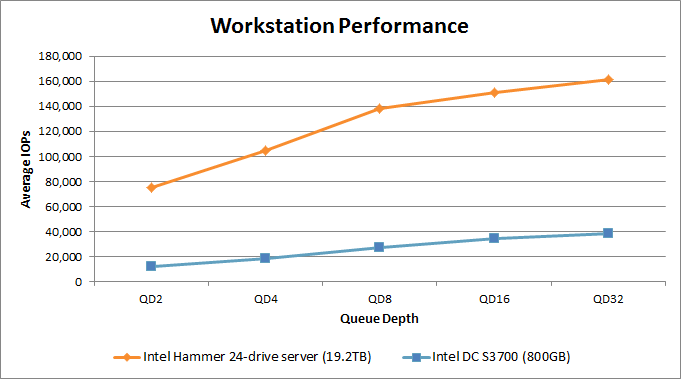

We can also take a look at industry-standard Iometer performance based on preset parameters for database, file server and workstation profiles.

Simulating a possible real-world scenario, our database workload consists of random 8KB transfers in a 67 per cent read/33 per cent write distribution. A single DC S3700's performance is dwarfed by the 24-drive server. Impressive as 300,000 IOPS is, we had expected the figure to be higher. Perhaps Windows Server 2008 R2 and the LSI/Intel controllers aren't as good bedfellows as they could be.
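To make the database profile a little more concrete, here is a minimal Python sketch of the access pattern described above: random, block-aligned 8KB requests with a 67/33 read/write split. It is not Iometer's own implementation; the function names, the seed and the 1GB test span are hypothetical, and we assume 8KB means 8,192 bytes. A small helper also converts an IOPS figure into raw bandwidth.

```python
import random

BLOCK_SIZE = 8 * 1024   # 8KB transfers (assumed to mean 8,192 bytes)
READ_FRACTION = 0.67    # 67 per cent reads, 33 per cent writes


def make_requests(count, span_bytes, seed=0):
    """Return (op, offset) pairs for a random 8KB read/write mix.

    Hypothetical helper for illustration only; it builds the request
    list and does not touch any storage device.
    """
    rng = random.Random(seed)
    blocks = span_bytes // BLOCK_SIZE
    requests = []
    for _ in range(count):
        op = "read" if rng.random() < READ_FRACTION else "write"
        offset = rng.randrange(blocks) * BLOCK_SIZE  # block-aligned offset
        requests.append((op, offset))
    return requests


def iops_to_bytes_per_second(iops, block_size=BLOCK_SIZE):
    """Convert an IOPS figure into bytes per second at a fixed block size."""
    return iops * block_size


if __name__ == "__main__":
    sample = make_requests(10_000, span_bytes=1 << 30)
    reads = sum(1 for op, _ in sample if op == "read")
    print(f"read share: {reads / len(sample):.2%}")
    print(f"300,000 IOPS at 8KB: {iops_to_bytes_per_second(300_000):,} bytes/s")
```

Under that 8,192-byte assumption, 300,000 IOPS works out to 2,457,600,000 bytes per second, or roughly 2.46GB/s, which sits below the 3GB/s-plus sequential figures ATTO reports on larger transfers.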

The file server workload consists of mixed-size file transfers. There's a hefty increase in performance - evidenced by what appear to be 'flatline' results for a single drive - with the 24-drive server's IOPS consistently hitting 250,000 when queue depth is increased.

More of the same in the workstation test. High IOPS means excellent responsiveness to a large number of requests. We believe it's possible to obtain higher numbers than those shown by adjusting the RAID setup and using a different operating system.
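The link between IOPS and responsiveness can be sketched with Little's law, which says the average number of outstanding requests equals throughput multiplied by average latency. The snippet below is a back-of-the-envelope illustration rather than anything measured here: the function name is our own, and the queue depth of 32 is an assumed value, paired with the 250,000 IOPS seen in the file server test purely as an example.

```python
def mean_latency_ms(queue_depth, iops):
    """Estimate mean per-request latency in milliseconds via Little's law.

    outstanding requests = throughput x mean latency, so
    mean latency = queue_depth / iops (in seconds).
    """
    if iops <= 0:
        raise ValueError("iops must be positive")
    return queue_depth / iops * 1000.0


if __name__ == "__main__":
    # Queue depth of 32 is an assumption, not a setting taken from the tests.
    print(f"{mean_latency_ms(32, 250_000):.3f} ms")
```

At a fixed queue depth, doubling the IOPS halves the average time each request spends waiting, which is why high IOPS translates into a more responsive server under load.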

Thoughts

Our first brief look at a storage server shows just how much capacity can be crammed into a 2U rack-mounted chassis. The 800GB Intel DC S3700 SSD may seem expensive at first glance, at £1,500 a pop, but it's considerably cheaper than, say, the SAS drives that regularly populate this space.

Investigating the performance of high-capacity servers is an interesting and relevant area, more so as the demands of cloud computing and storage increase. We'll be taking a closer look at subsequent servers using different SSDs and operating systems - variations of Linux - in the near future. For now, we'd like to thank Hammer, Intel and Kingston for showcasing how the Intel DC S3700 SSD fits into the enterprise space.