Focussing on storage

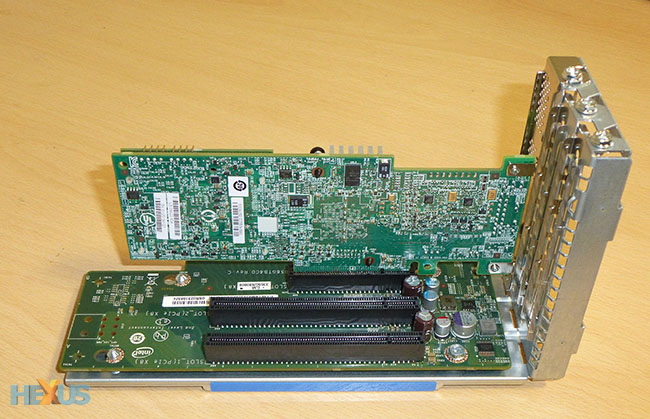

The server board has a total of 48 PCIe Gen 3 lanes that can be accessed through the two brown-coloured riser slots. Two riser cards slot into these and each offers a trio of x8 PCIe Gen 3 slots into which a dedicated graphics card or, more pertinently, RAID storage card(s) can be installed.
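The lane budget is easy to sanity-check. The short Python sketch below simply multiplies out the slot layout described above. The per-lane throughput is our assumption for illustration, based on PCIe Gen 3's 8GT/s signalling and 128b/130b encoding, and is a theoretical ceiling rather than anything measured on this server.

```python
# Lane budget for the riser layout described above.
RISERS = 2
SLOTS_PER_RISER = 3
LANES_PER_SLOT = 8

# Assumption: PCIe Gen 3 runs at 8 GT/s per lane with 128b/130b encoding,
# giving roughly 985 MB/s of theoretical bandwidth per lane, per direction.
GEN3_MB_PER_LANE = 8e9 * (128 / 130) / 8 / 1e6

total_lanes = RISERS * SLOTS_PER_RISER * LANES_PER_SLOT
slot_bandwidth_gb = LANES_PER_SLOT * GEN3_MB_PER_LANE / 1000

print(f"Total lanes: {total_lanes}")
print(f"Theoretical x8 slot bandwidth: {slot_bandwidth_gb:.2f} GB/s per direction")
```

Real-world throughput per RAID card will sit below that ceiling once protocol overhead and the controller itself are taken into account.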

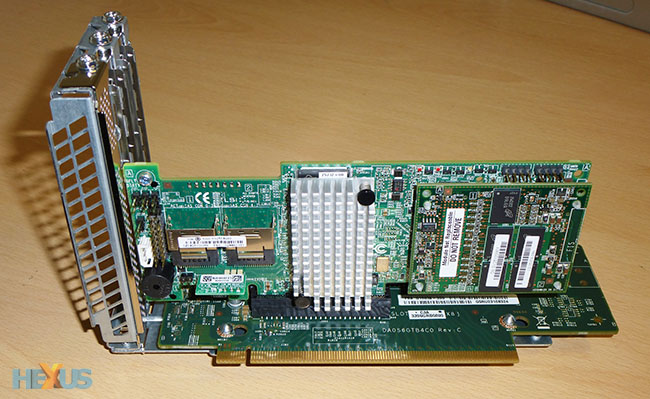

Here's one of the riser cards with a single Intel/LSI RS25DB080 RAID controller in situ, connected via that x8 PCIe interface. Powered by a dual-core LSI SAS2208 ROC chip and supported by an embedded 1GB cache (the daughtercard at the front), the controller has two mini-SAS connectors that each hook up to four SAS or SATA SSDs, for a total of eight drives per controller. The onboard chip supports a wealth of RAID modes, focussing on redundancy as much as on pure performance.

A single RAID card on one riser and two on the other means that a total of six mini-SAS cables snake away to the front of the chassis and into enclosures that each access four drives.
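For anyone keeping count, the cabling can be sketched in a few lines of Python. The controller, connector and drive counts come from the description above; the bay numbering (0 to 23, filled controller by controller) and the `locate_bay` helper are hypothetical, as we haven't traced which physical bay each cable actually serves.

```python
CONTROLLERS = 3                # one card on one riser, two on the other
CONNECTORS_PER_CONTROLLER = 2  # mini-SAS connectors on each RAID card
DRIVES_PER_CONNECTOR = 4       # each cable feeds a four-drive enclosure

cables = CONTROLLERS * CONNECTORS_PER_CONTROLLER
bays = cables * DRIVES_PER_CONNECTOR


def locate_bay(bay: int) -> tuple[int, int]:
    """Map a bay number to (controller, connector).

    Assumes a hypothetical numbering in which bays are filled
    cable by cable and controller by controller.
    """
    if not 0 <= bay < bays:
        raise ValueError(f"bay must be in 0..{bays - 1}")
    cable = bay // DRIVES_PER_CONNECTOR
    return cable // CONNECTORS_PER_CONTROLLER, cable % CONNECTORS_PER_CONTROLLER


print(f"{cables} cables serve {bays} bays")
```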

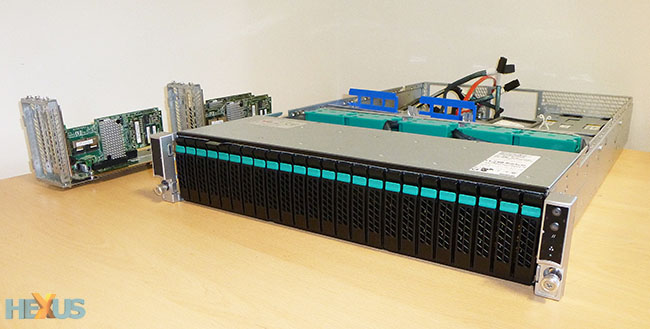

And here are those 24 hot-swappable bays. This type of storage server historically held mechanical drives, but such has been the advancement of solid-state drives, particularly with respect to capacity, reliability and consistency, that it's now possible, and even advisable, to use high-quality SSDs for ultra-responsive performance.

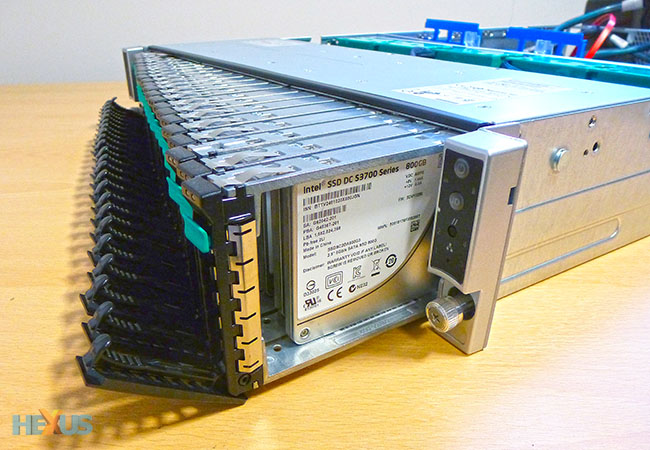

Coming back to the Intel DC S3700 drives we mentioned at the beginning of this technical evaluation, 24 of those are pre-fitted into the front-end.

You're looking at around £37,000 of SSDs here. Add the rest of the supporting cast together - server, board, memory, CPUs, RAID cards, etc. - and there isn't much change from £45,000. Intel understandably keeps it all in-house. The server only works with a particular motherboard and specific Xeon class of chips - E5 in this case. It could be argued there's little need to spend over 80 per cent of the budget on SSDs alone, but hey, if you've got it, flaunt it.

Driving the beast

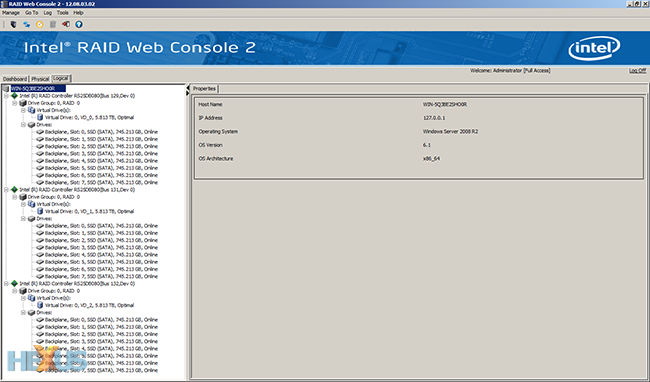

The choice of operating system defines potential performance to a large degree. Our Hammer-built server was pre-loaded with Windows Server 2008 R2, but there's no reason not to use a Linux distribution instead - and some very good performance-related reasons to do so. Yet this is more a technical examination of the environment an Intel DC S3700 would be used in, so we kept to the default operating system.

The ear-splitting sound when switching it on reminds us this is a rack-mountable unit destined for a data-centre environment, not a small office. The server's noise profile can be regulated through the BIOS and, once set to 'low-noise' mode and past the initial POST sequence, the server quietens down to an acceptable hum. For those interested, it pulls around 160W when idling, rising to 280W when all drives are being accessed.

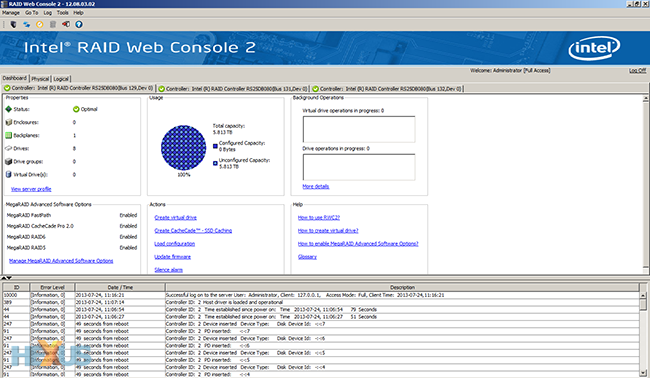

Basic storage configuration takes place through Intel's RAID Web Console 2. The three RAID controllers, each connecting to eight DC S3700 drives, are identified separately.

There are countless RAID options available with, as expected, more focus on data security - RAID 5/6 - than on straight-line speed. Interestingly, the SSDs can be used as a front-end cache for regular mechanical drives. This is handled by LSI's CacheCade software, which is configurable through the console, and we reckon a mix of DC S3700s and 2.5in, 10K drives would work well.
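To put the redundancy trade-off into numbers, here's a simplified Python sketch of how many drives' worth of space remains usable on one eight-drive controller under RAID 0, 5 and 6. It uses the textbook parity cost of each level and ignores hot spares and controller metadata, so treat it as a rough guide rather than what the console will report. The `usable_drives` helper is our own, not part of any Intel or LSI tool.

```python
# Textbook parity overhead, in whole drives, for each RAID level.
PARITY_DRIVES = {0: 0, 5: 1, 6: 2}


def usable_drives(level: int, drives: int) -> int:
    """Return how many drives' worth of capacity holds user data."""
    if level not in PARITY_DRIVES:
        raise ValueError(f"unsupported RAID level: {level}")
    parity = PARITY_DRIVES[level]
    if drives <= parity:
        raise ValueError("not enough drives for this RAID level")
    return drives - parity


DRIVES_PER_CONTROLLER = 8
for level in (0, 5, 6):
    usable = usable_drives(level, DRIVES_PER_CONTROLLER)
    print(f"RAID {level}: {usable}/{DRIVES_PER_CONTROLLER} drives usable "
          f"({usable / DRIVES_PER_CONTROLLER:.0%})")
```

On this basis RAID 6 gives up a quarter of each controller's capacity in exchange for surviving two drive failures, while RAID 0 gives up nothing and survives none.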

Most administrators wouldn't run a storage server without any form of redundancy. For giggles, however, we chose to run RAID 0 for maximum throughput, with a stripe size of 64KB. Once formatted, 17.4TB is shown in Windows.
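The capacity figure and the striping itself can both be sketched in Python. Two assumptions are baked in and flagged in the comments: that each drive is the 800GB model (decimal gigabytes), and that the 64KB setting is the chunk written to each drive before moving on to the next. The sketch also treats all 24 drives as one idealised stripe set, which glosses over how the three controllers are actually combined. The `locate_byte` helper is hypothetical and purely illustrative.

```python
DRIVES = 24
DRIVE_BYTES = 800 * 10**9   # assumption: 800GB drives, decimal gigabytes
STRIP_BYTES = 64 * 1024     # assumption: 64KB is the per-drive chunk

raw_bytes = DRIVES * DRIVE_BYTES
raw_tib = raw_bytes / 2**40  # Windows reports binary units but labels them TB


def locate_byte(offset: int, drives: int = DRIVES,
                strip: int = STRIP_BYTES) -> tuple[int, int]:
    """Return (drive index, byte offset on that drive) for a logical offset."""
    strip_number, within = divmod(offset, strip)
    row, drive = divmod(strip_number, drives)
    return drive, row * strip + within


print(f"{raw_bytes / 10**12:.1f} TB raw = {raw_tib:.2f} TiB")
print(locate_byte(64 * 1024))  # first byte of the second chunk
```

Under those assumptions the raw array works out to roughly 17.46TiB, which squares with the 17.4TB Windows shows. Consecutive 64KB chunks land on consecutive drives, which is why large sequential transfers can keep every SSD busy at once.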