Raytracing A Reality?

Arguably the most talked-about feature of the Turing architecture is its ability to generate realistic lighting via ray tracing. Traditional rasterisation - the output from the shader-core - has been evolving to mimic accurate lighting. In some games it's pretty good, but it's all a clever hack, and scenes with multiple light sources can wreak havoc on lighting accuracy. Pre-baked lightmaps can do a reasonable job of mimicry, but they are expensive from an artist's point of view. Similarly, techniques such as screen-space reflections can't, well, reflect anything that is not already on screen. GPU architects have tried to solve the super-accurate lighting riddle for a long time.

The way to do it if you have an unlimited computational budget is to ray trace the entire scene, where thousands of light rays are used per pixel and the simulated end result looks practically real because the colour of each pixel is determined by the path the light ray has taken through the scene. If a ray is reflected off one surface and then passes through a semi-transparent object, the final colour of a pixel next to, say, a vase will be a very specific shade. Ray tracing enables you to calculate this particular shade accurately, and that is why reflections and overall image quality (IQ) look so good. Hollywood studios often use variants of ray tracing - path tracing is the gold standard - for hyper-realistic lighting effects, but running algorithms that track the multiple interactions of each ray has been practically unheard-of in the real-time computer graphics world.

This is where Nvidia comes in, taking the first steps towards bringing ray tracing to the PC gamer. You see, Turing has up to 72 RT cores - one per SM - that, when run in conjunction with RTX software, can help process the algorithms necessary for lifelike ray tracing. Nvidia understands that a few specialised cores cannot ray trace an entire scene; even Hollywood studios, with their dedicated server farms, can take hours to render a single frame.

Rather, Turing is employing these RT cores in concert with traditional rasterisation. With this approach, says Nvidia, 'rasterisation is used where it is most effective, and ray tracing is used where it provides the most visual benefit vs. rasterization, such as rendering reflections, refractions, and shadows'. Makes sense, and this hybrid approach will be the way forward for the foreseeable future.

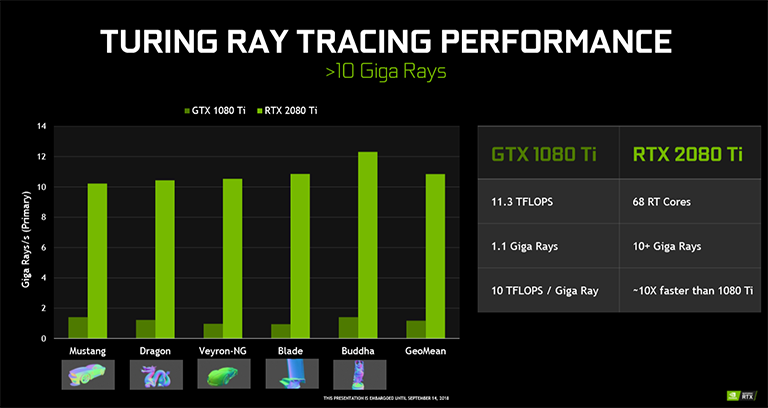

You don't actually need dedicated hardware to run the more basic version of ray tracing called ray casting; it's just a lot slower without it. The top-spec Turing card is about 9x faster than GeForce GTX 1080 Ti at this job - this is where the Giga Rays figures are derived from. Even such a speed-up isn't enough to allow lots of rays per pixel, so Turing uses a technique known as advanced denoise filtering to minimise the number required.
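To put the rays-per-pixel problem into numbers, here is a rough back-of-the-envelope sketch. It assumes Nvidia's quoted peak of 10 Giga Rays/s for the top Turing card, a figure that is not measured here, and it assumes every ray is available for the frame, which real games will not manage. The function name `rays_per_pixel` is purely illustrative.

```python
# Assumption: 10 Giga Rays/s is Nvidia's quoted peak for its top Turing card.
# It is a marketing ceiling, not a measured in-game figure.
PEAK_RAYS_PER_SECOND = 10e9


def rays_per_pixel(width, height, fps, rays_per_second=PEAK_RAYS_PER_SECOND):
    """Upper bound on the rays available for each pixel of each frame."""
    return rays_per_second / (width * height * fps)


resolutions = {"1080p": (1920, 1080), "1440p": (2560, 1440), "4K": (3840, 2160)}
for name, (w, h) in resolutions.items():
    budget = rays_per_pixel(w, h, fps=60)
    print(f"{name} at 60fps: about {budget:.0f} rays per pixel")
```

Even at this theoretical peak the budget works out to roughly 80 rays per pixel at 1080p60 and around 20 at 4K60, a long way short of the thousands used for offline rendering, which is why denoising matters so much.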

The way ray casting works is as follows: an algorithm traces multiple rays through each pixel of the scene. The key is to determine whether a ray hits a triangle (also called a primitive), and if it does, the colour of that triangle is calculated, taking into account its distance from the viewer. The ray may continue and bounce around the scene for some time, hitting other triangles and therefore changing the final colour of the pixel. In Turing's hybrid model, it is still rasterisation that converts the bulk of the triangles into shaded pixels.
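The first step, sending a ray through a pixel, can be sketched in a few lines. This is a minimal illustration assuming a simple pinhole camera sat at the origin and looking down the negative z axis; the function name `primary_ray` and the 60-degree default field of view are hypothetical choices, not anything taken from Nvidia's RTX software.

```python
import math


def primary_ray(px, py, width, height, fov_deg=60.0):
    """Return (origin, unit direction) for the ray through the centre of pixel (px, py).

    Assumes a pinhole camera at the origin looking down -z, with fov_deg as the
    vertical field of view. Row 0 is the top of the image.
    """
    aspect = width / height
    half_height = math.tan(math.radians(fov_deg) / 2)
    # Map the pixel centre onto an image plane one unit in front of the camera.
    x = (2 * (px + 0.5) / width - 1) * aspect * half_height
    y = (1 - 2 * (py + 0.5) / height) * half_height
    length = math.sqrt(x * x + y * y + 1.0)
    return (0.0, 0.0, 0.0), (x / length, y / length, -1.0 / length)


# One ray per pixel for a tiny 4x3 image; a real renderer would fire several.
rays = [primary_ray(px, py, 4, 3) for py in range(3) for px in range(4)]
print(len(rays), "primary rays generated")
```

Each of these rays then has to be tested against the scene's triangles, and that is where the real cost lies.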

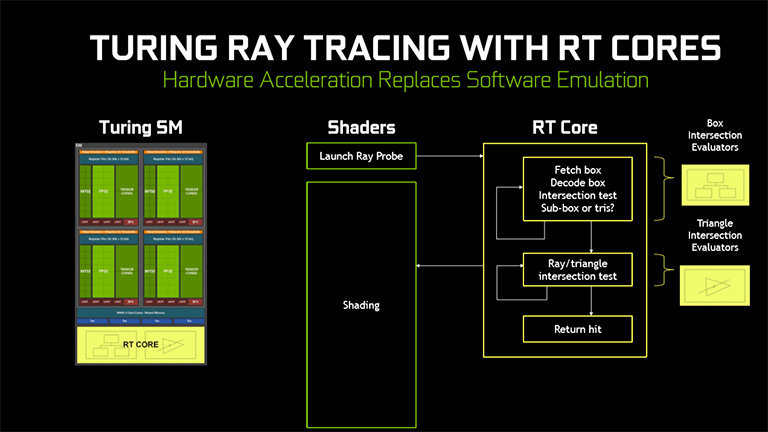

But you can't just fire out even a small number of rays in completely random fashion, hoping they will hit a triangle and therefore be considered when determining the final colour of the screen-space pixel. Doing so is a waste of resources. You need to know that a ray will hit a triangle before shading, so rather than go with a scattergun approach across the whole scene, a method known as bounding volume hierarchy (BVH) is used to break the scene into ever-smaller boxes until you know for sure a triangle is inside one of them. The subsequent ray/triangle intersection test is pivotal to efficient ray casting and is the joint job of the BVH algorithm and RT cores.
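To make the box-narrowing idea concrete, here is a minimal software sketch of BVH traversal. It assumes axis-aligned boxes and the common 'slab' test for ray/box intersection; the `Node` layout and function names are hypothetical, and nothing here is meant to describe how Turing's hardware actually stores or walks its hierarchy.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    lo: Vec3  # minimum corner of the bounding box
    hi: Vec3  # maximum corner of the bounding box
    children: List["Node"] = field(default_factory=list)
    triangles: List[int] = field(default_factory=list)  # triangle ids, leaves only


def ray_hits_box(origin, direction, lo, hi):
    """Slab test: clip the ray against each pair of axis-aligned planes."""
    t_near, t_far = 0.0, float("inf")
    for o, d, a, b in zip(origin, direction, lo, hi):
        if abs(d) < 1e-12:  # ray runs parallel to this pair of planes
            if o < a or o > b:
                return False
            continue
        t0, t1 = (a - o) / d, (b - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True


def candidate_triangles(node, origin, direction):
    """Walk the hierarchy, skipping every box (and its contents) the ray misses."""
    if not ray_hits_box(origin, direction, node.lo, node.hi):
        return []
    if not node.children:
        return list(node.triangles)
    found = []
    for child in node.children:
        found.extend(candidate_triangles(child, origin, direction))
    return found


left = Node((-2, -1, -1), (-1, 1, 1), triangles=[0, 1])
right = Node((1, -1, -1), (2, 1, 1), triangles=[2, 3])
root = Node((-2, -1, -1), (2, 1, 1), children=[left, right])
print(candidate_triangles(root, (1.5, 0.0, 5.0), (0.0, 0.0, -1.0)))  # [2, 3]
```

The ray in the example only ever looks at the right-hand box, so triangles 0 and 1 are never tested. Scale that saving up to a scene with millions of triangles and the appeal of the hierarchy is obvious.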

On Pascal, the BVH algorithm is run on the shader-core and is relatively inefficient, taking up thousands of software instruction slots per ray. On Turing, this job is handed to the RT core. It carries two specialist units whose jobs are to look into ever-smaller BVH boxes (unit 1) and, once the right box is found, calculate the ray-triangle intersection (unit 2). This is how you glean the right colour for the pixel, and this is why images look so good: the colour is not an approximation; it is inferred from the path of the light ray, much as your eye perceives it. When Nvidia says Turing is 9x faster, it's referring to this hardware acceleration as opposed to doing it all on the shader-core.
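For a flavour of the second job, the ray-triangle test, here is a sketch using the well-known Möller-Trumbore algorithm. This is the sort of routine that would otherwise run in software on the shader-core; whether the RT core uses this exact method internally is not something Nvidia has detailed here, so treat it as an illustration only. The helper names are hypothetical.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Moller-Trumbore test: distance along the ray to the hit, or None on a miss."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None  # ignore hits behind the ray origin


tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(ray_triangle((0.25, 0.25, 1.0), (0.0, 0.0, -1.0), *tri))  # 1.0
print(ray_triangle((2.0, 2.0, 1.0), (0.0, 0.0, -1.0), *tri))    # None
```

It is only a couple of dozen arithmetic operations per test, but it has to be repeated for every candidate triangle of every ray, which is why moving it into fixed-function hardware pays off.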

Even though it is much quicker, developers will naturally limit the use of ray tracing - you cannot fire enough rays into a full scene, and determine the triangle intersection(s) and final colour, without huge slowdowns. Devs could use it for materials with high reflectivity - metallic spheres, for example - or in areas where really good shadows are needed. The developer will have to decide exactly how it is implemented, so support has to be added on a per-game basis. There are 13 games that will feature Turing ray tracing support at some point in the near future.

Yet before you get all giddy about the prospect of running ray tracing straight away, you will need to wait for Microsoft to update Windows 10 with DirectX Raytracing (DXR) support, mooted for the October update.

In summary, RT cores appear to be good at calculating where the ray-triangle intersection is, removing that load from the shader, whose job is to launch the ray probe in the first instance and then get feedback from the RT core with respect to a triangle hit. If there is a hit, the shader then determines the lighting value (pixel colour) along the ray's full trajectory, shades it appropriately, and writes it out. It's a long-winded way of getting photo-realistic imagery.

Got a really complex scene with an incredible amount of reflection? The Turing GPU will still be constrained because it can't handle that workload in real time. It will be interesting to see just how games developers tune their engines to balance image fidelity against frame-rate performance.