A Performance Metric, Mesh And Variable-Rate Shading

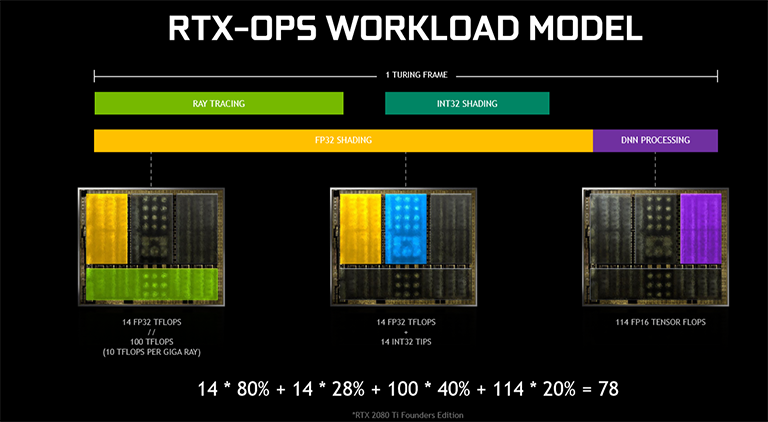

A word or two on understanding peak performance. The shading cores, both INT32 and FP32, can work alongside the RT cores on a particular scene, while the Tensor cores offer a quasi-post-processing step via DLSS, so there's plenty happening in one heavy Turing frame. Exemplifying this is probably the 'best' marketing slide you will see for a while, one that illustrates the total amount of work during one frame cycle.

The calculation is that FP32 shading is active for 80 per cent of the frame (contributing 11.2 TFLOPS), INT32 for 28 per cent (3.92 TIPS), the RT cores for a further 40 per cent and Tensor processing for 20 per cent, all adding up to 78 RTX-OPS. Of course, Nvidia is mixing precisions to hit this overall metric. We wonder what your thoughts are on such performance projections.
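
RTX-OPS is, in effect, a weighted sum: each unit's peak throughput multiplied by the share of the frame it is assumed to be busy. The minimal sketch below reproduces that arithmetic. The FP32 and INT32 peaks (14 TFLOPS and 14 TIPS) are backed out of the figures above (11.2 / 0.8 and 3.92 / 0.28); the RT-core peak (100 'RT-TFLOPS') and Tensor peak (114 TFLOPS) are our assumption, based on Nvidia's RTX 2080 Ti Founders Edition numbers, and the dictionary names are hypothetical.

```python
# Sketch of the RTX-OPS weighting: sum of (peak throughput x fraction of frame busy).
# FP32/INT32 peaks are derived from the article's 11.2 and 3.92 contributions;
# RT and Tensor peaks are ASSUMED (RTX 2080 Ti Founders Edition-class figures).

PEAKS = {
    "fp32_tflops": 14.0,     # 11.2 / 0.80
    "int32_tips": 14.0,      # 3.92 / 0.28
    "rt_tflops": 100.0,      # assumed: ray-tracing work expressed as FLOPS-equivalent
    "tensor_tflops": 114.0,  # assumed: FP16 Tensor peak
}

# Fraction of the frame each unit is assumed to be busy (from the slide).
UTILISATION = {
    "fp32_tflops": 0.80,
    "int32_tips": 0.28,
    "rt_tflops": 0.40,
    "tensor_tflops": 0.20,
}


def rtx_ops(peaks, utilisation):
    """Return each unit's weighted contribution and their total."""
    contributions = {name: peaks[name] * utilisation[name] for name in peaks}
    return contributions, sum(contributions.values())


if __name__ == "__main__":
    parts, total = rtx_ops(PEAKS, UTILISATION)
    for name, value in parts.items():
        print(f"{name:>14}: {value:6.2f}")
    print(f"{'total':>14}: {total:6.2f} (~{round(total)} RTX-OPS)")
```

The total comes to 77.92, which rounds to the quoted 78. Note that the sum adds FP32, INT32, FP16 Tensor and ray-tracing work together, which is why the single figure is hard to compare with anything else.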

Additional Shading Capability

Mesh Shading

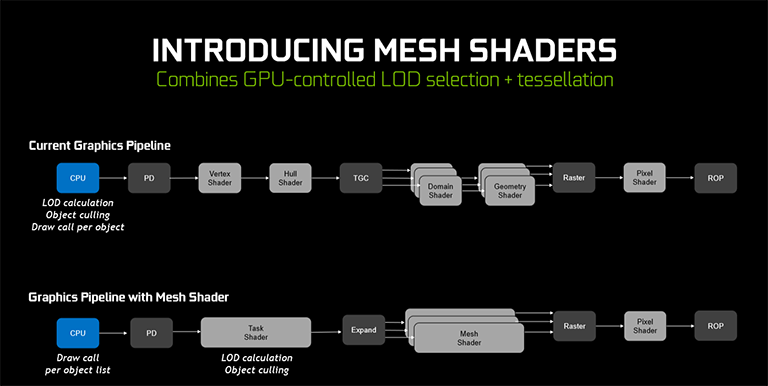

That's not all, folks. Ever heard us say that a scene is CPU limited because that poor workhorse cannot make enough draw calls to satisfy the objects required on screen? What's really happening is that the CPU is setting the scene for the GPU to work on. In open-world areas you can have thousands of objects that all need to be processed by the pipeline. Put more than, say, a thousand on screen and most CPUs don't have enough time (or enough juice, looking at it another way) to issue sufficient draw calls and also calculate the necessary level of detail and whether objects need culling. That's too much work unless the CPU is insanely powerful and running multi-thread-aware DX12; DX11's multi-threading is woeful.
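
To make the bottleneck concrete, here is a minimal sketch of the per-object work the CPU does in the traditional model: cull, pick a level of detail from distance, then issue one draw call per surviving object. The `SceneObject` type, the distance-based LOD rule and the crude culling test are hypothetical simplifications; real engines test against frustum planes and call a graphics API rather than appending to a list.

```python
import math
from dataclasses import dataclass


@dataclass
class SceneObject:
    x: float
    y: float
    z: float
    radius: float  # bounding-sphere radius


def lod_for_distance(distance, lod_step=50.0, max_lod=3):
    """Hypothetical rule: one coarser LOD level per `lod_step` units, capped at max_lod."""
    return min(int(distance // lod_step), max_lod)


def is_visible(obj, cam, max_distance):
    """Crude culling (assumes the camera looks down +z): drop objects that are
    entirely behind the viewer or beyond the draw distance."""
    dx, dy, dz = obj.x - cam[0], obj.y - cam[1], obj.z - cam[2]
    if dz + obj.radius < 0:
        return False
    return math.sqrt(dx * dx + dy * dy + dz * dz) - obj.radius <= max_distance


def submit_frame_cpu(objects, cam, max_distance=200.0):
    """Traditional path: the CPU culls, picks LOD and issues one draw per visible object."""
    draw_calls = []
    for obj in objects:  # serial CPU loop; cost grows with object count
        if not is_visible(obj, cam, max_distance):
            continue
        distance = math.dist((obj.x, obj.y, obj.z), cam)
        draw_calls.append((obj, lod_for_distance(distance)))  # stands in for a draw call
    return draw_calls
```

With thousands of objects, every one of them passes through this serial loop, and each survivor costs a separate draw call.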

The all-new mesh-shading pipeline takes a lot of the load off the CPU by moving the LOD calculation (just how much detail each object must have based on its distance from the viewer) and object culling over to a new intermediate step called the Task Shader. Together with the Mesh Shader, it effectively replaces the vertex, tessellation and geometry stages of the traditional pipeline that are tasked with generating triangles/work.

In simple terms, the Task Shader works out what needs drawing and at what level of detail, and the Mesh Shader generates the triangles, which are then rasterised and shaded as usual. Nothing overly new there, as various shaders already exist to do that job, but the key is that the Task Shader can handle multiple objects rather than just one per traditional CPU-issued draw call. Helpful for games that run older versions of DirectX that are poor at pushing out draw calls? Most likely.
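
The split can be modelled in a few lines. In the mesh-shading model the Task Shader decides, per object, whether anything is drawn and at what detail, then launches work on small clusters of triangles (often called meshlets). The Mesh Shader outputs those triangles for rasterisation. The sketch below is a CPU-side simulation under stated assumptions: the `GpuObject` and `Meshlet` types, the precomputed distance and visibility fields, and the LOD rule are all hypothetical, and on real hardware the per-object work runs in parallel on the GPU.

```python
from dataclasses import dataclass


@dataclass
class Meshlet:
    triangles: list  # (i0, i1, i2) index triples


@dataclass
class GpuObject:
    distance: float  # distance from viewer, precomputed here for brevity
    visible: bool    # culling result, precomputed here for brevity
    lods: list       # lods[level] -> list of Meshlet; level 0 is most detailed


def task_stage(obj, lod_step=50.0):
    """Hypothetical task-shader work for one object: cull, pick LOD, choose meshlets."""
    if not obj.visible:
        return []
    level = min(int(obj.distance // lod_step), len(obj.lods) - 1)
    return obj.lods[level]


def mesh_stage(meshlet):
    """Hypothetical mesh-shader work: emit the meshlet's triangles for rasterisation."""
    return list(meshlet.triangles)


def dispatch_mesh_pipeline(objects):
    """One submission covering many objects; culling and LOD no longer cost CPU time."""
    triangles = []
    for obj in objects:  # runs in parallel on real hardware
        for meshlet in task_stage(obj):
            triangles.extend(mesh_stage(meshlet))
    return triangles
```

The design point is that the CPU hands over the whole object list once, instead of looping over it and issuing a draw per object.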

The point is that these two new shaders offer more flexibility than the standard pipeline. It makes most sense for pre-DX12 titles, you would think, but it will be interesting to see it working in practice.

Don't expect to see them on pre-Turing GPUs, either. The reason is the way in which these two shaders interface with the pipeline, as seen above. Emulating them on older hardware, though possible, would require a multi-pass compute-shader approach, negating the benefits entirely.

Variable-Rate Shading

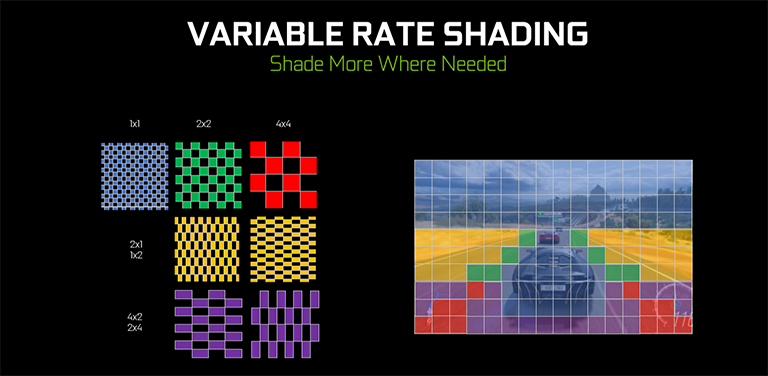

Another feature is variable-rate shading (VRS). Probably most applicable to VR-type workloads that need high resolution and low latency, VRS is the next step on from Pascal's Simultaneous Multi-Projection (SMP), and it allows the GPU to move away from the 1x1 individual shading of every pixel in the scene, which is what you would normally see. The shading rate can be set for each 16x16-pixel tile of the screen, so areas that are not as visually important can be shaded at a lower rate, say, once in every four pixels (green section) or once in every eight (yellow). It is up to the developer to determine the shading rates. It's also important to understand that the total number of pixels is still the same (4K remains 3,840x2,160), so there's no reduction in resolution. What changes is colour fidelity, as fewer pixels are shaded individually.
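
What happens inside one tile can be modelled simply: at a coarse rate such as 2x2 (once per four pixels) or 2x4 (once per eight), the shader runs once per block and its result is copied to every pixel in that block, so the pixel count never changes. This is a minimal sketch; `shade_tile` and the `shade` callback are hypothetical stand-ins for hardware behaviour, not an API, and rates are written as (width, height) blocks that must divide 16.

```python
TILE = 16  # the shading rate is chosen per 16x16-pixel tile


def shade_tile(rate, shade):
    """Shade one 16x16 tile at a coarse rate (w, h): one shader call per w x h block,
    with the result copied to every pixel in the block.
    Returns (tile_pixels, shader_invocations)."""
    w, h = rate
    tile = [[None] * TILE for _ in range(TILE)]
    invocations = 0
    for by in range(0, TILE, h):
        for bx in range(0, TILE, w):
            colour = shade(bx, by)  # one pixel-shader invocation for the whole block
            invocations += 1
            for y in range(by, by + h):
                for x in range(bx, bx + w):
                    tile[y][x] = colour  # every pixel still receives a value
    return tile, invocations
```

At 1x1 a tile costs 256 invocations, at 2x2 it costs 64 and at 2x4 it costs 32. Across a 4K frame (240x135 tiles, 8,294,400 pixels), shading everything at 2x2 would cut invocations to 2,073,600 while still writing every pixel.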

VRS can be applied in different ways, as well. In Content Adaptive Shading (CAS), the required shading rate for the next frame is determined at the end of the current frame. Imagine having a scene where the protagonist is walking towards a building that is mostly the same colour, along with a sky that doesn't change very much. The next frame can use VRS to lower the shading rate for those areas because there's little point in going for pixel-by-pixel shading. However, if there is, say, a flag near the foreground, it will likely be shaded at full rate. Developers need only a small change to their shaders to implement CAS, according to Nvidia.
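
A content-adaptive pass might look roughly like this: measure how much detail each 16x16 tile of the finished frame contains, then pick the next frame's rate for that tile. Nvidia hasn't said which metric it uses, so the luminance-contrast measure, the `low`/`high` thresholds and the function names below are all assumptions for illustration.

```python
TILE = 16


def tile_contrast(luma, tx, ty):
    """Max minus min luminance inside one 16x16 tile of a 2-D luminance array."""
    values = [luma[y][x]
              for y in range(ty * TILE, (ty + 1) * TILE)
              for x in range(tx * TILE, (tx + 1) * TILE)]
    return max(values) - min(values)


def content_adaptive_rates(luma, low=0.02, high=0.10):
    """Choose next frame's per-tile rate (w, h) from this frame's content.
    Thresholds are hypothetical: flat tiles drop to 2x2, busy tiles stay at 1x1."""
    tiles_y, tiles_x = len(luma) // TILE, len(luma[0]) // TILE
    rates = []
    for ty in range(tiles_y):
        row = []
        for tx in range(tiles_x):
            contrast = tile_contrast(luma, tx, ty)
            if contrast < low:
                row.append((2, 2))  # near-uniform, e.g. sky or a flat wall
            elif contrast < high:
                row.append((1, 2))  # some detail: halve the rate vertically only
            else:
                row.append((1, 1))  # high detail, e.g. a flag: full rate
        rates.append(row)
    return rates
```

Because the map is built from the frame just rendered, it lags the scene by one frame, which is acceptable when content changes little between frames.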

In another example, Motion Adaptive Shading (MAS) exploits the way in which LCDs work. As the screen refreshes 60 times a second or so, moving objects change location by 'jumping' across pixels rather than making a purely smooth transition. It is your eyes that smooth these jumps into acceptable movement, though the result is not perceived at full resolution because your eyes are busy filling in the gaps. VRS uses motion vectors to lower the shading rate: the faster the motion, the lower it can be without incurring a noticeable image-quality difference. So imagine panning quickly across a scene where the sky is mostly one colour; you can really lower the shading rate without obviously hurting quality.
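
The motion-adaptive idea reduces to a mapping from on-screen speed to shading rate. The minimal sketch below averages a tile's motion vectors (in pixels per frame) and picks a coarser rate as speed rises; the `slow`/`fast` thresholds and function names are hypothetical, not Nvidia's values.

```python
import math


def tile_motion(vectors):
    """Average length of a tile's per-pixel motion vectors (dx, dy), in pixels per frame."""
    return sum(math.hypot(dx, dy) for dx, dy in vectors) / len(vectors)


def motion_adaptive_rate(motion_px_per_frame, slow=2.0, fast=8.0):
    """Map average motion to a shading rate (w, h). Thresholds are hypothetical:
    the faster the motion, the coarser the shading that goes unnoticed."""
    if motion_px_per_frame < slow:
        return (1, 1)
    if motion_px_per_frame < fast:
        return (2, 2)
    return (4, 4)
```

A tile whose pixels all move 5 pixels per frame, for example, would land in the 2x2 band, while a fast pan of 20 pixels per frame would drop to 4x4.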

We really need to see VRS in action (Content- and Motion-Adaptive Shading can be used together) to determine just how well it works and how much it helps maintain high framerates, but going by a couple of demos during the press day, it appears to function adequately.
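
Since the two techniques can be combined, their per-tile outputs must be merged into one rate map. Nvidia hasn't detailed how it does this, so the policy below (per axis, keep the coarser of the two rates) is our assumption, as is the function name; a more conservative engine might keep the finer rate instead. Rates are (width, height) blocks per 16x16 tile.

```python
def combine_rate_maps(content_rates, motion_rates):
    """Merge per-tile CAS and MAS rate maps of equal shape.
    Assumed policy: on each axis, take the coarser (larger) of the two rates."""
    return [[(max(c[0], m[0]), max(c[1], m[1])) for c, m in zip(c_row, m_row)]
            for c_row, m_row in zip(content_rates, motion_rates)]
```

Under this policy, a detailed flag that is also moving quickly ends up shaded coarsely, on the basis that motion hides the lost detail.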

Given that it appears to be mainly a shading/software operation, you would think it'll work on older hardware. That's not the case, however, as Nvidia has said that enabling variable-rate shading required modifications between the shader and raster stages of the pipeline.

What is also not known is how developers will integrate variable-rate shading into games, at what quality levels, and how that will impact upon performance. We wouldn't want to see Turing racing ahead of Pascal in the benchmark stakes by using variable-rate shading alone.