Microsoft has a new 'drawing bot' that can create pictures of objects, pixel by pixel, based on a user's description. According to a blog post by Microsoft Research, the new bot has "produced a nearly three-fold boost in image quality compared to the previous state-of-the-art technique for text-to-image generation," as measured by an industry-standard test.

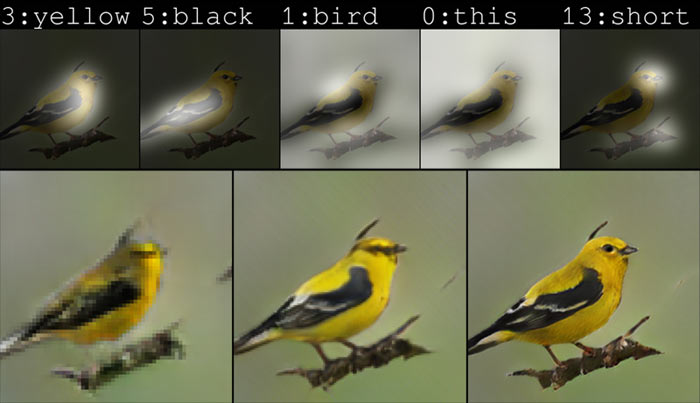

Above you can see a picture of an ordinary-looking bird. The image was built up by Microsoft's latest AI from a brief textual description of a yellow bird with black wings and a short beak resting upon a branch. The bird pictured may or may not exist anywhere on Earth. It is a rather humdrum example, though, as the researchers say the AI can paint, from scratch, "everything from ordinary pastoral scenes, such as grazing livestock, to the absurd, such as a floating double-decker bus". In effect, the AI uses its own imagination to fill in the gaps in a description of a scene.

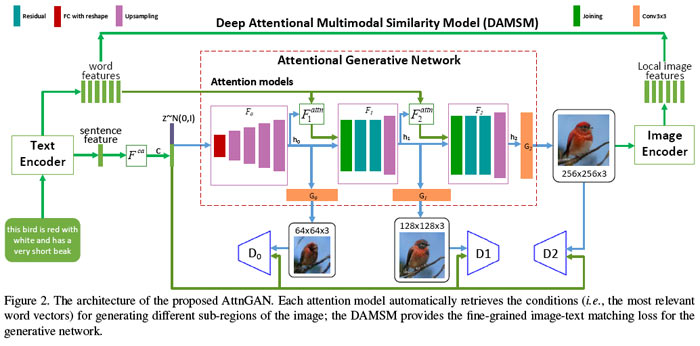

Behind the new AI drawing bot technology is a Generative Adversarial Network, or GAN. In this case the 'adversaries' are two machine learning models: one that generates the imagery from text descriptions, and another that uses text descriptions to judge the authenticity of images. "Working together, the discriminator pushes the generator toward perfection," says the blog.
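To make the tug-of-war between the two models a little more concrete, here is a minimal sketch of the textbook GAN objective in plain Python. It is not taken from Microsoft's code and may well differ from the losses the drawing bot uses; the function names and the example scores are hypothetical. The discriminator outputs a probability that an (image, text) pair is genuine, and each model is scored with binary cross-entropy.

```python
import math


def bce(p, target):
    """Binary cross-entropy for a single probability p and a 0/1 target."""
    eps = 1e-12  # clamp to avoid log(0)
    p = min(max(p, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(p_real, p_fake):
    # The discriminator wants real (image, text) pairs scored near 1
    # and generated pairs scored near 0.
    return bce(p_real, 1.0) + bce(p_fake, 0.0)


def generator_loss(p_fake):
    # The generator wants its own output to be scored as real (near 1).
    return bce(p_fake, 1.0)


# Hypothetical scores: a sharp discriminator versus one that is only guessing.
sharp = discriminator_loss(p_real=0.9, p_fake=0.1)
guessing = discriminator_loss(p_real=0.5, p_fake=0.5)
print(sharp < guessing)  # the sharp discriminator has the lower loss
```

As the generator's images get more convincing, `generator_loss` falls and `discriminator_loss` rises, which is the pressure that drives both models to improve.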

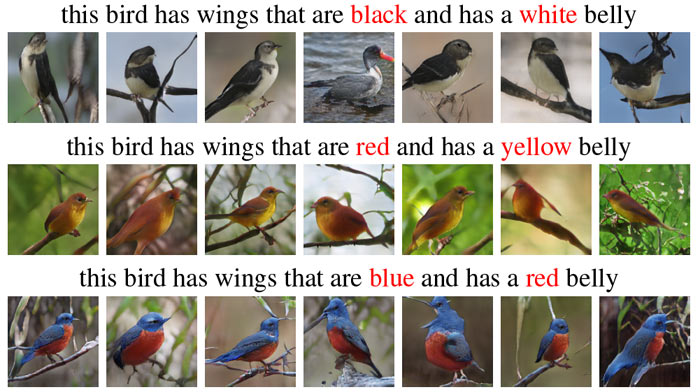

Previous AIs would tend to choke on too much detail, says Microsoft. For example, if told to draw a bird with a green crown, yellow wings and a red belly, such a system would usually generate a smudgy image, even though the same AIs could do simple bird drawings very well. Microsoft's new attentional GAN, or AttnGAN, is designed to improve drawing accuracy when given more textual detail. It has more focus, along with a kind of 'common sense' that it accrues in its training stages.
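The article does not spell out how that 'focus' works, so the following is only an illustrative sketch of generic dot-product attention, not AttnGAN's actual implementation. The idea shown is that each region of the image weighs the words of the description by relevance before drawing. The two-dimensional word vectors and the `head_region` query are made-up values.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(region, words):
    """Return (weights, context) for one image region over the word vectors."""
    # Relevance of each word to this region: a simple dot product.
    scores = [sum(r * w for r, w in zip(region, vec)) for vec in words]
    weights = softmax(scores)
    # Context vector: the attention-weighted average of the word vectors.
    dim = len(words[0])
    context = [sum(wt * vec[i] for wt, vec in zip(weights, words))
               for i in range(dim)]
    return weights, context


# Hypothetical embeddings for "green", "crown", "yellow", "wings".
words = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
head_region = [2.0, 0.0]  # a made-up query for the bird's head area

weights, context = attend(head_region, words)
print([round(w, 2) for w in weights])
```

With these toy numbers the head region puts most of its weight on "green" and "crown" and little on "yellow" and "wings", which is the kind of word-level focus that could help keep detailed descriptions from blurring together.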

Real-world applications of the new 'drawing bot' could include a sketch assistant for painters or interior designers, or a tool for voice-activated photo refinement. With further development time and processing power, it is possible that the AI could go on to create animations based upon storyboard descriptions, reducing a lot of the everyday animation labour in studios.