AMD has launched TrueAudio Next as part of its LiquidVR SDK; it is open source and available via the firm's GitHub software repository. This is clearly AMD addressing the same audio issues targeted by Nvidia's VRWorks Audio. In brief, it is a "scalable AMD technology that enables full real-time dynamic physics-based audio acoustics rendering, leveraging the powerful resources of AMD GPU Compute". The tech promises immersive audio to match the visual immersion provided by VR headsets.

For many years audio advancements seem to have stagnated while graphics surged ahead. In a post on the GPUOpen blog, entitled The Importance of Audio in VR, Carl Wakeland, a Fellow Design Engineer at AMD, suggests that this is down to the dominance, in computing and gaming, of the 2D screen positioned a little in front of the user. Yes, some FPS games and the like might sprinkle a bit of 3D audio into the mix where it helps the player gauge the positions of foes, etc., but most of the time 3D audio would be a distraction for anything but some minor background noises. Now the growing popularity of the head-mounted display "changes everything," asserts Wakeland.

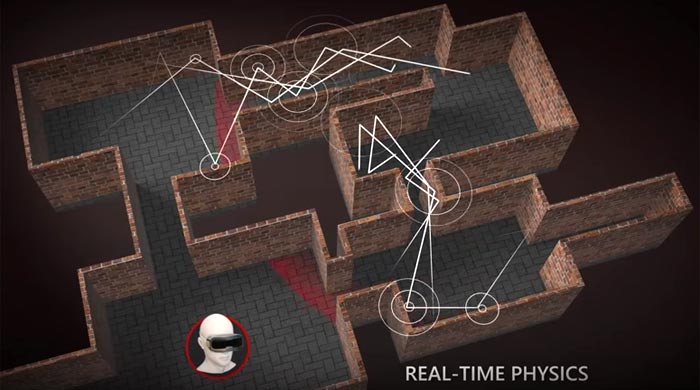

AMD TrueAudio Next is a significant step towards making environmental sound rendering closer to real-world acoustics with the modelling of the physics that propagate sound – AKA auralization. Though this is not perfect modelling, it comes very close, thanks to the power of real-time GPU compute enabled by AMD TrueAudio Next combined with other system resources.

Discussing the computing power required by auralization, Wakeland says that two primary algorithms need to be catered for: time-varying convolution (in the audio processing component) and ray-tracing (in the propagation component). He goes on to explain that "On AMD Radeon GPUs, ray-tracing can be accelerated using AMD’s open-source FireRays library, and time-varying real-time convolution can be accelerated with the AMD TrueAudio Next library." The new AMD TrueAudio Next library is a high-performance, OpenCL-based real-time math acceleration library for audio, with special emphasis on GPU compute acceleration.
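To give a rough feel for what "time-varying convolution" means, here is a minimal CPU-only sketch in plain Python. It is not the TrueAudio Next API and is not taken from AMD's code: the function names `convolve` and `time_varying_convolve`, the block size, and the toy impulse responses are all hypothetical. It assumes the simplest possible scheme, in which the audio is cut into blocks, each block is filtered with whichever impulse response applies at that moment (for example, because the listener has moved), and the filtered tails are overlap-added. A production implementation would more likely use FFT-based partitioned convolution on the GPU and crossfade between impulse responses.

```python
def convolve(signal, ir):
    """Direct-form linear convolution of two non-empty lists of floats."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(ir):
            out[i + j] += s * h
    return out


def time_varying_convolve(signal, irs, block_size):
    """Overlap-add convolution in which block k is filtered with irs[k].

    Hypothetical helper for illustration only. If there are more blocks
    than impulse responses, the last impulse response is reused.
    """
    longest_ir = max(len(ir) for ir in irs)
    out = [0.0] * (len(signal) + longest_ir - 1)
    for k, start in enumerate(range(0, len(signal), block_size)):
        block = signal[start:start + block_size]
        ir = irs[min(k, len(irs) - 1)]
        # Each block's reverberant tail spills into the following samples.
        for n, y in enumerate(convolve(block, ir)):
            out[start + n] += y
    return out


# Two clicks, four samples apart; the "room" changes between them.
dry = [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
room_a = [1.0, 0.5]        # short, strong early reflection
room_b = [1.0, 0.0, 0.25]  # later, weaker reflection
wet = time_varying_convolve(dry, [room_a, room_b], block_size=4)
print(wet)  # [1.0, 0.5, 0.0, 0.0, 1.0, 0.0, 0.25, 0.0, 0.0, 0.0]
```

The direct-form inner loop costs one multiply-add per signal sample per impulse-response tap, and real room responses can run to tens of thousands of taps per source, which hints at why this workload is a natural candidate for GPU compute.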

Importantly, to keep audio as smooth as the VR display, AMD uses its new 'CU Reservation' feature to reserve some CUs (compute units) for audio as necessary, alongside asynchronous compute.