Test system

For our testing, we used a barebones Intel 4U system, the S7000FC4UR, along with four Xeon X7350 CPUs and four Xeon L7345s.

Systems such as this aren't built to standard form factors such as the ATX or EATX boards used by many two-socket PCs. Instead, they're custom designed for the specific needs of the marketplace.

Cooling efficiency is of critical importance, with multiple fans, shrouds and dividers used to channel air through the chassis and ensure all the hot spots have a constant flow of air. Such systems are relied upon to provide constant uptime for mission-critical applications, and their failure could have expensive implications for corporations.

They're not used in offices but housed in server rooms, so they aren't built to be quiet. During normal operation, the Intel system could be heard from rooms adjacent to our lab, even with the doors shut. Take off the system's cover and the noise ramps right up - a bit like sitting on the wing of a 747 during take-off.

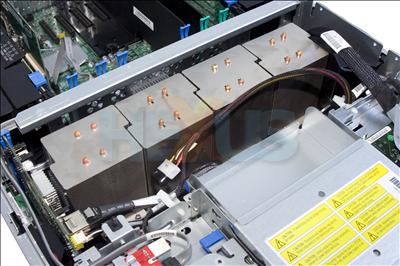

The CPUs are located towards the front of the chassis - just behind the eight-disc, hot-swappable, hard-drive enclosure - and are the first components to receive air from the two front-mounted 120mm fans.

The heatsinks are large and predominantly aluminium, except for the four copper heatpipes used to transfer heat up into the fins. Compared to many desktop heatsinks, they're surprisingly light and rely entirely on the airflow through the system to cool them.

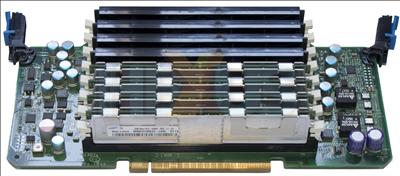

The system's memory is located on risers, two on each side of the chassis, with ducting to funnel the air from front to back.

Each of these memory risers corresponds to a separate memory channel, with eight FB-DIMM slots on each.

In our test system, each riser was outfitted with four 2GB FB-DIMMs running at 667MHz. With all four channels populated identically, this gave us a total of 32GiB of memory.
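The capacity figure follows directly from the riser layout. Here is a quick sketch of the arithmetic, treating each "2GB" module as 2GiB. The fully populated figure is purely hypothetical: it assumes the same 2GB modules in every slot and is not a configuration we tested.

```python
# Memory layout as described for our test system.
RISERS = 4            # one riser per FB-DIMM channel
SLOTS_PER_RISER = 8   # FB-DIMM slots on each riser
DIMMS_PER_RISER = 4   # slots actually populated in our configuration
DIMM_SIZE_GIB = 2     # 2GB FB-DIMMs

total_slots = RISERS * SLOTS_PER_RISER
installed_dimms = RISERS * DIMMS_PER_RISER
installed_gib = installed_dimms * DIMM_SIZE_GIB
empty_slots = total_slots - installed_dimms  # these hold thermal blanks instead

# Hypothetical: every slot filled with the same 2GB modules.
full_gib = total_slots * DIMM_SIZE_GIB

print(f"{installed_dimms} of {total_slots} slots used: {installed_gib} GiB installed")
print(f"{empty_slots} slots left empty")
print(f"All slots with 2GB modules: {full_gib} GiB")
```

Half the slots were left free, so the 32GiB tested here is well short of what the board can physically accommodate.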

Slots that didn't have FB-DIMM modules instead contained thermal blanks to further channel the airflow. Each pair of memory risers also has two 80mm fans installed in series to exhaust heat out the rear of the system.

The Clarksboro chipset is located along the chassis centreline, just behind the processors, and is covered by a large aluminium heatsink.

Clarksboro has a TDP of 47W when used with four memory channels - higher than some processors - so cooling is important. Even so, the aluminium heatsink used, while hardly small, is far less extravagant than the cooling solution found on many enthusiast-orientated desktop boards.

Underneath the heatsink there's a large heatspreader that blocks any further view - but it can safely be assumed that, given the 64MiB Snoop Filter, the chipset die will be fairly large.

Finally, down the centre of the system towards the rear, we find the PCIe expansion slots.

The full complement of seven PCIe 4x slots is provided, along with an I/O riser fitted with a card containing two Gigabit Ethernet ports - allowing four separate Gigabit Ethernet connections when including the two that are built into the mainboard.

All the PCIe slots are 4x mechanically as well as electrically, so there's no way to install NVIDIA Tesla or AMD Stream Processor GPGPU cards, whose 16x edge connectors simply won't fit.
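Beyond the physical fit, link width also caps bandwidth. The sketch below gives a rough per-direction comparison. It assumes first-generation PCIe signalling (2.5GT/s per lane with 8b/10b encoding), which is an assumption about this platform rather than a figure we measured, and it ignores packet and protocol overheads.

```python
# Assumption: first-generation PCIe, 2.5 GT/s per lane with 8b/10b line coding.
TRANSFERS_PER_S = 2_500_000_000  # line bits per second, per lane, per direction

def lane_bytes_per_s() -> int:
    """Usable bytes per second for one lane, in one direction."""
    data_bits = TRANSFERS_PER_S * 8 // 10  # 8b/10b: 8 data bits per 10 line bits
    return data_bits // 8                  # bits -> bytes

def link_mb_per_s(width: int) -> int:
    """Per-direction bandwidth of a link of the given width, in MB (10^6 bytes)/s."""
    return width * lane_bytes_per_s() // 1_000_000

for width in (4, 8, 16):
    print(f"{width}x: {link_mb_per_s(width)} MB/s per direction")
```

Under these assumptions, a 4x slot offers a quarter of the bandwidth a 16x GPGPU card is designed around.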

Summary

The Intel S7000FC4UR is a well-engineered chassis-and-board combination, with careful consideration paid to airflow and maintenance. The only time we had to pick up a screwdriver was to remove the CPU heatsinks - and even then, all four processors could easily be replaced in under ten minutes.

At 4U, though, it's not a particularly small system. In terms of absolute processor density, 1U and blade servers have more potential, but the S7000FC4UR offers a lot of flexibility because of the additional components that can be installed.
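To put the density point in rough numbers, the sketch below counts processor sockets per rack. The 42U rack and the 1U two-socket comparison server are illustrative assumptions, and the count ignores space taken by switches, power distribution and cabling.

```python
# Illustrative assumptions: a standard 42U rack and a hypothetical 1U
# two-socket server for comparison.
RACK_UNITS = 42

def sockets_per_rack(height_u: int, sockets_per_system: int) -> int:
    """Processor sockets that fit in one rack, ignoring switches and PDUs."""
    systems = RACK_UNITS // height_u  # whole systems only
    return systems * sockets_per_system

s7000 = sockets_per_rack(4, 4)  # S7000FC4UR: 4U, four sockets
one_u = sockets_per_rack(1, 2)  # hypothetical 1U dual-socket server

print(f"4U four-socket: {s7000} sockets per rack")
print(f"1U two-socket:  {one_u} sockets per rack")
```

Even a plain 1U two-socket design roughly doubles the socket count per rack in this simplified model, which is the trade the S7000FC4UR makes for its internal expansion room.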

However, having only 4x PCIe slots, with none at 8x or 16x, prevents the system being used with AMD or NVIDIA GPGPUs, including the external solutions that NVIDIA offers.