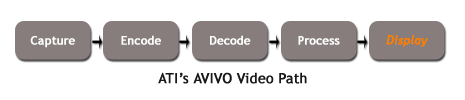

Display: Dithering and the million dollar dual-DVI question

Dither me this

All three of the digital outputs from the display crossbar will hit the dithering engine. Being native 10bpc from the framebuffer out (which somewhat confirms that ATI will support 10:10:10:2 rendertargets in 3D), the pipeline also has to support lesser displays that can't natively show that kind of colour depth. Dithering in this case effectively fakes the full colour depth on a display whose input has to be quantised (squeezing a larger range of values into a smaller one by throwing away the low-order bits) to show those colours.

Take the following images, one dithered and one undithered.

The difference is subtle but noticeable (after all, the dithered image has a 5-bit total colour depth!) and that's what Avivo seeks to minimise with the dithering hardware. Avivo employs spatial dithering which, and I'm guessing here, nudges the brightness of neighbouring pixels (who knows how big the sample size is, if indeed that's how it works) so that together they approximate the colour in the 10bpc source. The hardware likely uses the spare bits available to encode the intensities needed for spatial dithering to work. The resulting colour is then correct as an average over the neighbouring pixels.
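To make the "correct as an average over the neighbouring pixels" idea concrete, here's a minimal sketch of ordered spatial dithering from 10 bits per channel down to 8. It is emphatically not ATI's method, which isn't public; the 2x2 threshold matrix, the 8-bit target and the function names are all assumptions picked for illustration.

```python
# Hypothetical 2x2 ordered (Bayer) dither: 10-bit channel value -> 8-bit.
# The matrix and the 8-bit target are illustrative assumptions, not Avivo's design.
BAYER_2X2 = [[0, 2],
             [3, 1]]

def dither_10_to_8(value10, x, y):
    """Quantise one 10-bit channel value for the pixel at (x, y)."""
    # The top 8 bits are what the panel can show; the bottom 2 are what we lose.
    base, remainder = divmod(value10, 4)
    # Bump some pixels up by one step, depending on position, so the
    # neighbourhood average lands on the original 10-bit value.
    bump = 1 if remainder > BAYER_2X2[y % 2][x % 2] else 0
    return min(base + bump, 255)

def dither_flat_block(value10):
    """Dither a flat 2x2 patch of a single 10-bit value."""
    return [[dither_10_to_8(value10, x, y) for x in range(2)] for y in range(2)]

if __name__ == "__main__":
    block = dither_flat_block(514)            # 514 / 4 = 128.5 in 8-bit terms
    print(block)                              # a mix of 128s and 129s
    print(sum(map(sum, block)) / 4)           # the 2x2 average
```

No single pixel in the patch can show 128.5, but two pixels at 128 and two at 129 average out to it, which is the whole trick.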

It likely also pushes the rounding errors due to the dithering into neighbouring pixels. Adding a fraction of the error to the pixel on the right, some to the lower left, some to the pixel below and the rest to the lower right means you spread the overall error from quantising/rounding down evenly across the entire image, but not so the error is noticeable.
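That right, lower-left, below, lower-right pattern is how classic Floyd-Steinberg error diffusion works, so here's a small sketch of it taking a 10bpc channel down to 8 bits. Whether Avivo does anything like this is a guess on top of a guess; the 7/16, 3/16, 5/16 and 1/16 weights are the textbook Floyd-Steinberg ones, and the function name and 8-bit target are assumptions.

```python
import math

def error_diffuse_10_to_8(image10):
    """Floyd-Steinberg error diffusion on a 2D list of 10-bit channel values.

    Textbook weights, used here as an assumption; not a description of Avivo.
    """
    height, width = len(image10), len(image10[0])
    # Work in 8-bit units so the fractional part is exactly what gets lost.
    work = [[v / 4.0 for v in row] for row in image10]
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            wanted = work[y][x]
            chosen = max(0, min(255, math.floor(wanted + 0.5)))
            out[y][x] = chosen
            err = wanted - chosen
            # Hand the rounding error to pixels we haven't visited yet.
            if x + 1 < width:
                work[y][x + 1] += err * 7 / 16          # right
            if y + 1 < height:
                if x > 0:
                    work[y + 1][x - 1] += err * 3 / 16  # lower left
                work[y + 1][x] += err * 5 / 16          # below
                if x + 1 < width:
                    work[y + 1][x + 1] += err * 1 / 16  # lower right
    return out

if __name__ == "__main__":
    flat = [[514] * 16 for _ in range(16)]    # 128.5 in 8-bit terms
    result = error_diffuse_10_to_8(flat)
    mean = sum(map(sum, result)) / (16 * 16)
    print(sorted({v for row in result for v in row}), mean)
```

Error that falls off the right and bottom edges is simply dropped in this sketch, so the image average is close to the source rather than exact.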

Temporal dithering is also in there and works by averaging colour info for pixels across frames (and therefore over time, hence the temporal nomenclature). "How does your temporal dithering work, Godfrey?". "I'd rather not tell the competition how to make a display controller, if that's alright". Fair enough, my guess'll have to do. The end result is simulated 10bpc output on all digital displays. Seeing that in motion will be the acid test.
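Since Godfrey isn't telling, here's my guess in code form: a bare-bones temporal dither that flips a pixel between two adjacent 8-bit levels over a four-frame cycle so the average over time hits the 10-bit value. The cycle length, the 8-bit target and the function name are all assumptions, and real hardware would almost certainly vary the pattern per pixel to avoid visible flicker.

```python
# Hypothetical temporal dither: 10-bit channel value -> 8-bit, averaged over 4 frames.
# An illustrative guess only; ATI has not said how Avivo does it.
CYCLE = 4  # frames per cycle, matching the 2 bits being dropped

def temporal_dither_10_to_8(value10, frame):
    """Return the 8-bit level to show for this value on the given frame number."""
    base, remainder = divmod(value10, 4)
    # Show the brighter level on `remainder` out of every CYCLE frames.
    bump = 1 if (frame % CYCLE) < remainder else 0
    return min(base + bump, 255)

if __name__ == "__main__":
    frames = [temporal_dither_10_to_8(513, f) for f in range(CYCLE)]
    print(frames)                    # one brighter frame, three at the base level
    print(sum(frames) / CYCLE)       # time-averaged level in 8-bit units
```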

Spittin' it oot via yer monitor, aye

Right, the pair of dual-link TMDS transmitters will be on everything from RV515 up. No chopping of the Avivo silicon to create feature-differentiated SKUs, so I'm told. Given that it'll show up from the bottom up, the big question is: will even the cheapest SKUs get the ports? Of course, barring the low-profile versions, there's nothing stopping that happening other than ATI seeding reference designs to AIBs with VGA outputs, or the AIBs being miserly and saving literally cents on the connectors. The transmitters are on the GPU, so no cost there.

So, you cheap bastards, give customers of this on-the-face-of-it-great technology the digital connectivity options it mandates. Did I mention that DVI carries analogue? I don't think I did. DVI carries analogue. DVI only on the backplane, analogue convertors in the box. Thanks very much.

Crossfire limitations? Not so much with R5, really

Poke around the web recently and you'll get sniffs of X8-series Crossfire not doing anything over 60Hz at 1600x1200 on analogue outputs, or higher than 1920x1200 at all, because it feeds DVI output from the slave into the compositing engine on the master. That's pretty much correct.

Crossfire with R5-series GPUs suffers no such limitation given the dual-link outputs for DVI. Enough said.
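The arithmetic behind that limit is worth a quick look. A single DVI link tops out at a 165MHz pixel clock, and the pixel clock is just total pixels per frame (blanking included) times refresh rate. The sketch below runs the numbers for a few modes; the horizontal and vertical totals are standard VESA timing figures as I understand them, so treat them as assumptions, and the helper names are made up for the example.

```python
# Back-of-envelope check of which modes fit down a single DVI link.
SINGLE_LINK_MAX_MHZ = 165.0  # single-link TMDS pixel clock ceiling in DVI

def pixel_clock_mhz(h_total, v_total, refresh_hz):
    """Pixel clock in MHz from total (active + blanking) pixels and lines."""
    return h_total * v_total * refresh_hz / 1e6

def links_needed(clock_mhz):
    """1 if the mode fits a single link, otherwise 2 (dual-link)."""
    return 1 if clock_mhz <= SINGLE_LINK_MAX_MHZ else 2

# (h_total, v_total, refresh) per mode; totals are assumed VESA timings.
MODES = {
    "1600x1200 @ 60Hz": (2160, 1250, 60),
    "1600x1200 @ 85Hz": (2160, 1250, 85),
    "1920x1200 @ 60Hz (reduced blanking)": (2080, 1235, 60),
    "2560x1600 @ 60Hz (reduced blanking)": (2720, 1646, 60),
}

if __name__ == "__main__":
    for name, timing in MODES.items():
        clock = pixel_clock_mhz(*timing)
        print(f"{name}: {clock:.1f}MHz, links needed: {links_needed(clock)}")
```

With these figures, 1600x1200 at 60Hz and 1920x1200 at 60Hz squeak in under the single-link ceiling and anything beyond them doesn't, which lines up with the X8-series limits described above and with why dual-link outputs make the problem go away.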

Display Points

The big bits from Display? That'll be the 10bpc from start to finish, the 10bpc simulation via dithering on digital outputs, the 10bpc spat out over analogue too and the dual dual-link TMDS transmitters and all the joys they bring.

ATI claim the most flexible output connectivity in consumer graphics hardware and, should it all turn out this way, they'll be perfectly right. I'll have a go at summing the lot up now, since you suffered all of that lot and got this far.