Architecture

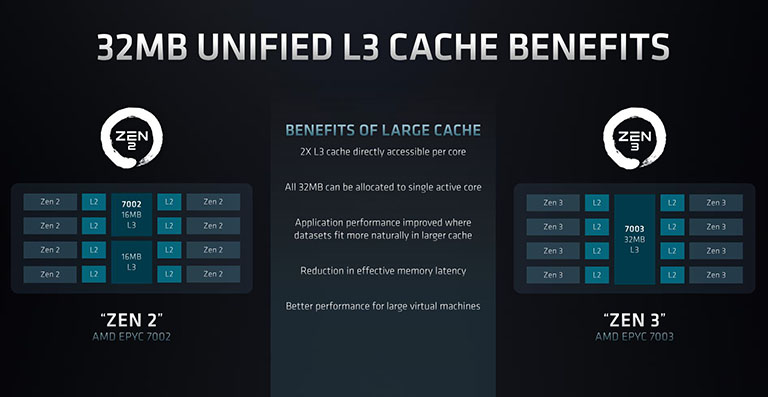

Part of Zen 3's remit is reorganising memory caching for better performance. Long-time readers will know that Zen groups a number of processor cores, each with its own L1 and L2 cache, around a shared pool of L3. Zen 2 bundles four cores into what is termed a CCX, and each CCX connects to its own 16MB of L3 cache. Two CCXes combine to build an eight-core CCD, which is what you see on the left-hand side. Though each CCD carries a total of 32MB of L3, if a Zen 2 core in the upper CCX needs to access the contents of the L3 cache in the lower CCX, it has to traverse Infinity Fabric, which introduces greater latency than fetching the data from its attached L3.

Zen 3, meanwhile, has a simpler, better arrangement. The core count and the L1, L2 and L3 capacities per CCD don't change. However, each CCD is now a single eight-core complex, so every core has access to the full 32MB of L3 without having to bridge over to another CCX. It may seem trivial to the casual observer, but it's a significant boon for the server space, as each Zen 3 CCD is better equipped to handle virtualised environments that benefit from more on-chip space for working datasets.
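To make the CCX change concrete, here is a minimal Python sketch that models a CCD as a number of CCXes, each with its own L3 slice, and reports how much L3 a single core can reach without crossing Infinity Fabric. The `CcdLayout` class and its field names are illustrative assumptions, not anything AMD defines, and the model deliberately ignores latency figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CcdLayout:
    """Hypothetical model of one CCD: CCXes, cores per CCX and L3 per CCX."""
    name: str
    ccx_per_ccd: int
    cores_per_ccx: int
    l3_per_ccx_mb: int

    def cores_per_ccd(self) -> int:
        return self.ccx_per_ccd * self.cores_per_ccx

    def l3_per_ccd_mb(self) -> int:
        return self.ccx_per_ccd * self.l3_per_ccx_mb

    def local_l3_per_core_mb(self) -> int:
        # A core reaches its own CCX's L3 directly; data held in another
        # CCX's L3 has to be fetched over Infinity Fabric.
        return self.l3_per_ccx_mb


ZEN2 = CcdLayout("Zen 2", ccx_per_ccd=2, cores_per_ccx=4, l3_per_ccx_mb=16)
ZEN3 = CcdLayout("Zen 3", ccx_per_ccd=1, cores_per_ccx=8, l3_per_ccx_mb=32)

for layout in (ZEN2, ZEN3):
    print(f"{layout.name}: {layout.cores_per_ccd()} cores and "
          f"{layout.l3_per_ccd_mb()}MB L3 per CCD, "
          f"{layout.local_l3_per_core_mb()}MB directly reachable per core")
```

Both layouts carry eight cores and 32MB of L3 per CCD; only the directly reachable share per core differs.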

Helping to lower latency and improve memory performance further, Milan chips now run the Infinity Fabric and memory controller at synchronous clock speeds. For example, Epyc 7003 Series processors can run the IF at 1,600MHz with DDR4-3200 memory, which has a 1,600MHz memory clock; Rome-based Epycs, on the other hand, can only run their IF at 1,467MHz with the same memory. Though it may seem minor, this pays off in memory-intensive applications, HPC software being a prime example.
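The 1:1 relationship can be expressed as a simple check. This is a simplified sketch: it assumes "synchronous" means the Infinity Fabric clock equals the memory clock (half the DDR transfer rate) and ignores any other clock domains involved; `memclk_mhz` and `is_synchronous` are hypothetical helpers.

```python
def memclk_mhz(ddr_rate_mts: int) -> float:
    """Memory clock for a DDR transfer rate.

    DDR moves data on both clock edges, so DDR4-3200 (3,200MT/s)
    runs a 1,600MHz memory clock.
    """
    return ddr_rate_mts / 2


def is_synchronous(fclk_mhz: float, ddr_rate_mts: int) -> bool:
    """True when the fabric clock matches the memory clock 1:1."""
    return fclk_mhz == memclk_mhz(ddr_rate_mts)


print("Milan, DDR4-3200:", is_synchronous(1600, 3200))
print("Rome, DDR4-3200:", is_synchronous(1467, 3200))
```

With DDR4-3200, the Milan figure lines up 1:1 while the Rome figure does not, which is where the extra latency on Rome comes from.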

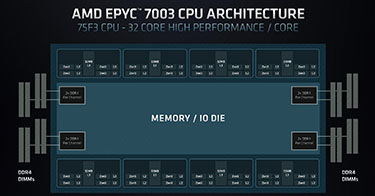

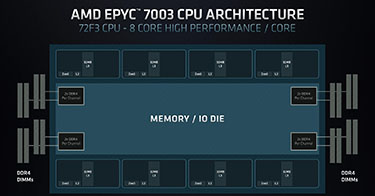

The modular nature of Epyc means AMD can mix and match cache and core counts to suit target workloads. For example, it is possible to construct a 32-core Epyc with 256MB of L3 cache - four active cores per CCD, plus the full 32MB of L3 - which is good for high-performance computing and relational databases. On the other hand, as with the 72F3, AMD can use a single core per CCD and build an eight-core chip with the same 256MB of L3, which is useful when fitting a large dataset into cache matters more than the number of cores.
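The core and cache arithmetic behind these configurations is straightforward. The sketch below assumes eight CCDs with all 32MB of L3 enabled on each, which matches both examples in the text; `epyc_config` is a hypothetical helper.

```python
L3_PER_CCD_MB = 32      # full Zen 3 CCD L3
MAX_CORES_PER_CCD = 8   # cores physically present on a Zen 3 CCD


def epyc_config(ccds: int, active_cores_per_ccd: int) -> tuple[int, int]:
    """Return (total cores, total L3 in MB), assuming every CCD keeps its full L3."""
    if not 1 <= active_cores_per_ccd <= MAX_CORES_PER_CCD:
        raise ValueError("a Zen 3 CCD has between 1 and 8 active cores")
    return ccds * active_cores_per_ccd, ccds * L3_PER_CCD_MB


print("32-core part:", epyc_config(8, 4))
print("72F3-style part:", epyc_config(8, 1))
```

Because L3 scales with the number of CCDs rather than active cores, the 8-core and 32-core builds end up with the same 256MB.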

Models

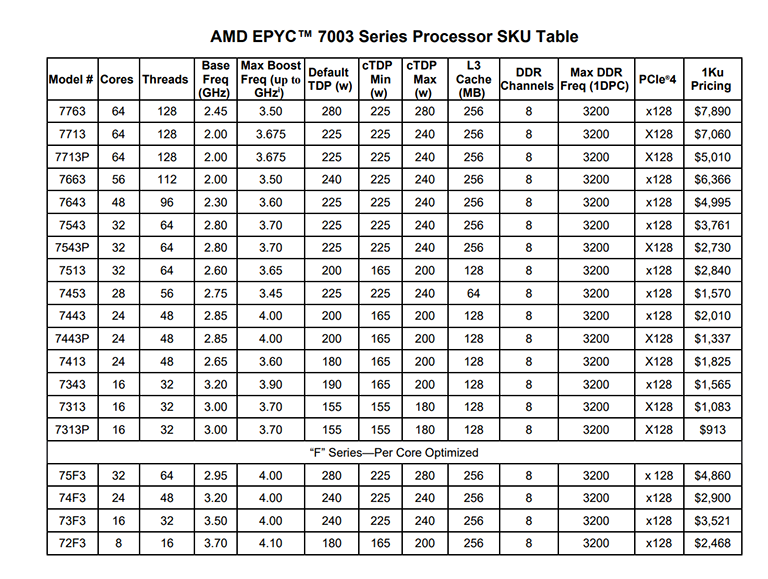

Let's talk about what's really new. AMD is introducing a 56-core, 112-thread processor that has no equivalent in the Rome line-up. With seven of eight cores active in each CCD, it's easy for AMD to hit this number. Similarly, there is now a 28-core, 56-thread chip which, at least on paper, goes up against the 28-core, 56-thread maximum of socketable Intel Xeons. Again, this is a case of activating a set number of cores to suit the purpose. Because of that 28-core requirement, however, the active cores won't be the same in every CCD; we'd hazard it will be four cores active over six CCDs and two active on the remaining two. Priced aggressively at $1,570, albeit with total L3 cache limited to just 64MB, this chip makes AMD's price-to-performance strategy clear.
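Both new core counts fall out of simple per-CCD arithmetic. The sketch below sums active cores across eight CCDs; the 28-core split is our guess from the paragraph above, not a layout AMD has confirmed, and `total_cores_threads` is a hypothetical helper that assumes two threads per core via SMT.

```python
def total_cores_threads(active_per_ccd: list[int]) -> tuple[int, int]:
    """Sum active cores over all CCDs; threads assume 2-way SMT."""
    if any(not 1 <= n <= 8 for n in active_per_ccd):
        raise ValueError("each Zen 3 CCD has between 1 and 8 active cores")
    cores = sum(active_per_ccd)
    return cores, cores * 2


seven_each = [7] * 8            # 56-core part: seven of eight cores in every CCD
guessed_28 = [4] * 6 + [2] * 2  # our speculative layout for the 28-core part

print("56-core part:", total_cores_threads(seven_each))
print("28-core guess:", total_cores_threads(guessed_28))
```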

Do be aware that volume purchasers don't usually pay the list price shown above. That's true for Intel chips, as well, so we can take 1K-unit SRPs as indicative rather than gospel.

Unlike Xeons, however, Epycs have a consistent feature-set across the entire stack. All chips offer 8-channel memory support at DDR4-3200, 4TB of addressable capacity, 128 PCIe 4.0 lanes, high-speed Infinity Fabric, and secure memory encryption. In short, the $913 Epyc 7313P has exactly the same base capability as the $7,890 Epyc 7763. Any chip suffixed with a 'P' is designed for single-processor usage only, which is why it is considerably cheaper than the otherwise identical non-P, dual-socket model.
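The memory specification translates into a theoretical peak bandwidth per socket. This sketch assumes standard 64-bit (8-byte) DDR4 channels and counts only the raw transfer rate; sustained bandwidth in real workloads will be lower. `peak_dram_bandwidth_gbs` is a hypothetical helper.

```python
def peak_dram_bandwidth_gbs(channels: int, mt_per_s: int, bus_bytes: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (decimal gigabytes).

    Each transfer on a 64-bit DDR4 channel moves bus_bytes (8) bytes.
    """
    return channels * mt_per_s * 1e6 * bus_bytes / 1e9


# Eight channels of DDR4-3200, as supported by every chip in the stack.
print(f"{peak_dram_bandwidth_gbs(8, 3200):.1f} GB/s per socket")
```

Since every model shares the same memory configuration, this ceiling is identical from the cheapest 'P' part to the 7763.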

There are no 12-core or 120W models this time around; AMD's thinking is that it already has this base covered with second-generation processors, which will remain in the marketplace alongside third-generation parts, at least for a while.

Depending upon the target environment, per-core software licensing can have a huge impact on total cost of ownership. This is why we see a number of high-frequency parts with what can be termed low core counts. More generally, the range of offerings adequately covers many server deployments, including HPC, database, web serving, software-defined storage, and EDA.
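To see why per-core licensing pushes some buyers towards high-frequency, low-core-count parts, consider CPU price plus licences alone. The $2,000-per-core fee below is purely hypothetical, as is the simplification that licences are the only other cost; real licensing terms vary widely by vendor. CPU prices are the 1K-unit SRPs from the table below, and `cpu_plus_licence_cost` is a hypothetical helper.

```python
from dataclasses import dataclass

LICENCE_PER_CORE_USD = 2_000  # hypothetical per-core software licence fee


@dataclass(frozen=True)
class Chip:
    model: str
    cores: int
    price_usd: int


def cpu_plus_licence_cost(chip: Chip, licence_per_core_usd: float) -> float:
    """CPU list price plus one licence per physical core (simplified)."""
    return chip.price_usd + chip.cores * licence_per_core_usd


for chip in (Chip("EPYC 72F3", 8, 2_468), Chip("EPYC 7763", 64, 7_890)):
    total = cpu_plus_licence_cost(chip, LICENCE_PER_CORE_USD)
    licence_share = chip.cores * LICENCE_PER_CORE_USD / total
    print(f"{chip.model}: ${total:,.0f} total, {licence_share:.1%} of it licences")
```

Under these assumptions, licences dwarf the CPU price in both cases, so a workload that is bound by per-core fees rather than core count favours fewer, faster cores.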

AMD EPYC 7002/7003 Series Processor Configurations

| Model | Cores / Threads | TDP | L3 Cache | Base Clock | Turbo Clock | Price |
|---|---|---|---|---|---|---|
| EPYC 7763 | 64 / 128 | 280W | 256MB | 2.45GHz | 3.50GHz | $7,890 |
| EPYC 7H12 | 64 / 128 | 280W | 256MB | 2.60GHz | 3.30GHz | $6,950 |
| EPYC 7663 | 56 / 112 | 240W | 256MB | 2.00GHz | 3.50GHz | $6,366 |
| EPYC 7643 | 48 / 96 | 225W | 256MB | 2.30GHz | 3.60GHz | $4,995 |
| EPYC 7642 | 48 / 96 | 225W | 256MB | 2.30GHz | 3.30GHz | $4,425 |
| EPYC 7543 | 32 / 64 | 225W | 256MB | 2.80GHz | 3.70GHz | $3,761 |
| EPYC 7542 | 32 / 64 | 225W | 128MB | 2.90GHz | 3.40GHz | $3,400 |
| EPYC 7453 | 28 / 56 | 225W | 64MB | 2.75GHz | 3.45GHz | $1,570 |
| EPYC 7413 | 24 / 48 | 180W | 128MB | 2.65GHz | 3.60GHz | $1,825 |
| EPYC 7402 | 24 / 48 | 180W | 128MB | 2.80GHz | 3.35GHz | $1,783 |
| EPYC 7313 | 16 / 32 | 155W | 128MB | 3.00GHz | 3.70GHz | $1,083 |
| EPYC 7302 | 16 / 32 | 155W | 128MB | 3.00GHz | 3.30GHz | $825 |
| EPYC 7272 | 12 / 24 | 155W | 64MB | 2.90GHz | 3.20GHz | $625 |
| EPYC 7262 | 8 / 16 | 120W | 128MB | 3.20GHz | 3.40GHz | $575 |
| EPYC 72F3 | 8 / 16 | 155W | 256MB | 3.70GHz | 4.10GHz | $2,468 |

Compared with Rome chips of the same core and thread count, Milan generally ups the peak frequency. There's not a lot in it, mind, so most of the gains will come from improvements in IPC. Pricing is a bit higher, too, suggesting AMD's growing confidence in the line-up.
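The frequency and pricing deltas can be checked directly against the table. The sketch below pairs each Milan chip with the Rome part of the same core count; the pairing is our own choice, and the 56- and 28-core Milan parts are left out because they have no Rome equivalent. `pct_change` and `compare` are hypothetical helpers.

```python
# ((Milan model, turbo GHz, price USD), (Rome model, turbo GHz, price USD))
PAIRS = [
    (("EPYC 7763", 3.50, 7890), ("EPYC 7H12", 3.30, 6950)),
    (("EPYC 7643", 3.60, 4995), ("EPYC 7642", 3.30, 4425)),
    (("EPYC 7543", 3.70, 3761), ("EPYC 7542", 3.40, 3400)),
    (("EPYC 7413", 3.60, 1825), ("EPYC 7402", 3.35, 1783)),
    (("EPYC 7313", 3.70, 1083), ("EPYC 7302", 3.30, 825)),
    (("EPYC 72F3", 4.10, 2468), ("EPYC 7262", 3.40, 575)),
]


def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new / old - 1.0) * 100.0


def compare(pairs):
    """Return (milan, rome, turbo % change, price % change) per pair."""
    return [
        (milan, rome, pct_change(m_turbo, r_turbo), pct_change(m_price, r_price))
        for (milan, m_turbo, m_price), (rome, r_turbo, r_price) in pairs
    ]


for milan, rome, turbo_pct, price_pct in compare(PAIRS):
    print(f"{milan} vs {rome}: turbo {turbo_pct:+.1f}%, price {price_pct:+.1f}%")
```

Every pair shows a higher turbo clock and a higher list price for the Milan part, with the 72F3 an outlier on both counts given its frequency-optimised positioning.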

Epycs are clearly high-performance processors first and foremost. There are no sub-155W models listed, and the CCD-based design means eight cores and 16 threads is the minimum configuration. Rival Intel has far more socketable Cascade Lake-based Xeons available - 73 by our count - focused on the 8-to-28-core range.

Anyone following the server world will know that Intel is readying Ice Lake-based Xeons for release in the very near future. They promise more cores, higher IPC and more performance than the incumbent Cascade Lake Xeons, but we'll only truly know how third-generation Epyc fares against its immediate competition when the new Xeons make their debut next month.

For now, however, the key message is that AMD has launched on time with a broad set of drop-in-upgradeable third-generation Epyc processors that offer extra performance mainly through the Zen 3 architecture. Maximum core and thread counts are unchanged and the feature set is broadly the same, so one can expect an extra 10-20 per cent performance over second-generation Epycs on a model-to-model basis.