Epyc 7002 Series 2P Implementation And Models

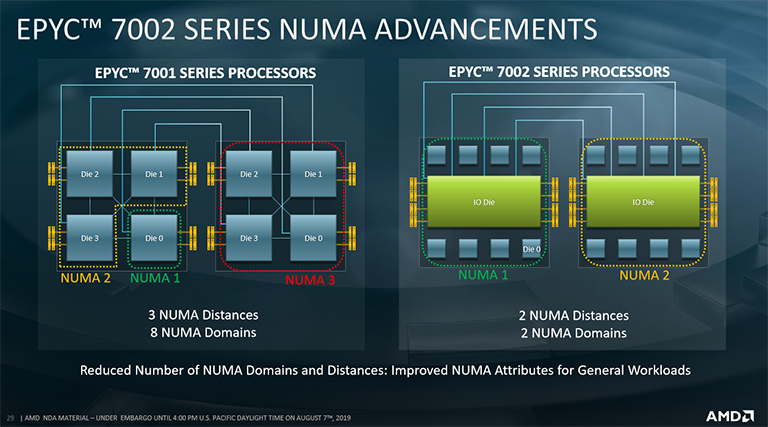

Having a centralised I/O die does indeed help simplify the non-uniform memory access (Numa) topology of the new Epycs. The aforementioned crisscrossing of first-gen Epyc leads to three Numa distances - within the die, die-to-die and chip-to-chip - and eight possible domains in a 2P configuration, so some OSes and schedulers have a hard time coping with the complexity of a 2P Epyc 7001 Series system. As everything now feeds into a central die on Epyc 7002 Series, there is only a single domain per processor, and a maximum of two across a 2P solution.

This design feature ought to make performance more predictable across OSes, especially if the new Epycs are tasked to work in an HPC space, where having to optimise for numerous Numa domains is considered a chore.
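The domain counting above can be made concrete with a small Python sketch that enumerates NUMA nodes as (socket, die) pairs and labels the hop between every pair. It models only the topology described here, four nodes per first-gen package and one per Epyc 7002 package; the labels "local", "same-socket" and "cross-socket" stand in for the text's within-die, die-to-die and chip-to-chip distances, the function names are hypothetical, and no real latencies are implied.

```python
from __future__ import annotations

from itertools import product


def numa_nodes(sockets: int, nodes_per_socket: int) -> list[tuple[int, int]]:
    """Enumerate NUMA nodes as (socket, die) pairs."""
    return [(s, d) for s in range(sockets) for d in range(nodes_per_socket)]


def hop(a: tuple[int, int], b: tuple[int, int]) -> str:
    """Label the distance class between two nodes (a label only, not a latency)."""
    if a == b:
        return "local"
    if a[0] == b[0]:
        return "same-socket"
    return "cross-socket"


def summarise(sockets: int, nodes_per_socket: int) -> tuple[int, set[str]]:
    """Return the node count and the set of distinct distance classes."""
    nodes = numa_nodes(sockets, nodes_per_socket)
    classes = {hop(a, b) for a, b in product(nodes, repeat=2)}
    return len(nodes), classes


# First-gen Epyc 7001: four dies per package, each exposed as its own node.
print("2P Epyc 7001:", summarise(sockets=2, nodes_per_socket=4))
# Epyc 7002: one node per package behind the central I/O die, as described above.
print("2P Epyc 7002:", summarise(sockets=2, nodes_per_socket=1))
```

The first call yields eight nodes and all three distance classes; the second yields two nodes and no same-socket hop at all, which is the simplification the I/O die buys.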

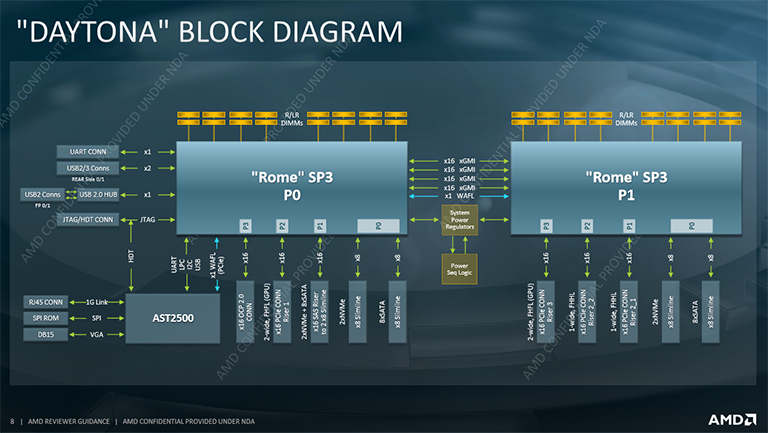

Putting it together, here is how a 2P Epyc 7002 Series solution looks from a high-level viewpoint. AMD still retains the SP3 socket used on first-gen Epyc, meaning these new processors can be dropped into existing infrastructure and work via a BIOS update. Doing so, however, is not ideal, because such a move doesn't take advantage of the new chips' PCIe 4.0 capability that doubles bandwidth. One needs a new, revised motherboard for that.

Each new Epyc still carries 128 PCIe lanes, all of which are exposed in a 1P solution. Actually, there are 130 - note the two x1 WAFL lanes in blue - and on first-gen Epyc these were used to connect two processors together via Infinity Fabric. Now that AMD has simplified the design with a single centralised die, only one of these lanes is needed. That means a 2P setup has a spare, x1 PCIe-capable lane that can be used to connect to the BMC (AST2500). If only a single processor is used, the second lane becomes available for this simple task as well.

Why mention this at all? Because most motherboards supporting first-gen Epyc had to borrow lanes from the main PCIe allocation for BMC duties. The bottom line is that if one buys a 2nd Gen Epyc processor and uses it on a new board, the full 128 PCIe 4.0 lanes are available to high-speed devices, with double the per-lane bandwidth of the first generation. One could question why today's servers need 128 PCIe 4.0 lanes. A few cases come to mind, including super-fast NICs of the 100Gbit variety, but PCIe 4.0 is more a futureproofing measure than something of widespread applicability right now.
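Because PCIe 3.0 and 4.0 both use 128b/130b line encoding, doubling the transfer rate from 8GT/s to 16GT/s doubles usable per-lane bandwidth. The Python sketch below works that through for all 128 lanes and for an x16 slot feeding a hypothetical dual-port 100Gbit NIC; it ignores packet and protocol overhead, so real-world throughput will be somewhat lower.

```python
PCIE_GT_PER_S = {"3.0": 8.0, "4.0": 16.0}  # per-lane transfer rate
ENCODING_EFFICIENCY = 128 / 130             # 128b/130b line code used by both generations


def lane_gbytes_per_s(gen: str) -> float:
    """Usable bandwidth of one lane, one direction, in GB/s (ignores packet/protocol overhead)."""
    return PCIE_GT_PER_S[gen] * ENCODING_EFFICIENCY / 8  # 8 bits per byte


def link_gbits_per_s(gen: str, lanes: int) -> float:
    """Usable bandwidth of a multi-lane link, one direction, in Gbit/s."""
    return lane_gbytes_per_s(gen) * lanes * 8


for gen in ("3.0", "4.0"):
    print(f"PCIe {gen}: {lane_gbytes_per_s(gen) * 128:.1f} GB/s per direction across 128 lanes")

NIC_DEMAND_GBITS = 2 * 100  # hypothetical dual-port 100Gbit NIC with both ports saturated
for gen in ("3.0", "4.0"):
    capacity = link_gbits_per_s(gen, 16)
    print(f"x16 PCIe {gen}: {capacity:.0f} Gbit/s, enough for the NIC: {capacity >= NIC_DEMAND_GBITS}")
```

Under these assumptions an x16 PCIe 3.0 slot falls short of 200Gbit/s per direction while an x16 PCIe 4.0 slot clears it comfortably.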

AMD connects two Epyc 7002 Series chips to each other via Infinity Fabric running over 64 bi-directional lanes. That's a heck of a lot of bandwidth - the per-lane rate rises from 10.7GT/s to a real-world 18GT/s, for 288GB/s of bi-directional bandwidth - and, in an interesting move, certain OEMs will be allowed to repurpose one of the four x16 links as general-purpose PCIe 4.0, ostensibly for even more storage... not that Epyc is anywhere near short in that area to begin with.
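The 288GB/s figure can be checked with back-of-envelope arithmetic. This Python sketch assumes each 18GT/s transfer carries one bit per lane per direction and ignores encoding and protocol overhead. It also models the lane budget if one x16 Infinity Fabric link is repurposed as PCIe, assuming both sockets free their end of that link and leaving the x1 WAFL lanes aside. The function names are hypothetical.

```python
IF_LANES = 64  # four x16 Infinity Fabric links between the two sockets


def fabric_gbytes(lanes: int, gt_per_s: float, bidirectional: bool = True) -> float:
    """Raw socket-to-socket bandwidth in GB/s, assuming 1 GT/s == 1 Gbit/s per lane per direction."""
    one_way = lanes * gt_per_s / 8  # bits -> bytes
    return 2 * one_way if bidirectional else one_way


def platform_pcie_lanes(sockets: int, fabric_links: int, lanes_per_socket: int = 128) -> int:
    """General-purpose PCIe lanes left once x16 socket-to-socket links are carved out."""
    if sockets == 1:
        return lanes_per_socket
    return sockets * (lanes_per_socket - 16 * fabric_links)


print(f"Epyc 7002 2P fabric: {fabric_gbytes(IF_LANES, 18.0):.0f} GB/s bi-directional")
print(f"Same arithmetic at 10.7GT/s: {fabric_gbytes(IF_LANES, 10.7):.1f} GB/s")
print("2P PCIe lanes, four fabric links:", platform_pcie_lanes(2, 4))
print("2P PCIe lanes, three fabric links:", platform_pcie_lanes(2, 3))
```

With all four links in place, a 2P system exposes the same 128 general-purpose lanes as a 1P system; giving up one link per socket would raise that to 160 under this model.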

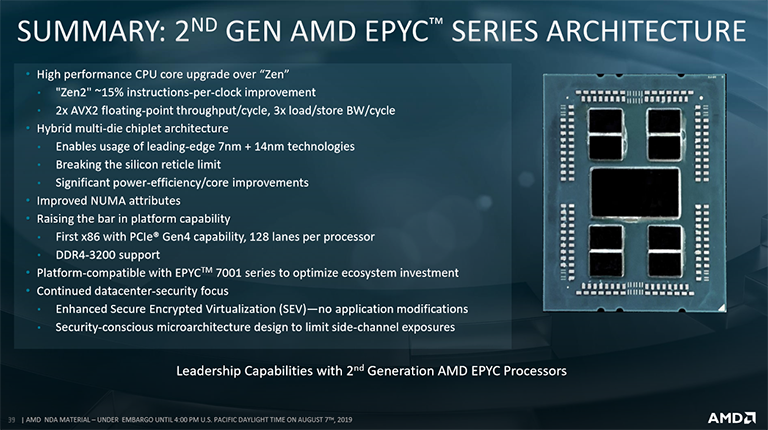

This slide neatly summarises the improvements AMD has made between Epyc generations. It promises an architecture with 15 per cent better IPC and up to double the cores and threads, by dint of a 7nm process; a centralised I/O die that reduces memory-access variability and Numa complexity; and a platform whose PCIe 4.0 support offers twice the bandwidth. That's a lot for a two-year period.

Enough architecture, let's move on to how Epyc 7002 Series is productised.

Models

AMD EPYC 7002 Series Processor Configurations

| Model | Cores / Threads | TDP | L3 Cache | Base Clock | Turbo Clock | Price |
|---|---|---|---|---|---|---|
| EPYC 7742 | 64 / 128 | 225W | 256MB | 2.25GHz | 3.40GHz | $6,950 |
| EPYC 7702 | 64 / 128 | 200W | 256MB | 2.00GHz | 3.35GHz | $6,450 |
| EPYC 7702P | 64 / 128 | 200W | 256MB | 2.00GHz | 3.35GHz | $4,425 |
| EPYC 7642 | 48 / 96 | 225W | 256MB | 2.30GHz | 3.30GHz | $4,775 |
| EPYC 7552 | 48 / 96 | 200W | 192MB | 2.20GHz | 3.30GHz | $4,025 |
| EPYC 7542 | 32 / 64 | 225W | 128MB | 2.90GHz | 3.40GHz | $3,400 |
| EPYC 7502 | 32 / 64 | 180W | 128MB | 2.50GHz | 3.35GHz | $2,600 |
| EPYC 7502P | 32 / 64 | 180W | 128MB | 2.50GHz | 3.35GHz | $2,300 |
| EPYC 7452 | 32 / 64 | 155W | 128MB | 2.35GHz | 3.35GHz | $2,025 |
| EPYC 7402 | 24 / 48 | 180W | 128MB | 2.80GHz | 3.35GHz | $1,783 |
| EPYC 7402P | 24 / 48 | 180W | 128MB | 2.80GHz | 3.35GHz | $1,250 |
| EPYC 7352 | 24 / 48 | 155W | 128MB | 2.30GHz | 3.20GHz | $1,350 |
| EPYC 7302 | 16 / 32 | 155W | 128MB | 3.00GHz | 3.30GHz | $978 |
| EPYC 7302P | 16 / 32 | 155W | 128MB | 3.00GHz | 3.30GHz | $825 |
| EPYC 7282 | 16 / 32 | 120W | 64MB | 2.80GHz | 3.20GHz | $650 |
| EPYC 7272 | 12 / 24 | 120W | 64MB | 2.90GHz | 3.20GHz | $625 |
| EPYC 7262 | 8 / 16 | 155W | 128MB | 3.20GHz | 3.40GHz | $575 |
| EPYC 7252 | 8 / 16 | 120W | 64MB | 3.10GHz | 3.20GHz | $475 |
| EPYC 7232P | 8 / 16 | 120W | 32MB | 3.10GHz | 3.20GHz | $450 |

Product Stack Analysis

AMD is launching 19 Epyc 7002 Series CPUs today, complementing the 14 drop-in Epyc 7001 Series and eight Epyc 3000 Series embedded chips in the market currently.

As before, all of these processors will run in a single-socket system, but those with a P suffix cannot run alongside a second chip on a motherboard. This is why they're cheaper than, but otherwise identical to, their two-way counterparts.
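The saving from choosing a P part over its two-socket twin can be read straight off the table. This Python sketch uses three pairs from the table; `supports_2p` simply encodes the naming rule described here and, like `p_discount`, is a hypothetical helper rather than an AMD tool.

```python
# (two-socket-capable price, single-socket "P" price) in US dollars, from the table.
PAIRS = {
    "7502": (2600, 2300),
    "7402": (1783, 1250),
    "7302": (978, 825),
}


def supports_2p(model: str) -> bool:
    """Naming rule from the text: a trailing 'P' marks a single-socket-only part."""
    return not model.upper().endswith("P")


def p_discount(base: str) -> tuple[int, float]:
    """Dollar saving and percentage saving of the P part versus its 2P twin."""
    price_2p, price_1p = PAIRS[base]
    saving = price_2p - price_1p
    return saving, 100 * saving / price_2p


for base in PAIRS:
    saving, pct = p_discount(base)
    print(f"EPYC {base}P (2P capable: {supports_2p(base + 'P')}) is ${saving} cheaper ({pct:.1f}%)")
```

The discount is not a fixed percentage; it varies noticeably from pair to pair.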

AMD addresses the entire server/workstation market by scaling from eight cores through to 64. Remember the discussion on the previous page regarding how AMD builds these lower-core chips? The amount of a processor's L3 cache provides insight. As each die is home to eight cores and 32MB of cache, AMD tries to use fully-intact dies rather than deactivate cores within each complex.

There are exceptions. The Epyc 7642 appears to use the full complement of eight dies, going by its 256MB of L3 cache, but has, we surmise, six of a possible eight cores working in each die. The $575 Epyc 7262 is also strange because it has so much cache - enough for four dies and 32 cores, like the $3,400 Epyc 7542 - yet carries only eight active cores. That is one heck of an expensive way to segment a product line.
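The inference used here, dividing L3 capacity by 32MB to count dies and then dividing cores by dies, fits in a few lines of Python. It is a sketch of the heuristic only and assumes, as the text does, that every die keeps its full 32MB of L3 even when cores are switched off; `infer_layout` is a hypothetical helper.

```python
L3_PER_DIE_MB = 32  # per the text: each die carries 32MB of L3...
CORES_PER_DIE = 8   # ...and eight cores


def infer_layout(cores: int, l3_mb: int) -> tuple[int, float]:
    """Return (dies, active cores per die), assuming every die keeps its full L3."""
    if l3_mb % L3_PER_DIE_MB:
        raise ValueError("L3 size is not a whole number of dies")
    dies = l3_mb // L3_PER_DIE_MB
    return dies, cores / dies


examples = {"EPYC 7542": (32, 128), "EPYC 7642": (48, 256), "EPYC 7262": (8, 128)}
for model, (cores, l3) in examples.items():
    dies, per_die = infer_layout(cores, l3)
    if per_die == CORES_PER_DIE:
        note = "fully intact"
    else:
        note = f"{CORES_PER_DIE - per_die:g} cores off per die"
    print(f"{model}: {dies} dies, {per_die:g} active cores each ({note})")
```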

Thinking generally about how these implementation choices affect memory population, it makes little sense for servers using Epyc 7002 Series chips with fewer than 24 cores to run with all eight memory channels populated. Such servers are likely to be more cost-sensitive and will reduce their memory footprint accordingly. We can imagine some users choosing the Epyc 7232P and populating only a couple of channels, in the name of TCO, because that chip simply cannot make use of the surfeit of bandwidth an eight-channel setup provides.
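A rough per-core view of memory bandwidth helps explain why trimming channels on low-core-count parts is reasonable. This Python sketch assumes DDR4-3200 DIMMs and a 64-bit channel, and computes theoretical peak figures only; sustained bandwidth is lower in practice.

```python
MT_PER_S = 3200         # assumption: DDR4-3200 DIMMs
BYTES_PER_TRANSFER = 8  # each DDR4 channel is 64 bits wide


def peak_gbytes(channels: int, mt_per_s: int = MT_PER_S) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal)."""
    return channels * mt_per_s * BYTES_PER_TRANSFER / 1000


def per_core_gbytes(channels: int, cores: int) -> float:
    """Peak bandwidth shared evenly across cores."""
    return peak_gbytes(channels) / cores


for channels in (2, 4, 8):
    print(
        f"{channels} channels: {peak_gbytes(channels):.1f} GB/s peak | "
        f"8-core EPYC 7232P: {per_core_gbytes(channels, 8):.1f} GB/s/core | "
        f"64-core EPYC 7742: {per_core_gbytes(channels, 64):.1f} GB/s/core"
    )
```

Even with only two channels populated, the eight-core part gets twice the per-core peak bandwidth of a fully populated 64-core part.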

There's still plenty of choice further up the stack and, as before, multiple Epyc processors share identical core-and-thread counts, differentiated on frequency and TDP. Speaking to power, TDPs rise as more cores and threads are added, as expected. The range-topping Epyc 7742, for example, is rated at 225W, compared to 180W for the first-gen Epyc 7601, but it carries twice as many cores/threads alongside a higher peak speed. The result is an eight-die+IOD SoC weighing in at 32 billion transistors and taking up 1,000mm² across its mixed 7nm+14nm production.
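Dividing rated TDP by core count gives a rough sense of how far the per-core power budget has shrunk between the two flagships. This is a crude sketch: TDP is not measured power draw, and the even split ignores the I/O die and other uncore power.

```python
def watts_per_core(tdp_w: float, cores: int) -> float:
    """Rated TDP divided evenly across cores (ignores the I/O die and uncore power)."""
    return tdp_w / cores


first_gen = watts_per_core(180, 32)   # EPYC 7601
second_gen = watts_per_core(225, 64)  # EPYC 7742
print(f"EPYC 7601: {first_gen:.3f} W/core, EPYC 7742: {second_gen:.3f} W/core")
print(f"Per-core power budget ratio (7742 / 7601): {second_gen / first_gen:.3f}")
```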

To maximise deployment potential across a wide range of servers, each chip has a configurable TDP that modulates frequency according to power. Unlike the others, the top-line 225W parts are not designed to run below specification, and there's an extra 15W of TDP on tap for servers with excellent cooling and airflow.