Google I/O 2017 is currently in full flow. Day one completed and already we have some great headlines from the developer conference. Here's an interesting one about Google's own custom processor hardware. Google has just unveiled a new 'Cloud TPU' and it is said to be much more efficient than Nvidia's latest and greatest Volta-based Tesla V100 in training and inference tasks.

Only last month we saw Google ruffle Nvidia's feathers by benchmarking its in-house design Tensor Processing Unit (TPU) chips against Tesla hardware accelerators. Nvidia quickly shot back with its own AI and deep learning tests using a Pascal architecture solution - Google had benchmarked against a Kepler-based GPU accelerator. That did indeed show a better balance of results for Nvidia but also illustrated the more generalist processing capability issue with the Tesla: far greater power consumption for its competitive performance. Just over a week ago we saw Nvidia's latest Tesla V100 accelerator launched, featuring the Volta GV100 GPU, created with dedicated Tensor Cores in the silicon for the first time.

As CNBC reports, the reveal of the Google Cloud TPU last night is "potentially troubling news for Nvidia, whose graphics processing units (GPUs) have been used by Google for intensive machine learning applications." In its most recent financial report Nvidia pointed to fast growth in revenues from AI and deep learning, and even cited Google as a notable customer. Now Google has indicated that it will use its own TPUs more in its own core computing infrastructure. Google is also creating the TensorFlow Research Cloud, a cluster of 1,000 Cloud TPUs that we will make available to top researchers for free.

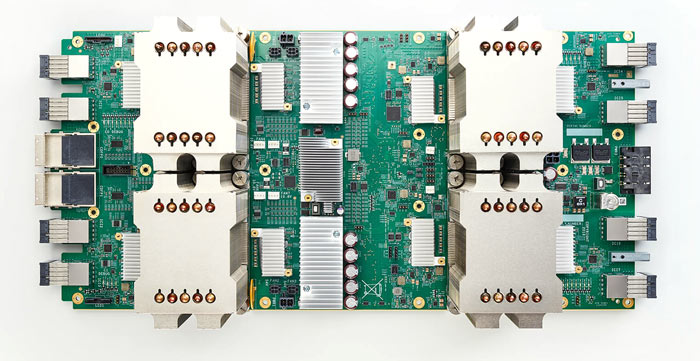

So how do Google's new Cloud TPUs perform? Google says each TPU module, as pictured above, can deliver up to 180 teraflops of floating-point performance. That module features 4x Cloud TPU chips (45 teraflops each). These devices are designed to work in larger systems, for example a 64-TPU module pod can apply up to 11.5 petaflops of computation to a single ML (machine learning) training task. Roughly comparing a Cloud TPU module against the Tesla V100 accelerator, Google wins by providing six times the teraflops FP16 half-precision computation speed, and 50 per cent faster 'Tensor Core' performance. Inference performance of the new Cloud TPU has yet to be shared by Google.

64-device Cloud TPU Pod

Last but not least Google is happy to help with software and will bring second-generation TPUs to Google Cloud for the first time as Cloud TPUs on GCE, the Google Compute Engine. It will facilitate the mixing-and-matching of Cloud TPUs with Skylake CPUs, NVIDIA GPUs, and all of the rest of our infrastructure and services to build the best ML system. Furthermore, Cloud TPUs "are easy to program via TensorFlow, the most popular open-source machine learning framework," says Google.