Yesterday Google kicked off its annual I/O developer conference at the Shoreline Amphitheatre in Mountain View, California. Quite a few interesting news items came out of the keynote and the first of the event's three days. Below we look more closely at the news regarding new Tensor Processing Units (TPUs), Android P, Google Assistant, Google Maps, and Google Lens.

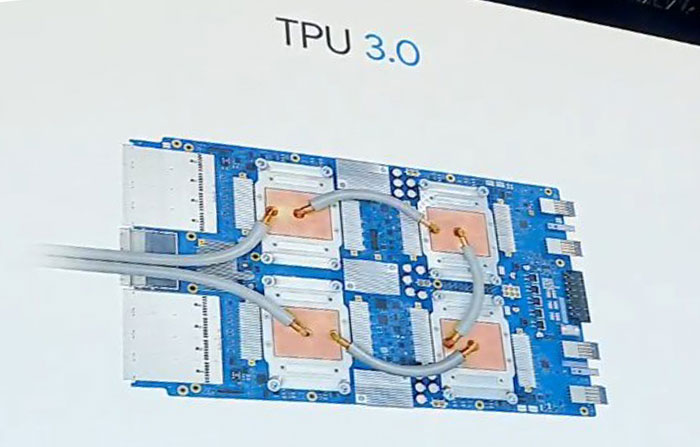

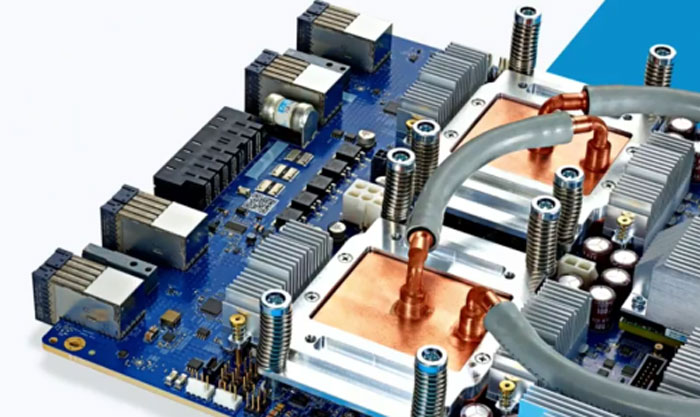

In the keynote yesterday Google announced that it has developed a new generation of its Tensor Processing Unit, which will be behind a lot of the AI improvements in its key apps, like those mentioned in the intro. The TPU 3.0 will help Google "do things like recognize words people are saying in audio recordings, spot objects in photos and videos, and pick up underlying emotions in written text," reports CNBC. This makes the chips a significant competitor to Nvidia GPUs in AI and deep learning, it is noted.

We don't have much detail about the new TPUs at this time, just some headline claims. For example, Google CEO Sundar Pichai said that the new compute pods, server units stuffed with third-generation TPUs, are now "eight times more powerful than last year's version -- well over 100 petaflops". Inside the pods the TPUs are liquid cooled, says the source.

It is expected that TPU 3.0 processing power will be made available to third parties via the Google Cloud service.

Android P first came to developers back in early March. A public beta has now been released, and it supports quite a wide range of smartphones, not just Google devices:

- Pixel 2 / Pixel 2 XL

- Pixel / Pixel XL

- Essential Phone

- Sony Xperia XZ2

- OnePlus 6 (coming soon)

- Xiaomi Mi Mix 2S

- Nokia 7 Plus

- Oppo R15 Pro

- Vivo X21

HEXUS covered the headline features of Android P in the earlier report, linked above, if you wish to see a recap.

Google Assistant is getting smarter and more convenient to use. A minor but useful tweak is the continued conversation feature: after the initial 'Hey Google' or 'OK Google' wake-up prompt, you can follow up with further questions without repeating the phrase. This more natural interaction method will roll out in the coming weeks.

This summer Google Assistant will be coming to Google Maps. Maps is a very popular application, and Google wants it to provide better recommendations for users, be more personalised based upon their likes and dislikes, and be relevant to their surroundings. Moving into AR, Google Lens will combine your camera with Street View and Maps data to do things like identify buildings, text, and even clothes, posters, books and dog breeds.

Google Photos is already quite clever, automatically making collages, movies and stylised photos, and its organisational ability (thanks to image recognition) makes it an improvement on the standard gallery on my Android phone, for sure. The app's smarts are going to be developed further to include quick fixes for rotation, brightness correction and colourisation.

Google Smart displays, like those above, will launch in July and be powered by Google Assistant and YouTube. These are clearly competitors to Amazon Alexa devices.