GPU Boost v2.0

Temperature is the target

We covered GPU Boost in detail in our GTX 680 review, and the basic workings remain the same in v2.0. The card takes board power into account and, if there's sufficient headroom to do so (usually when a title doesn't stress the GPU to the limit), the chip increases both voltage and core frequency along a predetermined curve. As an example, our GTX 680 sample, which clocks in at a default 1,006MHz, ran at 1,097MHz and 1,110MHz core speeds in Batman: Arkham City and Battlefield 3, respectively.
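As a rough illustration of the v1.0 approach, the sketch below picks the fastest step on a frequency/voltage curve whose estimated board power stays under a power target. It is not NVIDIA's algorithm: the intermediate curve values, the `base_power_w` input (the power a title draws at base clock) and the f × V² scaling rule are all assumptions. Only the 1,006MHz and 1,110MHz clocks and the GTX 680's 170W figure come from this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoostStep:
    mhz: int      # core clock for this point on the curve
    volts: float  # GPU voltage paired with that clock


# Hypothetical frequency/voltage curve, lowest step first. Only the
# 1,006MHz base and the 1,110MHz top step echo figures from the text.
CURVE = (
    BoostStep(1006, 1.062),
    BoostStep(1045, 1.100),
    BoostStep(1084, 1.137),
    BoostStep(1110, 1.175),
)


def estimated_power_w(step: BoostStep, base_power_w: float) -> float:
    """Scale the power drawn at the base step by f * V^2 (a common dynamic-power approximation)."""
    base = CURVE[0]
    return base_power_w * (step.mhz / base.mhz) * (step.volts / base.volts) ** 2


def pick_step_v1(base_power_w: float, power_target_w: float = 170.0) -> BoostStep:
    """v1.0-style choice: the fastest step whose estimated board power fits the target."""
    chosen = CURVE[0]  # never drop below the base clock in this sketch
    for step in CURVE:
        if estimated_power_w(step, base_power_w) > power_target_w:
            break  # power rises along the curve, so later steps won't fit either
        chosen = step
    return chosen


# A lighter title (less power at base clock) leaves more headroom to boost.
for load_w in (120.0, 130.0, 160.0):
    print(load_w, pick_step_v1(load_w).mhz)
```

Note that temperature appears nowhere in this selection, which is exactly the gap v2.0 sets out to close.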

As a first attempt, GPU Boost works well, enabling games to pull 170W from a GTX 680. However, NVIDIA says that, after careful evaluation, it concluded GPU Boost was shackling the GPU's potential whenever the card was running cool. Remember, GPU Boost v1.0 takes only available power, voltage and frequency into account; there's no provision for temperature, which is often one of the main limiting factors in a successful overclock, and cool cards are usually able to run at higher speeds than hot ones.

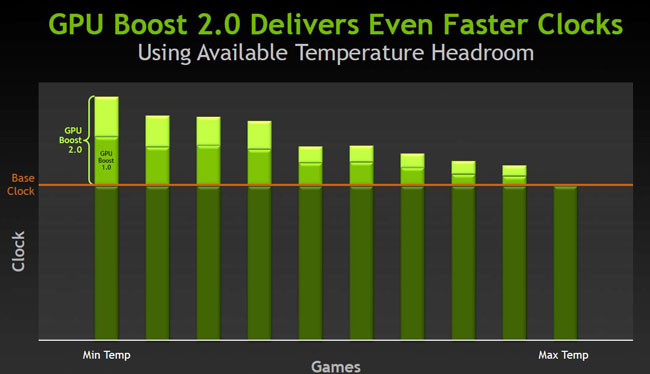

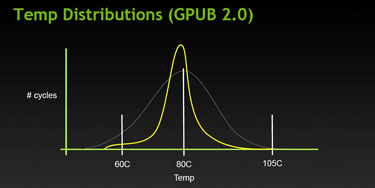

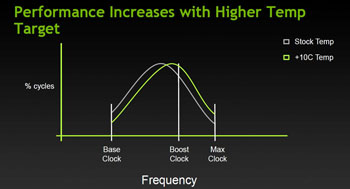

v2.0's main focus moves away from setting the GPU's frequency with reference to a desired power target. Instead, it uses the more dynamic GPU temperature as the control. The GPU still climbs a predefined frequency and voltage curve, but when the chip is cool it can run faster than it would under GPU Boost v1.0, as shown by the picture above. The default target temperature is 80°C, though the user can modify it (higher for extra performance, lower for quietness), and NVIDIA tunes the fan-speed profile to remain quiet at that threshold temperature.
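The temperature-led control can be pictured as a loop that nudges the GPU one step up or down the curve depending on where it sits relative to the target. This is a simplified sketch under stated assumptions: the bin indices, the 2°C hysteresis band and the one-step-per-tick rule are ours, and a real card still respects its power limit, which the sketch leaves out.

```python
def next_bin_v2(current_bin: int, gpu_temp_c: float, top_bin: int,
                target_c: float = 80.0, hysteresis_c: float = 2.0) -> int:
    """Return the curve bin to use on the next control tick.

    Bins index a predefined frequency/voltage curve (0 = base clock).
    The hysteresis band is an assumption that stops the clock flip-flopping.
    """
    if gpu_temp_c > target_c and current_bin > 0:
        return current_bin - 1  # above the target: step down the curve
    if gpu_temp_c < target_c - hysteresis_c and current_bin < top_bin:
        return current_bin + 1  # comfortably cool: climb one step
    return current_bin          # near the target or at a curve end: hold


# Simulated temperature readings as a game warms the card up.
step = 0
for temp in (55.0, 62.0, 70.0, 77.0, 79.0, 81.0, 79.5):
    step = next_bin_v2(step, temp, top_bin=10)
    print(f"{temp:.1f}°C -> bin {step}")
```

Passing `target_c=90.0` lets the same readings keep climbing for longer, which is the behaviour a raised temperature target is meant to unlock.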

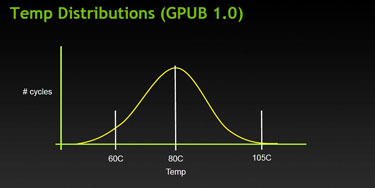

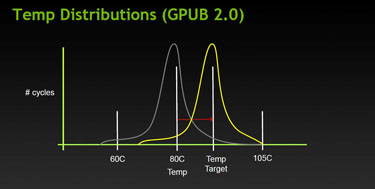

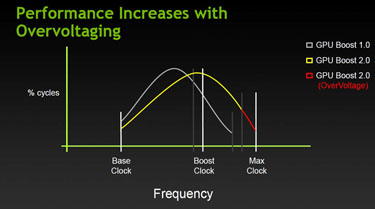

The overriding point here is that the desired temperature, rather than GPU Boost v1.0's typical board power, now dictates maximum board power and becomes the focal point for GPU Boost v2.0. The frequency hike is tuned around this target temperature to a much greater degree than in the first-generation version, as the slides above show.

Increasing the default temperature target by 10°C, to 90°C, pushes the frequency/voltage curve to the right (read: higher). You'll sacrifice some quietness, as the fan ramps up harder once past 80°C, but, cleverly, the fan-speed profile can also be 'shifted' to the right to keep the card quiet. The trade-off then becomes one of accepting higher board and GPU temperatures in return for more in-game performance, but bear in mind that the GPU has a design threshold of 95°C, so you won't want to push the temperature target too far.
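One way to picture the fan-profile shift is to slide every point of a temperature-to-duty curve right by the same offset as the temperature target. The curve points below are invented for illustration, and forcing full fan speed at 95°C is our own safety assumption rather than documented NVIDIA behaviour; only the 80°C default, the 90°C example and the 95°C threshold come from the text.

```python
DEFAULT_TARGET_C = 80.0
DESIGN_LIMIT_C = 95.0  # GPU design threshold quoted in the text

# Hypothetical default fan profile: (GPU temperature °C, fan duty %).
DEFAULT_FAN_CURVE = ((30.0, 30.0), (60.0, 40.0), (80.0, 55.0), (90.0, 85.0))


def shifted_fan_curve(new_target_c, curve=DEFAULT_FAN_CURVE):
    """Slide every point right by however far the temperature target moved."""
    if new_target_c >= DESIGN_LIMIT_C:
        raise ValueError("temperature target must stay below the 95°C design threshold")
    offset = new_target_c - DEFAULT_TARGET_C
    return tuple((temp + offset, duty) for temp, duty in curve)


def fan_duty(temp_c, curve):
    """Linearly interpolate fan duty, forcing full speed at the design limit (assumption)."""
    if temp_c >= DESIGN_LIMIT_C:
        return 100.0
    if temp_c <= curve[0][0]:
        return curve[0][1]
    for (t0, d0), (t1, d1) in zip(curve, curve[1:]):
        if temp_c <= t1:
            return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)
    return curve[-1][1]


quiet = shifted_fan_curve(90.0)
# At the same 80°C, the shifted profile spins the fan more slowly.
print(fan_duty(80.0, DEFAULT_FAN_CURVE), fan_duty(80.0, quiet))
```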

Users wanting to further increase the maximum board power also need to push the power slider, found in third-party utilities, to the right. For example, the TITAN's 250W TDP corresponds to board power at 100 per cent. Increase this to, say, 106 per cent and available (maximum) board power jumps to 265W. And, labouring the temperature-centric point further, watercooled cards, which should run cool, are primed to run at super-high clocks that weren't attainable under GPU Boost v1.0.
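The slider arithmetic is a straight percentage of TDP. This tiny helper reproduces the 250W/106 per cent example; the function name is ours, and it knows nothing about the highest percentage a given utility or BIOS will actually permit.

```python
def max_board_power_w(tdp_w: float, power_target_pct: float) -> float:
    """Board power available at a given power-slider setting (100 per cent = TDP)."""
    return tdp_w * power_target_pct / 100.0


TITAN_TDP_W = 250.0  # from the text

for pct in (100, 106):
    print(f"{pct}% -> {max_board_power_w(TITAN_TDP_W, pct):.0f}W")
```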

The one key takeaway from this section is that GPU Boost v2.0 will extract the maximum performance from the GPU with reference to a defined temperature target. So, should an add-in card partner produce a top-class cooler that keeps the GPU 15°C cooler than a competitor's card, the better-cooled card is likely to run at higher clocks, because both cards in this example will keep boosting frequency until the GPU hits 80°C.
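To see why the better cooler wins, here is a deliberately crude steady-state model in which GPU temperature is ambient plus thermal resistance times board power. Every number below (ambient, bins, power per bin, the two thermal-resistance values standing in for the two coolers) is hypothetical; only the 80°C target comes from the text, and real cards settle dynamically rather than through this one-shot calculation.

```python
AMBIENT_C = 25.0
TARGET_C = 80.0

# Hypothetical boost bins: (core MHz, board power drawn at that bin in W).
BINS = ((900, 180.0), (940, 195.0), (980, 210.0), (1020, 225.0), (1060, 240.0))


def steady_temp_c(power_w: float, r_th_c_per_w: float) -> float:
    """Crude steady-state model: ambient plus thermal resistance times power."""
    return AMBIENT_C + r_th_c_per_w * power_w


def settled_clock_mhz(r_th_c_per_w: float) -> int:
    """Highest bin whose predicted temperature stays at or under the target.

    The lowest bin is returned as a floor even if it already runs hot.
    """
    best = BINS[0][0]
    for mhz, power_w in BINS:
        if steady_temp_c(power_w, r_th_c_per_w) > TARGET_C:
            break
        best = mhz
    return best


reference = settled_clock_mhz(0.30)  # hypothetical stock cooler
premium = settled_clock_mhz(0.24)    # hypothetical better cooler
print(reference, premium)
```

Both cards chase the same 80°C target; the one that sheds heat more effectively simply reaches it several bins later.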

Push me further

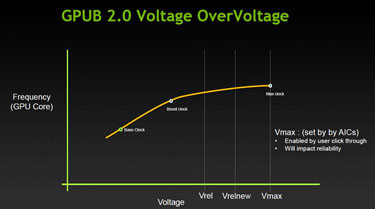

Drilling down some more, remember how the original GPU Boost lets the user crank up the power target and also increase the voltage to some degree? This upper-voltage figure is now governed more by temperature than by the power target, with the maximum standard voltage rising from Vrel (GPU Boost v1.0) to Vrelnew. v2.0 also goes further by allowing the TITAN to be over-volted past the usual GPU Boost v1.0 limits, up to Vmax. However, add-in board partners may limit this over-volting (adventurous ones will offer higher voltages), and users need to understand that over-volted cards have a greater chance of dying prematurely.
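The layered voltage limits can be expressed as a small decision function. This is a sketch, not a description of real firmware: the voltages are invented, the `partner_cap` and `user_opted_in` parameters are hypothetical stand-ins for the board partner's BIOS limit and the user's opt-in, and we read Vmax as the over-volting ceiling.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VoltageLimits:
    v_rel: float      # GPU Boost v1.0 standard ceiling (Vrel)
    v_rel_new: float  # raised v2.0 standard ceiling (Vrelnew)
    v_max: float      # over-volting ceiling (Vmax)


def voltage_ceiling(limits: VoltageLimits,
                    partner_cap: Optional[float],
                    user_opted_in: bool) -> float:
    """Highest voltage the boost logic may request.

    partner_cap is None when the board partner has locked over-volting;
    otherwise it is the partner's chosen limit, clamped so it never exceeds Vmax.
    """
    if partner_cap is None or not user_opted_in:
        return limits.v_rel_new
    return max(limits.v_rel_new, min(partner_cap, limits.v_max))


# Hypothetical voltages for illustration only.
limits = VoltageLimits(v_rel=1.150, v_rel_new=1.162, v_max=1.200)
print("v1.0 ceiling:", limits.v_rel)
print("v2.0, locked card:", voltage_ceiling(limits, None, True))
print("v2.0, adventurous partner:", voltage_ceiling(limits, 1.250, True))
```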

Conjecturing somewhat, we're likely to see GPU Boost v2.0 brought to existing cards through a BIOS update; there seems to be no technical reason why 6-series GeForces can't run the newer boosting capability. We'll be looking at GPU Boost v2.0 in a real-world sense when the full performance review goes live.

Overclock my screen, TITAN

TITAN also introduces a Display Overclocking feature, used in conjunction with Adaptive V-Sync. Most displays refresh at 60Hz, and it's advisable to run with V-Sync on to reduce the tearing and artifacting that result from a mismatch between GPU output and display refresh rate. Adaptive V-Sync helps minimise this problem but can't eliminate it completely. Users wishing to run higher refresh rates alongside V-Sync can now attempt to force the display to run at 80Hz instead of 60Hz through Display Overclocking, enabling higher framerates without unsightly tearing. As we understand it, the GPU sends the request to the monitor, but there's no guarantee that it will work on every display.
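The benefit is easiest to see as frame-time arithmetic: with V-Sync on, the panel shows at most one new frame per refresh, so raising the refresh rate raises the framerate ceiling and shrinks the time budget per frame. This simplified sketch uses our own function names and a hypothetical 95fps game, and it ignores what happens when the GPU can't keep up with the refresh rate.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time between display refreshes: the budget for each synced frame."""
    return 1000.0 / refresh_hz


def vsync_fps_ceiling(render_fps: float, refresh_hz: float) -> float:
    """With V-Sync on, the panel can show at most one new frame per refresh."""
    return min(render_fps, refresh_hz)


for hz in (60, 80):
    print(f"{hz}Hz: {frame_budget_ms(hz):.1f}ms per frame, "
          f"a 95fps game shows at most {vsync_fps_ceiling(95, hz)}fps")
```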