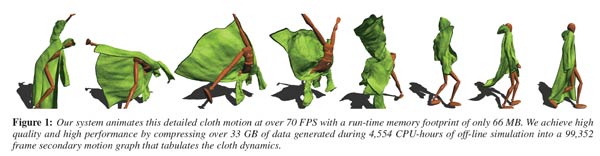

Researchers demonstrated "Near-exhaustive Precomputation of Secondary Cloth Effects" at SIGGRAPH 2013, and a research paper and a video detailing the findings are available. The researchers, from Carnegie Mellon University and the University of California, Berkeley, took an "entirely data-driven" approach, with the animation possibilities precomputed and stored.

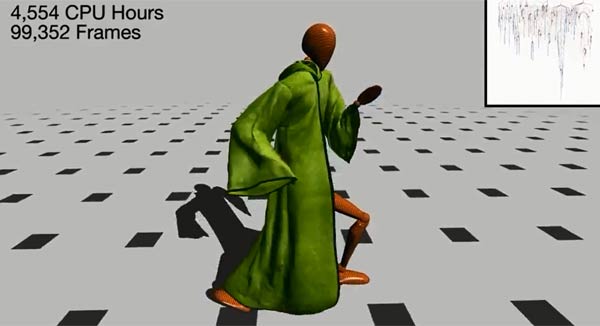

The team challenged the assumption that precomputing "everything" about a complex space is impossible. Several thousand CPU-hours were used to calculate the cloth animation and "perform a massive exploration of the space of secondary clothing effects on a character animated through a large motion graph." The precomputed cloth animation could then be used to dress a character that was moved in real time through a variety of poses and actions.
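The run-time side of a fully precomputed approach can be pictured as a table lookup rather than a simulation. The toy Python sketch below is not the researchers' code or data format: the class name `PrecomputedCloth`, the `(previous clip, clip, frame)` key and the placeholder vertex data are all hypothetical, chosen only to illustrate the general idea of simulating cloth poses offline and merely fetching them as the character moves through its motion graph.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical key: (previous clip, current clip, frame index within current clip).
# Including the previous clip is a crude, assumed stand-in for motion history,
# since the cloth at a given frame depends on how the character got there.
Key = Tuple[Optional[str], str, int]
Vertices = List[Tuple[float, float, float]]


class PrecomputedCloth:
    """Toy lookup table of cloth poses, filled offline and read at run time."""

    def __init__(self) -> None:
        self._frames: Dict[Key, Vertices] = {}

    def store(self, prev_clip: Optional[str], clip: str, frame: int,
              verts: Vertices) -> None:
        # Offline phase: save the output of an (expensive) cloth simulation.
        self._frames[(prev_clip, clip, frame)] = verts

    def lookup(self, prev_clip: Optional[str], clip: str, frame: int) -> Vertices:
        # Run-time phase: no simulation, just a dictionary lookup.
        # Raises KeyError if this combination was never precomputed.
        return self._frames[(prev_clip, clip, frame)]


if __name__ == "__main__":
    db = PrecomputedCloth()
    # Placeholder "simulation" output: a single vertex per frame.
    for f in range(3):
        db.store("idle", "walk", f, [(0.0, 1.0 - 0.1 * f, 0.0)])
    print(db.lookup("idle", "walk", 2))
```

Keying on only the previous clip is an assumed simplification: secondary cloth motion depends on the character's recent history, so any practical system needs a much richer notion of state, which hints at why exploring this space is so computationally expensive.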

Hoodies are particularly problematic

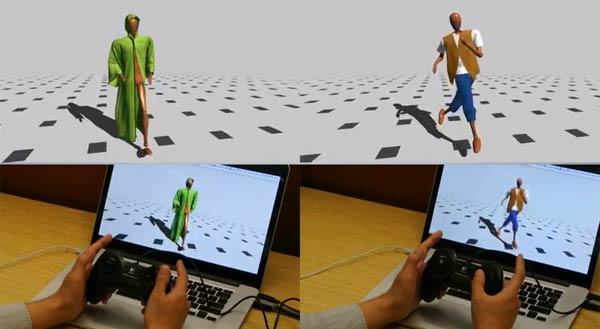

The team appeared satisfied with the cloth animation quality shown in the video, for which precomputation took more than 4,500 CPU-hours and produced nearly 100,000 frames of animation. The tens of gigabytes of resulting data could be compressed to just tens of megabytes. The team therefore considers it reasonable to deliver this "high-resolution, off-line cloth simulation for a rich space of character motion" as part of an interactive application or video game.

It is the "rapid growth in the availability of low-cost, massive-scale computing capability" that has allowed the team to challenge the preconception that precomputing almost everything is impractical. Adrien Treuille, associate professor of computer science and robotics at Carnegie Mellon, explained this to TechCrunch. "The criticism of data-driven techniques has always been that you can’t pre-compute everything," he said. "Well, that may have been true 10 years ago, but that’s not the way the world is anymore."

The researchers' test system, an Apple MacBook Pro laptop with a Core i7 CPU, easily handled animation playback at 70 fps or faster while responding to joypad input. The researchers noted that the roughly 70 MB run-time memory requirement for this animation is "likely too large to be practical for games targeting modern console systems (for example, the Xbox 360 has only 512 MB of RAM)". However, the requirement is "modest in the context of today’s modern PCs", and the same should hold for next-generation consoles.