Several years ago a small team of researchers at Google began working on a simulation of the human brain. To test the system, the researchers trained it to recognise faces in images that may or may not contain faces, at various sizes and rotations. The system can also be trained to recognise higher-level concepts such as cat faces and human bodies.

Google’s neural network computer system uses “a cluster with 1,000 machines (16,000 cores)”. The researchers say the new recognition system “obtains 15.8% accuracy in recognizing 20,000 object categories from ImageNet, a leap of 70% relative improvement over the previous state-of-the-art.” The system was also set loose on 10 million random 200x200 pixel thumbnail images taken from YouTube content, where it successfully identified and grouped cat faces.
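To put the “70% relative improvement” figure in context, here is a rough back-of-the-envelope sketch in Python. It assumes “relative improvement” means (new − old) / old, which is my reading of the quote and not something the quote spells out. The function name is made up for illustration.

```python
def baseline_from_relative_improvement(new_accuracy, relative_improvement):
    """Back out the earlier accuracy, assuming new = old * (1 + relative_improvement)."""
    return new_accuracy / (1.0 + relative_improvement)

# Figures quoted above: 15.8% accuracy, a 70% relative improvement.
previous = baseline_from_relative_improvement(15.8, 0.70)
print(f"Implied previous state of the art: about {previous:.1f}%")
```

Under that assumption the earlier best result would have been a little over 9%, so the jump is large in relative terms even though 15.8% sounds modest on its own.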

The Google team was led by Stanford University computer scientist Andrew Y. Ng and the Google fellow Jeff Dean. Dr Dean explained the system: “We never told it during the training, 'This is a cat,' it basically invented the concept of a cat. We probably have other ones that are side views of cats.” Dr Ng added: “The idea is that instead of having teams of researchers trying to find out how to find edges, you instead throw a ton of data at the algorithm and you let the data speak and have the software automatically learn from the data”. This approach is meant to be closer to how biological brains learn to recognise things.

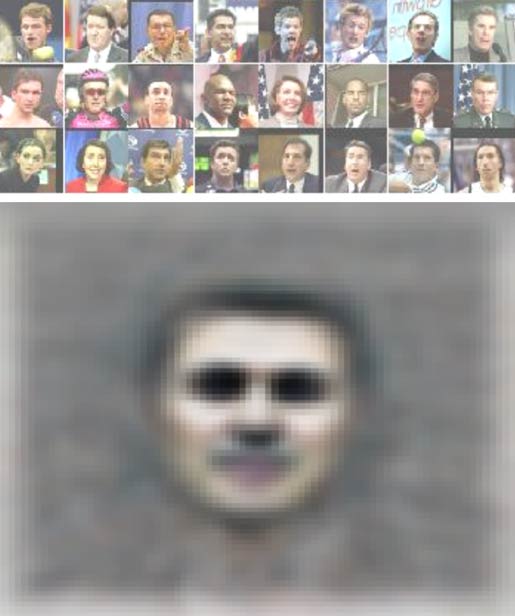

The optimal face stimulus

If you look at the human face recognition example above, it’s easier to understand what the system has learned. The computer has distilled many images of human faces into “The optimal stimulus according to numerical constraint optimization.” In the paper, the picture for the human face neuron seems a lot more distinct than those for the cat face and human body neurons, which I’ve also taken a screenshot of below.
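For anyone wondering what finding an “optimal stimulus” by constrained optimisation might look like, here is a toy sketch in Python. It is not the researchers’ code. It assumes the constraint is that the input image has a fixed length (norm of 1), and it swaps the real deep network for a single made-up neuron (`tanh` of a weighted sum) so the idea fits in a few lines. All the function names and weights are hypothetical.

```python
import math

def neuron(x, w):
    """Toy neuron: tanh of a weighted sum of the input values."""
    return math.tanh(sum(wi * xi for wi, xi in zip(w, x)))

def neuron_grad(x, w):
    """Gradient of the toy neuron's output with respect to the input x."""
    slope = 1.0 - neuron(x, w) ** 2
    return [slope * wi for wi in w]

def normalize(x):
    """Rescale x to unit length (the assumed constraint)."""
    norm = math.sqrt(sum(xi * xi for xi in x))
    return [xi / norm for xi in x]

def optimal_stimulus(w, x0, steps=500, lr=0.5):
    """Projected gradient ascent: step uphill, then project back to unit length."""
    x = normalize(x0)
    for _ in range(steps):
        g = neuron_grad(x, w)
        x = normalize([xi + lr * gi for xi, gi in zip(x, g)])
    return x

w = [0.3, -0.4, 0.0]                      # hypothetical learned weights
x_star = optimal_stimulus(w, x0=[1.0, 1.0, 1.0])
print([round(v, 3) for v in x_star])      # approaches w / ||w|| = [0.6, -0.8, 0.0]
```

For this toy neuron the answer is simply the weight vector scaled to unit length. In a deep network there is no such shortcut, which is presumably why the image has to be found numerically.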

The optimal cat face and human body stimulus

The 16,000-core computer network is still dwarfed by the capability of the human brain. As the researchers put it, “It is worth noting that our network is still tiny compared to the human visual cortex, which is a million times larger in terms of the number of neurons and synapses”. The research paper will be presented at ICML 2012: 29th International Conference on Machine Learning, Edinburgh, Scotland, June 2012.