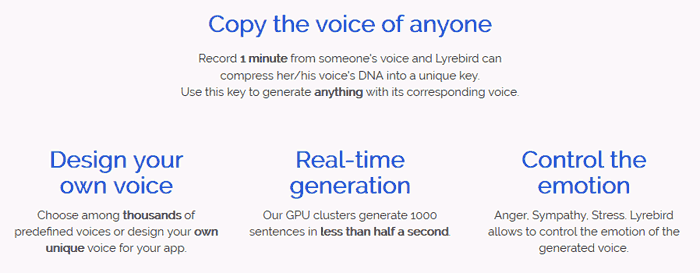

Montreal-based Lyrebird has published a microsite showcasing its voice imitation algorithms. The AI startup claims it has developed an API that will let you synthesize speech in anyone's voice from just a minute-long recording of that person. The speaker doesn't need to have said any of the words contained in the generated audio. Furthermore, you will be able to select emotions for the speech, such as anger, sympathy, or stress.

In the main demo from the showcase page at Lyrebird.ai, embedded above, you can hear a synthesized voice switch between imitations of Barack Obama, Donald Trump, and Hillary Clinton. There are plenty of other examples to check out. This is pretty impressive for real-time generation and voice-imitation switching, though in some segments it's easy to detect the 'robotic' sound of the speech.

As TNW reminds us, Adobe showcased similar voice-mimicking tech last year under the name Project VoCo. However, Adobe's tech required about 20 minutes of the user's speech for its mimicking tasks, as well as its software package installed on the client system. Lyrebird needs only a minute-long recording and will shortly launch a cloud-based API service that lets you upload audio and download your synthesized speech.

Lyrebird says it sees a variety of applications for its tech: "for personal assistants, for reading of audio books with famous voices, for connected devices of any kind, for speech synthesis for people with disabilities, for animation movies or for video game studios."

If, reading this, you are thinking of fakery, voice forgery, and other ethical questions: Lyrebird has published an ethics page where it discusses the validity of voice recordings used as evidence. However, outside of the courts and similar legal considerations, this tech will still have plenty of potential for mischief-making.

The technology behind the Lyrebird API relies on deep learning models developed at the MILA lab of the University of Montréal. Lyrebird's three founders are currently PhD students at the university.