How often have you sighed when watching Hollywood crime fighters zoom in to a blurred / grainy / pixelated image to reveal sharper and clearer details? Some level of suspension of disbelief is necessary to enjoy sci-fi but current day crime dramas should stay clear…

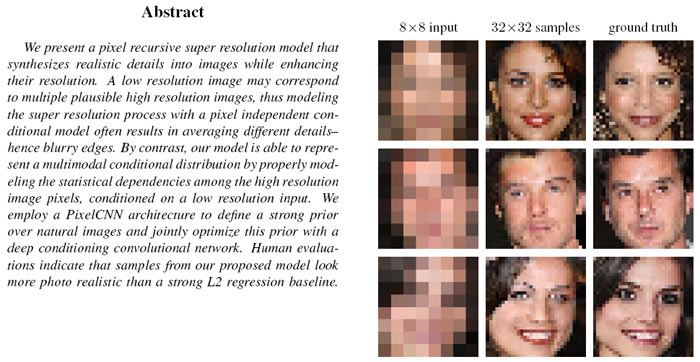

Now the Google Brain team might be onto something similar to the above fantasy technology with its 'Pixel Recursive Super Resolution'. For a quick understanding of the synthesising model capabilities have a look at the image matrix and abstract below.

Somehow, almost incredibly, the pixel recursive super resolution model takes the 8x8 pixel inputs shown above (left column) and produces the 32x32 pixel samples shown alongside (middle column). The accuracy is rather good when compared against the 'ground truth' unpixelated original image in the final column.

The Google Brain team behind the model explains that previous models have averaged various details to produce blurry indistinct images, little more useful than the pixelated input. In contrast the pixel recursive super resolution model uses two neural net processes in concert to create a sharp and recognisable image. To create these images the model must make hard decisions about the type of textures, shapes, and patterns present at different parts of an image.

First of all, the model maps the pixels in the lo-res sample to a similar high resolution one using a learned 'conditional network' ResNet process. This narrows down options for the next neural net process to work on. The second 'PixelCNN network' process looks to add detail to the pixellated image based upon similar source images with similar pixel locations. When both neural net processes have run the image data is combined to provide the 32x32 samples in the main image above.

Currently the 'Pixel Recursive Super Resolution' model is trained up for working on cropped celeb faces and hotel bedrooms.