AMD has announced the AMD Multiuser GPU at VMworld 2015 in San Francisco. AMD claims this is the "world's first hardware-based GPU virtualization solution." The system allows up to 15 users to share a single AMD GPU. It is based on the open industry standard SR-IOV and is designed to "overcome the limitations of software-based virtualization" offered by other vendors.

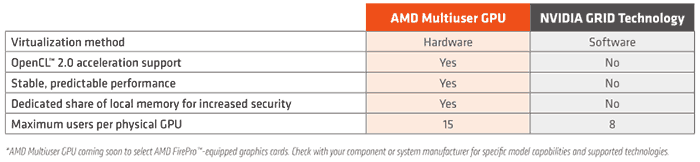

AMD Multiuser GPU is said to enable "consistent, predictable, and secure performance from your virtualized workstation with the world’s first hardware-based virtualized GPU solution." Users sharing the GPUs will enjoy workstation-class experiences with full ISV certifications and local desktop-like performance. With AMD's hardware virtualization, users won't be limited in what they can do in the virtualised environment, as they have full access to native AMD display drivers for OpenGL, DirectX, and OpenCL acceleration.

Thanks to its hardware-based model, AMD says that its system is much more secure than rival virtualization systems. It claims that it is "extremely difficult for a hacker to break in at the hardware level," whereas software-based virtualization can be exposed or breached to give unauthorised access to guest VMs.

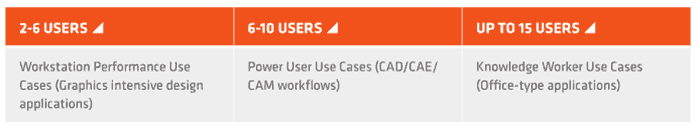

As mentioned in the intro, a single GPU can serve up to 15 client users. For more intensive uses, such as graphics design, fewer users are supported per GPU. AMD made a chart of typical scenarios, below. In any use case, no single user can hog the whole GPU.

AMD says that Multiuser GPU is easy to set up. A compact and efficient hypervisor driver provides the UI to implement and configure the AMD Multiuser GPU. It works in environments using VMware vSphere/ESXi 5.5 and up, with support for remote protocols such as Horizon View, Citrix XenDesktop, Teradici Workstation Host Software, and others. AMD Multiuser GPU is "coming soon".

While AMD doesn't mention the rival Nvidia GRID technology directly in its references to 'other software-based virtualization' systems, at least one chart does; I've embedded it below. The latest Nvidia GRID 2.0 virtualization tech doubled its maximum users per server, so it might compare better now.