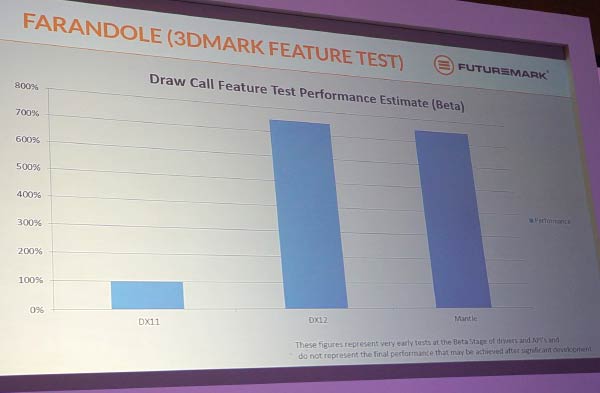

One of the key tests in the HEXUS graphics card review suite is Futuremark's 3DMark. This synthetic benchmark is updated quite often, but we hear that a significant update is coming in the not-too-distant future. It will include 'Farandole', a test that compares the performance of the DirectX 12 and Mantle APIs.

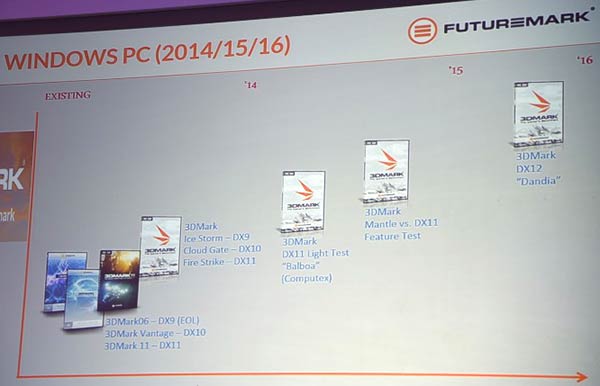

According to a 3DMark roadmap shown at AMD's recent 'Future of Compute' event, the DirectX 12 vs Mantle API comparison tests will arrive in a version of 3DMark called 'Dandia', to be released next year. It is understood that both DX12 and Mantle can handle at least 7.5 times as many draw calls as DX11. New tools that measure the performance of these APIs at their limits will therefore be useful until we see a good selection of 'real world' applications and games that use them.

Although 2016 is still a relatively long way off, Futuremark showed a slide of an early 'Farandole' test, which will be part of the 'Dandia' software release. The slide (above) puts the estimated draw call feature test performance of both DX12 and Mantle at about 7.5 times that of DX11. If you can't read the small print in the slide, it says: "These figures represent very early tests at the Beta Stage of drivers and APIs and do not represent the final performance that may be achieved after significant development."

It's interesting to see these draw call tests in the wake of the problems with Ubisoft's Assassin's Creed: Unity. During its squirming, Ubisoft said that the graphics performance of the game was being "adversely affected by certain AMD CPU and GPU configurations". However, other sources thought the nub of the problem was the game issuing "tens of thousands of draw calls -- up to 50,000 and beyond," causing a juddery experience even on the best-kitted-out PCs running DirectX 11. So Ubi could have created a perfectly smooth gaming experience using the currently available AMD Mantle API...
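To put the quoted figures in perspective, here is a rough back-of-the-envelope sketch in Python. It only does arithmetic on numbers mentioned in this article (50,000 draw calls, a 7.5x throughput ratio); the 30 and 60 fps targets and the function names are our own illustrative assumptions, and the calculation ignores everything else a CPU does in a frame. It is not a measurement of any real game or driver.

```python
def per_call_budget_us(draw_calls: int, target_fps: float) -> float:
    """Microseconds available per draw call if call submission
    consumed the entire frame time (a deliberately generous assumption)."""
    frame_time_us = 1_000_000 / target_fps
    return frame_time_us / draw_calls


def equivalent_dx11_load(draw_calls: int, throughput_ratio: float = 7.5) -> float:
    """Number of DX11 draw calls that would cost about the same CPU time,
    assuming the new API really sustains `throughput_ratio` times as many
    calls (7.5 is the early estimate quoted from the slide)."""
    return draw_calls / throughput_ratio


if __name__ == "__main__":
    calls = 50_000  # figure quoted for Assassin's Creed: Unity
    for fps in (30, 60):  # hypothetical frame rate targets
        budget = per_call_budget_us(calls, fps)
        print(f"{fps} fps: {budget:.3f} microseconds per draw call")
    print(f"Comparable DX11 load: about {equivalent_dx11_load(calls):.0f} calls")
```

In other words, if the early 7.5x estimate held up, 50,000 draw calls under DX12 or Mantle would cost roughly what a few thousand cost under DX11, which is why a dedicated overhead test is of interest.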

Back to the Futuremark tests. The roadmap shows that 3DMark Sky Diver was codenamed 'Balboa' before its release, which indicates that the names given are codenames rather than final public names. On the subject of possible bias, Futuremark told WCCFTech that "AMD, Intel, Microsoft, NVIDIA …all have the opportunity to inspect the source code and suggest changes. As a result, we're confident that the 3DMark API Overhead feature test will be the fairest way to compare these new APIs."