Interfacing with Precision XOC

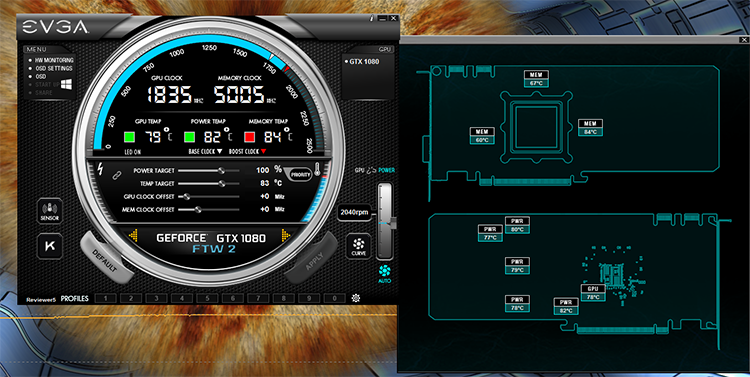

The way to put maximum stress on the card, more so than with games, is to run a program like Furmark. After 10 minutes or so, the GPU gets close to 80°C while the left and top memory blocks remain relatively cool, at around 65°C. The block closer to the VRMs becomes much warmer, even exceeding the GPU temperature, while the six power sensors all report similar figures. What's interesting is that the right-hand fan does run asynchronously - sometimes a little slower and sometimes a little faster - than the left-hand fan designated for the GPU. And once you remove the load by closing the application, the GPU cools down more quickly than either memory or power, meaning the second fan runs at a higher RPM for longer.

Not as hot as you would expect

We also ran a couple of regular games - Deus Ex: Mankind Divided and Doom - to see how the overall temperature landscape changed under a lighter load. The GPU temperature dropped to 69°C while memory peaked at 63°C and power at 60°C. What's telling here is that the memory and power temperatures are substantially lower than with Furmark. Either the heatsink is doing a fantastic job of keeping them in check, or temperatures for those components aren't as much of a concern.

You may think that having asynchronous fans would cause some level of turbulence and more noise. It just so happens that we have the original GeForce GTX 1080 FTW, clocked at the same frequencies, on hand for comparison purposes. Both cards boosted to near-identical speeds, as you would expect, though the FTW2's peak in-game temperature was 3°C lower. What's more, with both cards enclosed in our 'quiet' NZXT S340 gaming chassis and powered by a 1,000W PSU whose fan doesn't turn on, the original FTW's noise profile peaked at 34.2dB while the FTW2, with asynchronous fan support, came in at 32.6dB. We couldn't readily detect the difference in speed between the FTW2's two fans, with both cards producing what's best described as a low hum.
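To put the two noise readings in context, here is a minimal sketch of the arithmetic, assuming the figures are ordinary sound pressure levels in decibels. The function names are our own, for illustration only.

```python
def db_difference(louder_db: float, quieter_db: float) -> float:
    """Gap between two sound-level readings, in dB."""
    return louder_db - quieter_db


def pressure_ratio(delta_db: float) -> float:
    """Sound-pressure ratio implied by a dB gap (20 * log10 convention)."""
    return 10 ** (delta_db / 20)


def power_ratio(delta_db: float) -> float:
    """Sound-power ratio implied by a dB gap (10 * log10 convention)."""
    return 10 ** (delta_db / 10)


# Readings quoted in the review: original FTW vs FTW2.
gap = db_difference(34.2, 32.6)
print(f"gap: {gap:.1f} dB")
print(f"pressure ratio: {pressure_ratio(gap):.2f}")
print(f"power ratio: {power_ratio(gap):.2f}")
```

A gap of 1.6dB works out to roughly 1.2 times the sound pressure, which is a small difference. Perceived loudness is a separate matter and is not modelled here.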

Thoughts

EVGA has realised that predicating cooling solely on the temperature of the latest GeForce GPUs is not the best way forward. The energy-efficient nature of the 10-series GPUs means that, sometimes, the hottest-running components are memory and power regulation, so having some reporting on them makes sense. In a broad context this is what the new iCX range does. Allied to a new, improved cooler and a custom PCB featuring nine additional sensors and a safety fuse - off the back of the card-burning issues reported last November, no doubt - iCX splits the fans' cooling workload by focussing on either the GPU or memory/power.
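The idea of splitting the fans' workload can be sketched in a few lines. This is a rough illustration under our own assumptions, not EVGA's firmware: the `FanCurve` class, the linear curve and every threshold below are hypothetical. One fan follows the GPU sensor, the other follows the hottest of the memory and power sensors.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class FanCurve:
    """Hypothetical linear fan curve; all thresholds are illustrative."""
    idle_temp_c: float = 40.0   # at or below this, run at min_duty
    max_temp_c: float = 90.0    # at or above this, run at max_duty
    min_duty: float = 20.0      # percent
    max_duty: float = 100.0     # percent

    def duty(self, temp_c: float) -> float:
        """Interpolate fan duty (percent) for a temperature in Celsius."""
        if temp_c <= self.idle_temp_c:
            return self.min_duty
        if temp_c >= self.max_temp_c:
            return self.max_duty
        fraction = (temp_c - self.idle_temp_c) / (self.max_temp_c - self.idle_temp_c)
        return self.min_duty + fraction * (self.max_duty - self.min_duty)


def fan_duties(
    gpu_temp_c: float,
    memory_temps_c: Sequence[float],
    power_temps_c: Sequence[float],
    curve: FanCurve,
) -> Tuple[float, float]:
    """Return (gpu_fan_duty, memory_power_fan_duty).

    The GPU fan tracks the GPU sensor alone; the second fan tracks the
    hottest memory or power sensor, so the two can differ in speed.
    """
    hottest_aux = max(list(memory_temps_c) + list(power_temps_c))
    return curve.duty(gpu_temp_c), curve.duty(hottest_aux)


# Made-up readings for the moments after load is removed: the GPU has
# cooled faster than the three memory and six power sensors.
gpu_fan, aux_fan = fan_duties(50.0, [62.0, 63.0, 70.0], [66.0] * 6, FanCurve())
print(gpu_fan, aux_fan)
```

With these made-up readings the second fan is asked for a higher duty than the GPU fan, in line with the behaviour we saw once Furmark was closed.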

The move is merely interesting at this juncture, but given that EVGA produces chassis, power supplies and CPU coolers, just how iCX will tie into the company's wider product range is more intriguing. Our basic results have shown that iCX is a better cooler than the already-capable ACX, and appreciating that it will cost $30 or so more at retail from the end of February, it makes the most sense on the GTX 1070 and GTX 1080 GPUs. Who knows, you may see it on a GTX 1080 Ti sooner rather than later.
