After years of development, throwing-in the towel (Larrabee) and starting again, Intel has at last revealed its first entry into massively parallel comuting, the Xeon Phi Coprocessor.

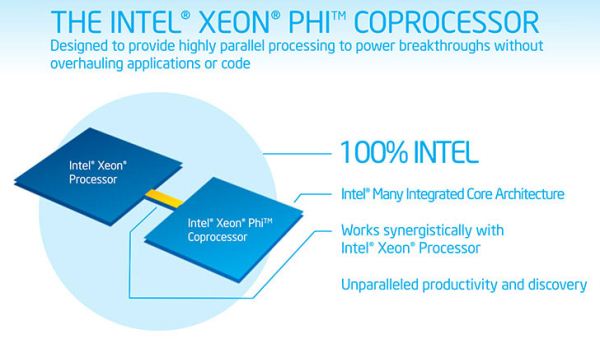

The firm is being rather sketchy on the fine details at this stage. We know that the Phi will contain at least 50+ cores, that they'll be manufactured on a 22nm process and that on-board RAM will begin at 8GB GDDR5, beyond this, little is known about the hardware other than a few architectural hints. The firm is claiming performance of around one teraflop of real-world double-precision, FP64, floating-point number-crunching power.

To place this claim into perspective, NVIDIA's current high-end Tesla offering provides a double-precision peak of 665 gigaflops, though NVIDIA's upcoming K20 range, based on a modified GK110 Kepler design, is expected to achieve around two teraflops. Bear in mind however, that NVIDIA's figures are theoretical and Intel's real-world, so we may have to wait until benchmarking to know the true performance of these devices.

It's suspected that the Xeon Phi processors we be based on enhanced Pentium 1 cores (P54C) with additional vector hardware, which, with the device capable of 512-bit SIMD calculations, suggests a 16-wide ALU in each core. The die itself is expected to be rather large for an Intel product.

What's perhaps most interesting is that the Xeon Phi will run its own linux-based operating system, which Intel claims will be of particular benefit to cluster users. This means that the Xeon Phi can run as both a standalone and coprocessor device, a one-up for Intel against the likes of NVIDIA and AMD.

We expect the Xeon Phi to hit the market later this year, where we're eagerly awaiting to see how it faces-off against NVIDIA's K20 Tesla.