Performance

So now that we know more about what makes Epyc tick - lots of cores, lots of threads, lots of bandwidth, and lots of IO - the fundamental question is how well it performs against Intel Xeons of the same cost.

Answering this question is somewhat difficult because Intel is transitioning its own line-up from Broadwell-based Xeon v4 processors to Skylake-infused parts debuting very soon. Of course, AMD can only compare with what's on the market, but it was at pains to point out that first-generation Epyc has been built to outperform current Xeons and be competitive against whatever Intel has up its sleeve in the near future.

The ideal way to benchmark server chips would be to take, say, the 50 most common workloads in a datacentre environment - with a mix of off-the-shelf and proprietary code - and run them across price-equivalent Epyc and Xeon chips.

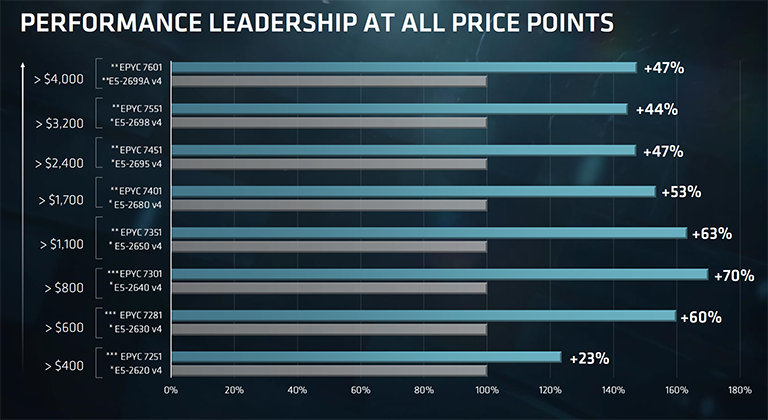

AMD, however, presented us with numbers based on the industry-standard SPECint and SPECfp benchmarks, compiled with a standard GCC compiler that neither company has tuned. Intel will doubtless claim that its own compiler offers better results, while AMD will counter that forthcoming optimisations should put Epyc in a better light, so GCC-based benchmarks level the playing field somewhat.

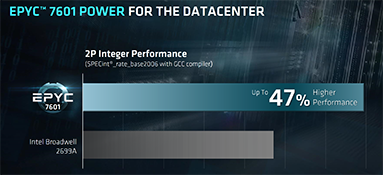

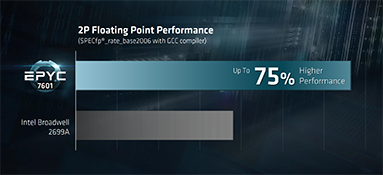

What we see is that the top-line Epyc chip offers, on average, 47 per cent more integer performance and 75 per cent more floating-point performance than the best Xeon v4 chip today, the E5-2699A. AMD's chip has 32 cores and 64 threads while Intel's has 22 and 44, respectively, so AMD obviously has a sheer compute advantage. Epyc also does well because its eight-channel memory architecture can stretch its legs, especially in floating-point operations; Intel has only half the amount of bandwidth on tap, remember.
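Normalising AMD's claimed uplifts by core count gives a rough feel for where the advantage comes from. This is a back-of-the-envelope sketch that assumes performance scales linearly with cores and ignores clock-speed differences between the two chips, so treat the output as indicative only.

```python
# Figures quoted above: AMD's claimed SPEC uplifts and each chip's core count.
EPYC_CORES, XEON_CORES = 32, 22
INT_UPLIFT, FP_UPLIFT = 1.47, 1.75  # 47 and 75 per cent more performance

# Assumption: throughput scales linearly with core count; clocks are ignored.
core_ratio = EPYC_CORES / XEON_CORES
int_per_core = INT_UPLIFT / core_ratio  # integer uplift left after cores
fp_per_core = FP_UPLIFT / core_ratio    # floating-point uplift left after cores

print(f"core ratio:         {core_ratio:.3f}")
print(f"int per-core ratio: {int_per_core:.3f}")
print(f"fp per-core ratio:  {fp_per_core:.3f}")
```

On these assumptions the integer lead is almost entirely explained by Epyc's extra cores (about 1.01x per core), while the floating-point result leaves a per-core surplus of roughly 20 per cent, which is consistent with, though not proof of, the memory-bandwidth advantage.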

It must be noted that SPEC is a highly optimised benchmark that scales very well across multiple cores. It's also very efficient on non-uniform memory access (Numa) architectures - Epyc has eight Numa domains across the chip. In other words, SPEC is less sensitive to how a processor fetches data from memory, whether from the memory local to each die or from memory on the far side of the chip. Caveats aside, AMD makes a clear case for performance leadership on a dollar-for-dollar basis.

Based on aggregate SPEC performance, with pricing factored in, AMD claims leadership at every price point. Comparing $4,000 processors is interesting, but the volume server CPU market sits around the $1,000 mark, and here AMD reckons it has a massive 60 per cent performance lead. Bear in mind that this is compute performance only; other benefits, such as higher IO, are not taken into account.

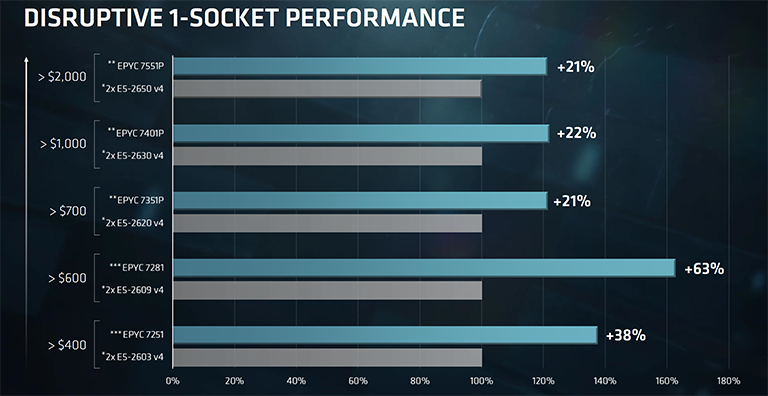

How about systems where a single Epyc can be used in place of two Xeons? Thanks to its extra compute performance, Epyc wins everywhere, according to AMD's own data. This slide is important because some Xeons are deployed in 2P configurations not for pure performance but, rather, to increase the available IO. Epyc has 128 PCIe lanes as standard.
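The IO argument comes down to simple arithmetic. Each Xeon E5 v4 processor provides 40 PCIe 3.0 lanes from the CPU itself, so a 2P Xeon box offers 80 CPU lanes against 128 from a single Epyc. This sketch counts CPU lanes only; lanes hanging off Intel's chipset are deliberately left out.

```python
# CPU-attached PCIe lanes per socket (chipset lanes excluded).
EPYC_LANES = 128
XEON_E5_V4_LANES = 40

two_xeons = 2 * XEON_E5_V4_LANES       # a typical 2P Xeon v4 server
extra = EPYC_LANES - two_xeons          # lanes a 1P Epyc has over that 2P box

print(f"one Epyc: {EPYC_LANES} lanes, two Xeon E5 v4: {two_xeons} lanes")
print(f"extra lanes from a single Epyc: {extra}")
```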

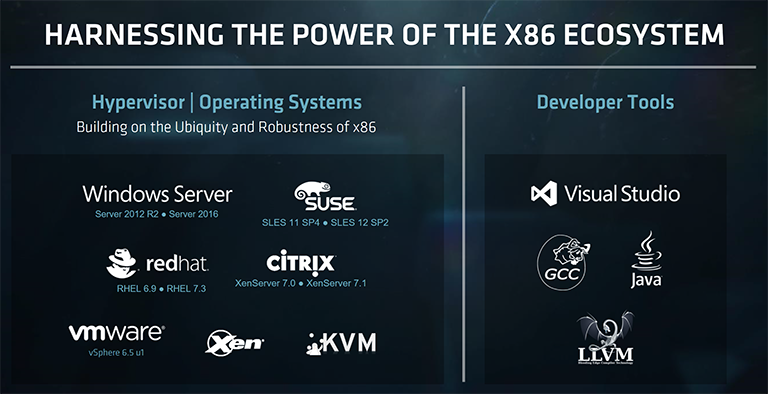

Having a cutting-edge architecture and performance hegemony is only one part of the server challenge. Ensuring that the operating system and hypervisor guys have optimised popular software is also key. AMD doesn't have the kind of financial clout that Intel possesses, especially with respect to optimisation, so we'll be watching how well popular OSes and workloads perform on Epyc from day one.

Key takeaways

Make no mistake, Epyc is AMD's most important CPU launch in well over a decade. Whilst having Ryzen on the desktop is a step in the right direction, AMD has practically no market share in the server space - most estimates put it as low as two per cent.

The higher average selling prices of server chips represent a significant revenue and profit opportunity for AMD and, starting from near zero, the only way is up.

However, producing the large dies needed for monolithic designs becomes increasingly difficult as more cores and cache are added, because bigger dies mean lower yields. AMD has instead chosen to use multiple smaller dies to enable higher yields. Zen, the architecture behind Epyc, was designed primarily with the server space in mind.
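A common first-order way to see why smaller dies help is the textbook Poisson yield model, Y = exp(-A x D), where A is die area and D is defect density. This is not AMD's own yield data: the defect density and die areas below are hypothetical, and the sketch ignores packaging cost, wafer-edge losses and the salvaging of partly defective dies.

```python
import math


def poisson_yield(area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under a simple Poisson model."""
    return math.exp(-area_cm2 * defects_per_cm2)


# Hypothetical inputs, chosen for illustration only.
DEFECT_DENSITY = 0.2                  # defects per cm^2 (assumed)
SMALL_DIE_AREA = 2.0                  # cm^2, one of four dies in a package (assumed)
MONOLITHIC_AREA = 4 * SMALL_DIE_AREA  # one die with the same total silicon

small = poisson_yield(SMALL_DIE_AREA, DEFECT_DENSITY)
big = poisson_yield(MONOLITHIC_AREA, DEFECT_DENSITY)

# Any four good small dies can be packaged together, so a defect scraps only
# a quarter of the silicon; the monolithic die must be flawless as a whole.
print(f"small-die yield:      {small:.1%}")
print(f"monolithic-die yield: {big:.1%}")
```

With these made-up numbers, roughly two thirds of small dies come out clean against about a fifth of the monolithic ones. The trade-off is that the four dies must then talk to each other over a package-level interconnect.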

Epyc achieves its Intel-beating core and thread counts through that multi-chip module (MCM) architecture, in which four Zen-based dies are connected to one another. Such an approach only works if the inter-die connection is super-fast and low-latency, which is why AMD places great store in its in-house-developed Infinity Fabric.
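Why the fabric matters can be sketched with a simple weighted average. If a workload's memory accesses are spread evenly over all four dies' memory, only one access in four is die-local and the rest cross the fabric. The latencies below are hypothetical placeholders, not measured Epyc figures.

```python
# Hypothetical latencies for illustration; not measured Epyc figures.
LOCAL_NS = 90.0    # access to memory attached to the requesting die (assumed)
REMOTE_NS = 140.0  # access that crosses the inter-die fabric (assumed)
DIES = 4           # four dies, each with its own memory channels


def average_latency(local_fraction: float) -> float:
    """Expected memory latency for a given share of die-local accesses."""
    return local_fraction * LOCAL_NS + (1.0 - local_fraction) * REMOTE_NS


# A Numa-unaware workload with data spread evenly over every die's memory
# finds its data locally only 1/DIES of the time.
unaware = average_latency(1.0 / DIES)
# A Numa-aware workload that keeps its data on the local die.
aware = average_latency(1.0)

print(f"Numa-unaware: {unaware:.1f} ns")
print(f"Numa-aware:   {aware:.1f} ns")
```

The gap between the two results shrinks as REMOTE_NS approaches LOCAL_NS, which is exactly what a fast, low-latency interconnect is meant to achieve.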

In keeping with a balanced design that provisions for plenty of IO, AMD equips Epyc with 128 lanes of PCIe that can run a whole host of storage or graphics options, handily beating Intel's current generation. It does so even though Epyc's SoC design forgoes the separate platform controller hub (PCH) that Intel uses to expand storage connectivity.

And that balance also requires heaps of memory bandwidth. Each die has its own dual-channel memory, aggregating to eight channels running at up to 2,666MT/s. Compute, IO, memory and power efficiency are the key drivers of server performance, and AMD appears to have a solid all-round design in Epyc.
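The channel count translates into peak theoretical bandwidth as channels x transfer rate x 8 bytes, since each standard DDR4 channel is 64 bits wide (ECC bits excluded). This sketch uses the 2,666MT/s figure quoted above; sustained bandwidth in real workloads will be lower.

```python
# Peak theoretical DRAM bandwidth = channels x transfer rate x bytes per transfer.
BYTES_PER_TRANSFER = 8  # one 64-bit DDR4 channel, ECC bits excluded


def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int) -> float:
    """Return peak bandwidth in GB/s (10**9 bytes per second)."""
    return channels * mega_transfers_per_s * 1e6 * BYTES_PER_TRANSFER / 1e9


epyc = peak_bandwidth_gbs(channels=8, mega_transfers_per_s=2666)     # whole package
per_die = peak_bandwidth_gbs(channels=2, mega_transfers_per_s=2666)  # one die

print(f"Epyc package: {epyc:.1f} GB/s")
print(f"Per die:      {per_die:.1f} GB/s")
```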

Yet we still approach Epyc with some caution. Intel has yet to release its next-generation Xeons, which also promise more of everything. And we don't yet know how well Epyc's MCM approach holds up in workloads that are not Numa-optimised, which might expose weaknesses that a single monolithic chip, as Intel builds, does not have.

For example, SQL databases are inherently not Numa-optimised, so getting into the likes of Amazon Web Services and its clients will be difficult. Intel, too, can do more to leverage the software-defined storage market.

And, of course, AMD has a rich history of producing compelling technology that, for one reason or another, doesn't have the market-share impact it deserves. This is an area where Intel's deep pockets and relationships with OEMs and datacentre owners may prove to be key.

However, having spent a couple of days at the Epyc launch, we're left in no doubt that AMD's Epyc has some serious performance game. Let's now wait and see if the server space plays ball.