Introduction

If you're into the subject enough and have the analysis skills, you can probably make a good case for a GeForce family tree that starts before the brand existed, with Riva TNT providing the basis for a lineage that's seen NVIDIA through to 7th generation 'Force and serious success with the likes of the GeForce 7800 GTX. Little bits of the old always show up in the new, NVIDIA taking the Good Stuff™ and putting it into new chips, helping to make Better Stuff™.

And why not, eh? There's no point throwing out hundreds of millions of dollars of R&D investment and forcing your chip architects to look directly into the MiB Neuralizer before you start on the next one. Except, of course, when you're faced with the next evolution of programmable shading, which involves no less than the single biggest inflection point in the history of consumer graphics, and possibly computing as a whole. The Microsoft Windows Vista behemoth/juggernaut/goliath/other-massive-assed-thing is just about to careen into planet PC, big time. It brings with it the 9th generation of DirectX (known as the Game SDK way back when!), named (intuitively) DirectX 10, which houses the 3D component called Direct3D 10 (D3D10).

Shiny new D3D10 accelerators are thus the next big thing on the PC graphics radar, the new API changes and future plans requiring a shift in the way you go about building a programmable shading architecture to service it. It's always really been on the cards that NVIDIA would get there first, dropping new silicon that gets the job done before (and thus ready for) the launch of Vista into the consumer space. Thing is, as I sit and type this for you to read, Vista hasn't been released to manufacturing (although it's close) and thus D3D9 is still the big dog in terms of graphics APIs, snugly plugged into many a Windows XP PC.

Some eejit once said that any new graphics architecture must run all existing codes better than anything that precedes it, before it gets down to the job of running the new stuff, or words to that general effect (if I quote him directly he'll only get a bigger head). Said eejit is right, too. The world of PC graphics dictates that backwards compatibility is mandatory in hardware, if not always in software as we're seeing with Vista. So this first generation of D3D10-supporting parts must also slide into D3D9-based Windows XP PCs and do the job there as well, as gamers cross the transitional divide from one OS to the other.

Hell, there are some gamers who'll buy the first gen of D3D10 parts and run nothing but D3D9 codes on them for the entirety of the lifetime the board sees service. Therefore this intro starts to make some sense. If you hadn't figured it by now, NVIDIA's next gen graphics architecture takes its first curtain bow today. It supports D3D10 of course, but timing (and availability of a damn driver) dictates we stick to Windows XP and D3D9. And if you've been paying the requisite attention you'll realise that there's a good chance the new architecture is pretty much brand new, with no -- OK, OK, very little -- historical throwback to NV chips of yore.

So we get the awesome task of abusing the new silicon to tell you what's up in this first chapter of the next generation of PC graphics. And that means we concern ourselves with a chip codenamed G80, Windows XP and D3D9, and a bunch of old and new games. The ride will require you to pay a bit of attention here and there, which we unashamedly don't apologise for, but then this is probably the most complex piece of mass-market silicon ever engineered.

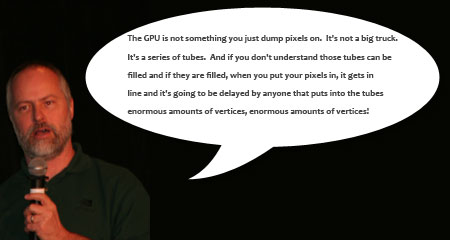

So, without further ado, let's talk about Kirk, Tamasi, Lindholm, Oberman et al.'s bunch of tubes...