Prescott is upon us!

One of Intel's chief aims with the Pentium 4 architecture was to ensure that it had considerable longevity through excellent scalability. The NetBurst architecture, a collective term that encompasses some of the processor's strengths such as Hyper-Threading, 800MHz system bus, and 512KB of L2 Advanced Transfer Cache, has allowed the Northwood CPU to easily outpace the earlier Willamette model. Intel also touts Hyper-Pipelined technology as a feature, although the extent to which a significantly longer instruction pipeline benefits immediate performance is highly questionable. It's also an issue that affects the Prescott CPU greatly.

A first look

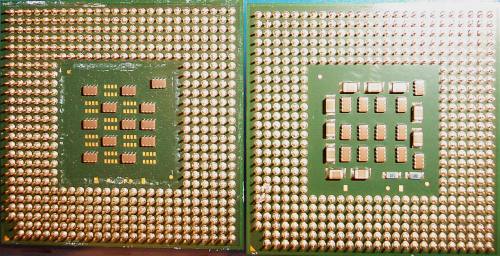

The lower shot shows the Prescott and Northwood CPUs together, with the Prescott on the right. The test sample was a 3.2GHz Engineering Sample with 14-16x multipliers. The Prescott Pentium 4s will be introduced at 2.8, 3.0, 3.2, and 3.4GHz speeds. The 2.8GHz version will be available in 133MHz (without Hyper-Threading support) and 200MHz Front-Side Bus versions, differentiated by the naming reference of either 2.8A or 2.8E GHz. All other CPUs in the range will have the letter E tacked on to the name, presumably to avoid confusion with the similar Northwood models.
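
The relationship between the multiplier range and the launch speeds can be sketched in a few lines. This is only an illustration: it assumes the usual rule that core clock equals multiplier times base bus clock, and that the 200MHz Front-Side Bus is the base clock behind the quad-pumped 800MHz system bus. The function name is ours, not Intel's.

```python
# Core clock = multiplier x base bus clock (assumed rule of thumb).
# 200MHz is the base clock of the quad-pumped 800MHz system bus.
FSB_MHZ = 200


def core_clock_mhz(multiplier: int, fsb_mhz: int = FSB_MHZ) -> int:
    """Return the core clock in MHz for a given multiplier and bus clock."""
    return multiplier * fsb_mhz


# The engineering sample exposes multipliers from 14x to 16x.
sample_clocks = [core_clock_mhz(m) for m in range(14, 17)]
print(sample_clocks)  # [2800, 3000, 3200]
```

On that basis, the sample's 14-16x range covers the 2.8E, 3.0E and 3.2E speeds on a 200MHz bus.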

Note the standard Socket-478 package that's been home to every single Northwood Pentium 4. That's about to change in the near future. The very first batch of Prescotts will use the familiar S478 micro Pin Grid Array (mPGA478) packaging, just as you see above. However, Intel has made definite plans to move to the Land Grid Array 775-pin packaging for Prescott speeds in excess of 3.4GHz. That also spells the end of the current Northwood line, for it won't be the recipient of the packaging and socket change. Intel has compatible chipsets, codenamed Grantsdale and Alderwood, at the ready now. They're slated for launch at the back end of March. This also implies that whilst your shiny new Canterwood may comfortably support the first run of Prescotts, you'll need to invest in another motherboard if keeping up with cutting-edge CPUs is a priority. We can understand Intel's rationale here. The Prescott needs to sell well initially, ergo it needs to be packaged in the current style and immediately usable on the thousands of high-end Pentium 4 motherboards. Intel also recognises that LGA 775-pin packaging is perhaps a necessity for the ultra-high speeds the Prescott is scheduled to hit.

Improvements and differences

Rather than spell out every single feature inherent in the Prescott core, it's prudent to focus on the main differences between it and the present Northwood - an evaluation of which should yield important information with respect to probable performance and scalability. We've covered the imminent package change above. Let's take a look at some of the more notable others.

90-nanometer process technology

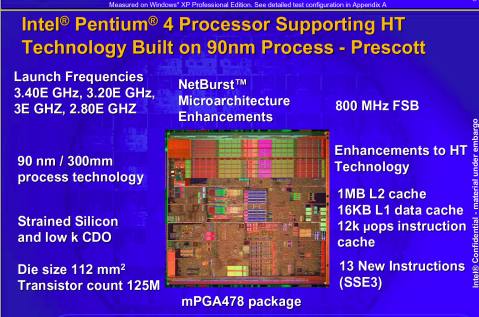

A reduction in size of the manufacturing process technology is a key method of reducing operating voltage. The ability to fit more chips per given wafer also accrues financial advantages. The present Northwood is based on 0.13-micron (130 nanometer) technology. Smaller transistors can inherently switch (operate) faster than larger ones, and Intel is now using lithography-etched transistors with a gate length of just 50nm (0.05-micron) and an oxide layer of around 1.2nm. This compares favourably with the Northwood's 60nm gate length and 1.6nm oxide layer. Intel is now also using strained silicon between the substrate and transistor: silicon which is literally pulled further apart to reduce electrical resistance (atoms are further apart, as I understand it) and allow current to travel faster. The upshot is naturally faster, low-power transistors augmented by a faster electron flow. As you may have guessed, these two factors combine to increase the frequency at which the chip can operate. We fully expect to see air-cooled 4.5GHz Prescott CPUs. As usual with Pentium 4s, the Prescott will be available in a multi-VID configuration, with voltages ranging from 1.25 to 1.4V.
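
To put a rough number on the chips-per-wafer point, here is a back-of-the-envelope sketch. It assumes ideal scaling, where every feature shrinks in proportion to the process node so that area scales with the square of the ratio. Real dies never shrink this cleanly, and the function name is purely illustrative.

```python
def ideal_area_scale(old_nm: float, new_nm: float) -> float:
    """Area ratio if every feature shrank linearly with the process node.

    Assumption: perfect scaling, which real designs only approximate.
    """
    return (new_nm / old_nm) ** 2


scale = ideal_area_scale(130, 90)
print(f"Same design on 90nm: about {scale:.0%} of its 130nm area")
print(f"Ideal dies-per-wafer gain: about {1 / scale:.1f}x")
```

Under that idealised assumption an unchanged design would occupy a little under half the area, which is why a die shrink leaves room for extra cache without the die ballooning.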

Deeper pipeline

A modern CPU's life is all about maximising its resources to the absolute fullest. A bad architecture is one where parts of the instruction execution sequence are left idling, waiting to be used again. To put it very simply, instructions are fetched from main memory, decoded into a form that's recognisable to the CPU, executed, and then the results are stored back into memory. A channelled pipeline is required to keep the CPU satiated. For example, whilst one instruction is being carried out another can be loaded up behind, ready to be executed. In order to be completed, some instructions require information from others yet to be fetched. This is where intelligent prefetching comes in. In short, a pipeline is good; it keeps the CPU busy by letting it get on with the menial adding, subtracting and multiplying work (execution) whilst fetching the next instruction from memory. The Pentium 4 Northwood uses a 20-stage pipeline, and execution doesn't occur until stage 17. The upside is that each stage does so little work, comparatively, that it can be run at very high clock speeds. The downside is that it takes a long time for an instruction to execute and for the result to be stored. It's a trade-off between pure MHz speed and work accomplished per cycle.
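
The benefit of overlapping instructions can be shown with a deliberately idealised cycle count. This is a textbook sketch, not a model of the Pentium 4: it assumes one cycle per stage, one instruction entering per cycle, and no stalls or flushes.

```python
def cycles_unpipelined(instructions: int, stages: int) -> int:
    """Each instruction passes through every stage before the next starts."""
    return instructions * stages


def cycles_pipelined(instructions: int, stages: int) -> int:
    """Ideal pipeline: fill it once, then retire one instruction per cycle."""
    if instructions == 0:
        return 0
    return stages + instructions - 1


n = 1000
print(cycles_unpipelined(n, 20))  # 20000
print(cycles_pipelined(n, 20))    # 1019
print(cycles_pipelined(n, 31))    # 1030
```

In the ideal case extra stages cost almost nothing in cycles, only a slightly longer fill time, while each shorter stage allows a faster clock. The catch is that the ideal case assumes the pipeline never has to be emptied.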

The other major problem with a long pipeline is in not keeping it, for want of a better expression, fed correctly. The Pentium 4 Prescott's integer pipeline length has been increased to a staggering 31 stages. That implies even less work per stage and higher possible clock speeds. It also raises the possibility of severe performance penalties for branch misprediction. Branch prediction is where the CPU guesstimates which instructions will be needed next. If it guesses wrong, which it does less than 10% of the time, the instruction that's been sent for processing is useless. It needs to be flushed out which, in a 31-stage pipeline, takes all that much longer. Why go for such a long pipeline? Probably to ramp up the overall clock speed and increase the performance in computational activities such as media encoding. Intel reckons that the branch prediction unit, responsible for guesstimating what will be required next, has been improved. It needs to be, too. Bottom line - lots of MHz but perhaps not as much performance as we had anticipated.
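
A crude model gives a feel for what the longer pipeline costs. Every number below is an assumption chosen for illustration, not a measurement: a base of one cycle per instruction, one instruction in five being a branch, a 5% misprediction rate (inside the "less than 10%" quoted above), and a flush penalty equal to the full pipeline depth.

```python
def effective_cpi(pipeline_stages: int,
                  branch_fraction: float = 0.20,   # assumed share of branches
                  mispredict_rate: float = 0.05,   # assumed, below the 10% quoted
                  base_cpi: float = 1.0) -> float:  # assumed ideal throughput
    """Cycles per instruction once misprediction flushes are counted.

    Simplification: a flush is charged the whole pipeline depth.
    """
    penalty_cycles = pipeline_stages
    return base_cpi + branch_fraction * mispredict_rate * penalty_cycles


northwood_cpi = effective_cpi(20)
prescott_cpi = effective_cpi(31)

# Clock speed ratio needed for the deeper pipeline just to break even.
clock_needed = prescott_cpi / northwood_cpi
print(f"20 stages: {northwood_cpi:.2f} CPI, 31 stages: {prescott_cpi:.2f} CPI")
print(f"Break-even clock increase: {clock_needed - 1:.1%}")
```

With these made-up inputs the 31-stage design needs roughly 9% more clock speed just to draw level, before any gain from better branch prediction or larger caches is counted. Change the inputs and the gap moves, which is precisely why the improved prediction unit matters.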

Greater levels of on-chip cache

The 31-stage pipeline will undoubtedly be a performance inhibitor. Intel knows it. The Northwood benefitted from an increase in on-chip storage cache. The Prescott now features 1MB of L2 cache with 8-way associativity and 16KB of L1 data cache, doubling the Northwood's allocation in each case. The L1 execution cache, the handy portion of die where already-decoded instructions are kept (taking the decode stage out of the loop), remains at the Northwood's 12K micro-op level. A gross generalisation here: processors are fast, system memory is slow. Keeping required data in cache greatly benefits performance, especially in situations where it will be re-used. That's one reason why the Pentium 4 Extreme Edition is so good at gaming. At a guess, the Prescott should be quite good at gaming. The added cache has boosted the transistor count to a lofty 125 million. Even so, die size comes in at 112mm², which is still smaller than the Northwood's. 0.09-micron processing has its advantages.
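
The "processors are fast, system memory is slow" generalisation can be expressed with the standard average-access-time formula. The latencies and miss rates below are invented purely to show the shape of the calculation. They are not Prescott or Northwood figures.

```python
def average_access_cycles(hit_cycles: float,
                          miss_rate: float,
                          miss_penalty_cycles: float) -> float:
    """Average cost of a cache access: hit time plus the weighted miss cost."""
    return hit_cycles + miss_rate * miss_penalty_cycles


# Hypothetical numbers: a 20-cycle cache hit and a 300-cycle trip to memory.
smaller_cache = average_access_cycles(20, miss_rate=0.10, miss_penalty_cycles=300)
larger_cache = average_access_cycles(20, miss_rate=0.05, miss_penalty_cycles=300)

print(f"Smaller cache: {smaller_cache:.0f} cycles on average")
print(f"Larger cache:  {larger_cache:.0f} cycles on average")
```

Because a miss costs so much more than a hit, even a modest drop in miss rate from a bigger cache shows up clearly in the average.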

SSE3

CPU and software engineers can often work in collaboration to increase overall performance and efficiency. One method was to use special CPU registers that could process multiple data with a single instruction, thereby using the CPU's resources to the fullest. This approach became known as SIMD (Single Instruction, Multiple Data). Efficiency rose because the CPU was doing more work at a given speed, but the over-riding problem was ensuring that the CPU knew exactly what kind of data could be used in these special, performance-boosting registers; code had to be specially written for it. The Pentium 4 Northwood currently benefits from the use of optimised data with MMX, SSE, and SSE2 (Streaming SIMD Extensions 2) compliance. SSE2 introduced 144 new instructions that work on 128-bit registers. 128-bit was useful for calculations that required a high degree of accuracy - using 64-bit floating-point numbers, for instance. You'll note that applications can benefit enormously from careful and studied SIMD compilation. It's easy to see why. That's why developers of professional image-editing programs, which require extremely high levels of accuracy, optimise code thoroughly. The Athlon 64 is now the recipient of SSE2.

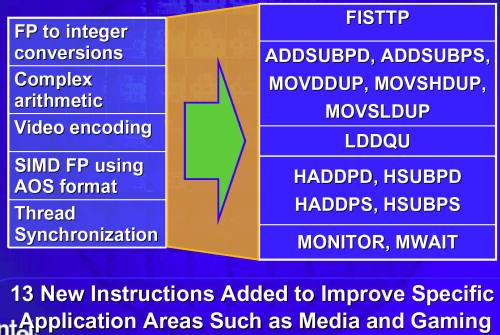

Intel has sought to refine the area of SIMD technology further. The Prescott receives 13 new instructions that, if optimised for, will further boost performance in gaming and certain media scenarios. Intel calls it SSE3. It is outside the scope of this review to delve into intense detail here, but SSE3 appears to be the developers' friend.

Instructions with mathematical connotations. The main thought to take away from SSE3 is simplification of the laborious SIMD compilation process. The left-hand side attempts to explain the benefits accruing from the 13 new instructions listed on the right. SSE3 is just an evolution of SSE2. Nothing spectacular in itself, but it will open the door for more and easier SIMD optimisation, which is clearly good. It's a shame that the Prescott doesn't go further and extend the Northwood's 8 general-purpose registers. That would make developers' work that much easier, too. Even the Athlon 64, in 64-bit mode, can use 16 GP registers.
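
As a taste of the simplification on offer, one of the SSE3 additions is a horizontal add (HADDPS), which sums neighbouring values inside a register rather than matching lanes across two registers. The sketch below emulates that lane behaviour with plain Python lists. It is not real SIMD code and uses no intrinsics, and the helper names simply borrow the instruction mnemonics.

```python
from typing import List

Vec4 = List[float]  # stands in for a 128-bit register holding four floats


def mulps(a: Vec4, b: Vec4) -> Vec4:
    """Lane-by-lane multiply, as packed SSE arithmetic works."""
    return [x * y for x, y in zip(a, b)]


def haddps(a: Vec4, b: Vec4) -> Vec4:
    """Emulate HADDPS: add adjacent pairs within a, then within b."""
    return [a[0] + a[1], a[2] + a[3], b[0] + b[1], b[2] + b[3]]


def dot4(a: Vec4, b: Vec4) -> float:
    """Four-element dot product: one multiply and two horizontal adds."""
    products = mulps(a, b)
    sums = haddps(products, products)  # [p0+p1, p2+p3, p0+p1, p2+p3]
    sums = haddps(sums, sums)          # every lane now holds the full sum
    return sums[0]


print(dot4([1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]))  # 70.0
```

Without a horizontal add, the same reduction needs extra shuffle steps to line the values up before adding them, which is the kind of chore SSE3 is meant to remove.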

Improved Hyper-Threading

Hyper-Threading is good. Hyper-Threading is downright lovely, in fact. The ability to execute more than one thread and keep those execution units busy is a sure-fire way of increasing efficiency without having to increase clock speed. That's contingent upon having an OS that's aware of how to use a logical processor correctly. Windows 98 will just ignore it. Hyper-Threading works best when two pieces of software that use different execution units are run concurrently. There are always problems of scheduling and thread overlapping, but HT Tech works. Friends of mine have been wowed by Athlon 64 performance, but have consequently missed that oh-so-smooth feeling that HT provides.

For a clue as to how the Prescott has been able to increase the efficiency of HT Tech a touch more, have another look at the 13 new SSE3 instructions, especially MONITOR and MWAIT. In principle, these add to the quality of Hyper-Threading by increasing its scheduling and execution efficiency. The stumbling block is that software will have to be recompiled to take advantage of the instructions. So the Prescott is better at Hyper-Threading than the Northwood today, but it's not because of MONITOR and MWAIT. It has more to do with the increased cache size, which keeps the CPU fed with plenty of instructions.

Summary

Does the Prescott have enough going for it, on a theoretical level, to be worthy of being the Northwood's heir? 90nm process technology, greater levels of on-chip cache, and SSE3's ability to make SIMD implementation easier and more productive are all good points. The longer pipeline, however, may well see the Prescott perform slower than the present Northwood at the same clock speed. It's a calculated gamble. Intel needs to raise the frequencies to 5GHz and beyond. The primary method of getting there, lengthening the pipeline, may erode the other performance attributes of the new CPU. We'll soon find out.