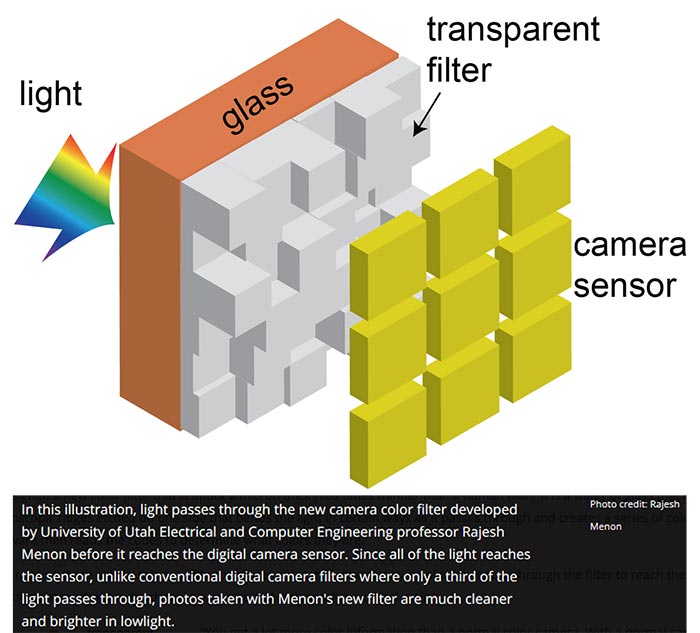

Engineers at the University of Utah have developed a new camera filter that enables sharper, brighter images in low-light conditions. Traditional digital cameras use a Bayer filter, which lets only red, green or blue light through to each pixel, so only about a third of the incoming light reaches the sensor. The new colour filter reads 25 shades, letting far more light through and producing a much more natural range of hues in your photos. The filter is about one micron thick and requires new software, but it is thinner and less complicated to make than current filters.

Professor Rajesh Menon (above) led the development of the new filter, working with doctoral student Peng Wang. Menon says the filter can be used with any kind of camera but was designed specifically for smartphones.

Low-light performance remains a common complaint about smartphone cameras. Recent advances such as optical image stabilisation (OIS) and larger sensors have improved low-light shots, but there is clearly more to be done.

Traditionally, a filter sits above the camera sensor and passes only the three primary colours (RGB) to it. Dividing incoming light this narrowly means much of it is never used. "If you think about it, this is a very inefficient way to get colour because you're absorbing two thirds of the light coming in," Menon says. "But this is how it's been done since the 1970s. So for the last 40 years, not much has changed in this technology."
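To make the "two thirds" figure concrete, here is a minimal Python sketch of an idealised Bayer mosaic. It assumes white light with equal energy in the red, green and blue bands, and a perfect filter that passes exactly one band per pixel in the usual RGGB layout (twice as many green pixels as red or blue). Real filters have overlapping, imperfect passbands, so actual losses differ; the function names are hypothetical and this is not the Utah team's model.

```python
def bayer_pattern(rows, cols):
    """Return an RGGB Bayer mosaic as a list of rows of band labels."""
    tile = [["R", "G"], ["G", "B"]]  # repeating 2x2 Bayer tile
    return [[tile[r % 2][c % 2] for c in range(cols)] for r in range(rows)]


def transmitted_fraction(pattern, band_energy):
    """Fraction of incident light that reaches the sensor through the mosaic.

    Assumption: each pixel receives the energy of all bands but its filter
    passes only the energy in its own band; everything else is absorbed.
    """
    total_in = 0.0
    total_out = 0.0
    for row in pattern:
        for band in row:
            total_in += sum(band_energy.values())
            total_out += band_energy[band]
    return total_out / total_in


# Idealised white light: equal energy in each of the three bands.
white = {"R": 1.0, "G": 1.0, "B": 1.0}
print(transmitted_fraction(bayer_pattern(4, 4), white))
```

Under this equal-energy assumption every pixel passes one unit out of three, so the mosaic transmits one third of the light regardless of its size.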

The new colour filter hardware is a wafer of glass about a micron thick, etched with precise diffraction patterns. Instead of reading strict RGB values, the sensor below is programmed to decode 25 hues. "It's not only better under low-light conditions but it's a more accurate representation of colour," explains Menon.

The technology's prospects look good, as it could be "cheaper to implement in the manufacturing process because it is simpler to build as opposed to current filters, which require three steps to produce a filter that reads red, blue and green light". Prof Menon hopes to commercialise the new colour filter technology and has set up a company, Lumos Imaging, to do so. Several large electronics and camera companies are in negotiations to bring it to market, and it is expected to appear in the first devices within approximately three years.